Is there a big performance difference between write back and write through in terms of runtime? I would assume that a write in a write through policy would take more time since data has to be written also to memory.

I don't understand how we calculated the length of the tag for each line to be 26 bits.

-

I understand that the first 20 bits are used to map memory addresses to cache lines. Meaning that if the memory address is 64 bits long, the first or last 20 bits could be used to determine which line in cache the contents at this address would be stored at.

-

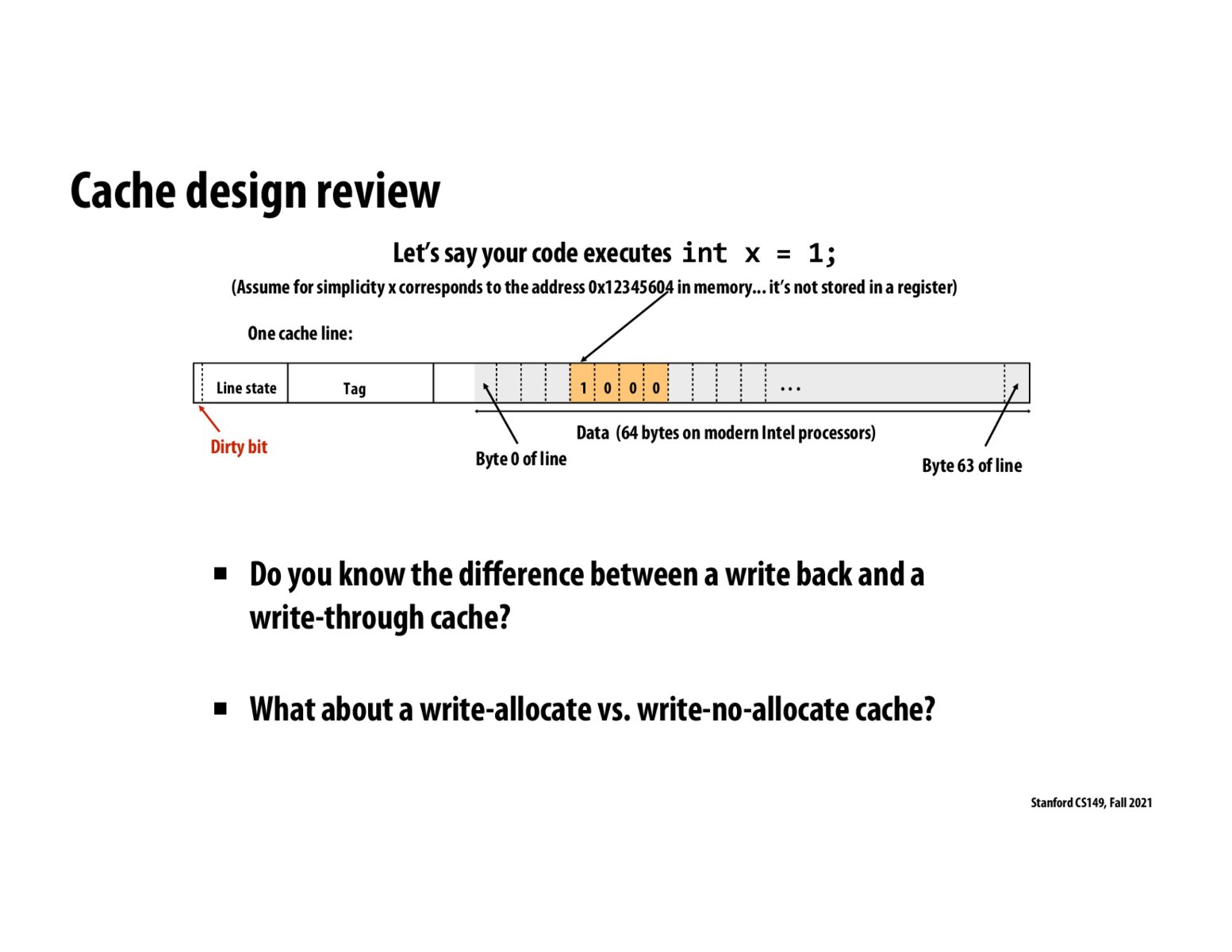

Why do we need the 6-bit offset? Don't we store ALL 64 consecutive bytes of memory into the cache line at once? Would we need to specify where exactly in those 64 bytes the value of the integer "x" would be stored?

Let me know if I am thinking about this correctly.

@nassosterz, I think there is a higher overhead associated with a write-through policy compared to a write-back policy. With a write-through policy, memory has to be updated on every write, regardless of whether the line is ever evicted from the cache, and each update incurs the latency of going to memory. A write-back policy only incurs this overhead when a dirty cache line is evicted, which may occur much less frequently than writes to that line. This is my intuition. I would be glad to know if it's wrong or if I misunderstood something from the lecture.

A write-allocate cache loads the enclosing cache block into the cache when a write misses, then performs the write. A write-no-allocate cache writes directly to memory on a write miss without bringing the block into the cache. Intuitively, write-allocate seems preferable for writes that will likely be read soon after. On the other hand, for writes that likely won't be read often or in the near future, write-no-allocate might be better.

Are we assuming fully associative cache in this case? If not, shouldn't we dedicate some bits to indicate the index within a cache?

@czh I remember in PA1 problem 5 there's a bonus question asking about what's the 4th memory operation for 2 loads and 1 store. And the answer is that the store operation will first load the cache line and then write it back to the memory. Does this mean that a write-allocate cache is used there? Will it still be the case if a write-no-allocate cache is used?

@leave

"Does this mean that a write-allocate cache is used there?" Yes. "Will it still be the case if a write-no-allocate cache is used?" No. My reasoning is that with a write-no-allocate cache, only read misses load data into the cache. "The store operation will first load the cache line and then write it back to the memory" implicitly means loading data into the cache on write misses.

Is this "header" stored with the cache data? Does that mean that each cache line is not really a power of two in length? "64 + X"?

@alrcdilaliaou I have a similar question as to what data the header stores for the cache data in the diagram above. Additionally, am I missing something in the counting to determine that the offset is 6 bits—just wondering where we can find this? To have an explanation of each component of this diagram and how it relates to write-through and write-back would be super helpful!

What are the line state and tag for? I feel like we've gone over a few bits of each but will we see a comprehensive reference?

@ecb11 In this example, each cache line can store 64 bytes of data. We need the offset bits to know which byte out of the 64 bytes we're requesting (since the x86 architecture is byte-addressable). You can think of the offset as an index we use to access the specific byte. Lastly, we need log2(64) = 6 bits to represent decimal 0 through 63.
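To make the bit counting concrete, here is a minimal sketch of how an address could be split into tag and offset. It assumes 32-bit addresses (my assumption, chosen because a 26-bit tag plus a 6-bit offset adds up to 32) and no index bits, i.e. a fully associative cache. The name `split_address` is made up for illustration.

```python
LINE_SIZE = 64       # bytes of data per cache line (from the slide)
ADDRESS_BITS = 32    # assumption: 26-bit tag + 6-bit offset = 32-bit address

OFFSET_BITS = LINE_SIZE.bit_length() - 1   # log2(64) = 6
TAG_BITS = ADDRESS_BITS - OFFSET_BITS      # 26 when there are no index bits


def split_address(addr):
    """Return (tag, offset) for a byte address, assuming no index bits."""
    offset = addr & (LINE_SIZE - 1)   # low 6 bits: which byte within the line
    tag = addr >> OFFSET_BITS         # remaining high bits: which line of memory
    return tag, offset


if __name__ == "__main__":
    tag, offset = split_address(0x12345678)
    print(f"tag={tag:#x} offset={offset}")
```

Two addresses with the same tag fall in the same 64-byte line, and the offset picks the byte within it.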

A cache line is usually longer than a memory bus. It might take multiple memory bus transactions to actually move the contents of a line.

Write back - When a write operation modifies a line in cache, the dirty bit is set so that when the line is evicted from cache, the dirty bit serves as an indication that this update should be pushed into the memory as well.

Write allocate - When a write misses in the cache, the corresponding line in memory is pulled into a line in cache. Data is written into the cache line and the dirty bit is set. Later, when the cache line is evicted, the modified data is written into memory via the write-back property of the cache.

@gklimias Yes, I think this would be fully associative. If we had an n-way set-associative cache, some address bits would serve as a set index, and we'd need to check the n locations in that set for the address.
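Here is a rough sketch of how the tag, index, and offset widths would change with associativity. The 32 KiB cache size and 32-bit address width are hypothetical numbers I picked for illustration (they are not from the slide), and `field_widths` is a made-up helper that assumes all sizes are powers of two.

```python
def field_widths(cache_bytes, line_bytes, ways, address_bits=32):
    """Return (tag_bits, index_bits, offset_bits); all sizes must be powers of two."""
    num_lines = cache_bytes // line_bytes
    num_sets = num_lines // ways                  # 1 set means fully associative
    offset_bits = line_bytes.bit_length() - 1     # log2(line size)
    index_bits = num_sets.bit_length() - 1        # log2(number of sets)
    tag_bits = address_bits - index_bits - offset_bits
    return tag_bits, index_bits, offset_bits


if __name__ == "__main__":
    CACHE = 32 * 1024   # hypothetical 32 KiB cache
    LINE = 64           # 64-byte lines, as in the slide
    for ways in (512, 8, 1):   # fully associative, 8-way, direct mapped
        print(ways, field_widths(CACHE, LINE, ways))
```

With these numbers, only the fully associative configuration has zero index bits and a 26-bit tag. Adding index bits shrinks the tag by the same amount.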

When we talk about the size of the cache line, are we only talking about the data portion of the cache line or the whole thing we see in the slide (i.e. dirty bit, line state, tag, data, etc.)?

- Write-back cache: writes are made directly to the cache; main memory is only updated when a cache line is evicted (and the dirty bit is set)

- Write-allocate cache: on a write miss, instead of just writing to main memory, allocate a line of cache for the write (bring the line in from main memory as if it were a read), write, and mark the line as dirty

- Write-no-allocate cache: on a write miss, write directly to main memory

- Write-through cache: every write is sent to main memory immediately (and also updates the cache if the line is present), so memory is always up to date
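A toy simulation of the four definitions above might help compare the memory traffic they generate. This is only a sketch under my own assumptions: a tiny fully associative cache with LRU eviction, and hypothetical names (`Cache`, `run`). It counts memory transactions rather than modeling latency or data.

```python
from collections import OrderedDict

LINE_SIZE = 64  # bytes per cache line


class Cache:
    def __init__(self, num_lines, write_back, write_allocate):
        self.num_lines = num_lines
        self.write_back = write_back
        self.write_allocate = write_allocate
        self.lines = OrderedDict()  # tag -> dirty flag, ordered LRU to MRU
        self.mem_reads = 0          # line fills from memory
        self.mem_writes = 0         # write transactions sent to memory

    def _fill(self, tag):
        if len(self.lines) >= self.num_lines:
            _, dirty = self.lines.popitem(last=False)  # evict the LRU line
            if dirty:
                self.mem_writes += 1                   # write back dirty data
        self.lines[tag] = False
        self.mem_reads += 1

    def read(self, addr):
        tag = addr // LINE_SIZE
        if tag not in self.lines:
            self._fill(tag)
        self.lines.move_to_end(tag)

    def write(self, addr):
        tag = addr // LINE_SIZE
        hit = tag in self.lines
        if not hit and self.write_allocate:
            self._fill(tag)          # bring the line in as if it were a read
            hit = True
        if hit:
            self.lines.move_to_end(tag)
        if hit and self.write_back:
            self.lines[tag] = True   # defer the memory update until eviction
        else:
            self.mem_writes += 1     # write-through, or a no-allocate miss

    def flush(self):
        # Write back every dirty line, as if all lines were evicted.
        self.mem_writes += sum(self.lines.values())
        for tag in self.lines:
            self.lines[tag] = False


def run(write_back, write_allocate, n=100):
    """Write the same address n times, flush, and return (mem_reads, mem_writes)."""
    cache = Cache(4, write_back, write_allocate)
    for _ in range(n):
        cache.write(0x1000)
    cache.flush()
    return cache.mem_reads, cache.mem_writes


if __name__ == "__main__":
    print("write-back + write-allocate:", run(True, True))
    print("write-through + write-no-allocate:", run(False, False))
```

For repeated writes to one line, the write-back configuration touches memory once for the fill and once for the final write-back, while write-through sends every write to memory. Note that the counter mixes single-word writes and whole-line write-backs, so it is a transaction count and not a byte count.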

@tmdalsl, when we talk about the size of the cache line I believe we are talking about the whole thing we see in the slide.

I understand that addresses are assigned to cache lines based on their address number and the size of the cache, but what confuses me is how variables are assigned these addresses. Particularly those that are initialized one after another.

Write-back writes a line back to main memory when it is evicted (if the dirty bit is set), whereas write-through sends each write to main memory immediately.