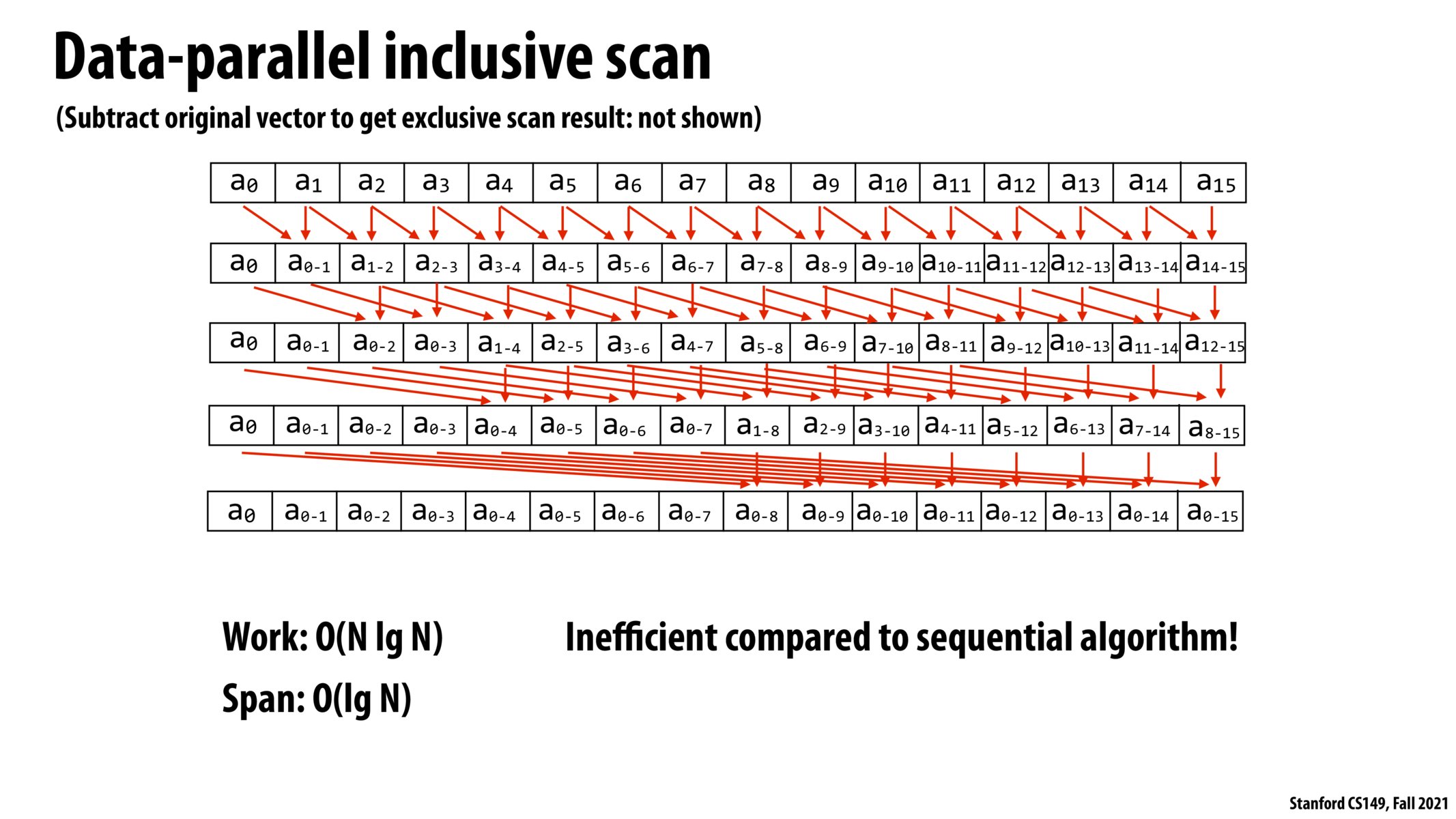

I think the O(NlogN) work represents the total number of works from all parallel processors and therefore might not be an indicator for the performance of running the program on these processors in parallel. Span is more representative of the actual runtime when scan runs on these parallel processors because it means the number of steps required to finish the scan operations, where each step would require parallelizing operations on multiple processors.

As a followup @jaez, span can be thought of as the depth of the dependency tree for the algorithm.

Does span law give a close upper bound for execution time complexity for p processors or do we usually find that execution time complexity for p processors >> span?

I think Kayvon mentioned that the first has N / 2 work, the next has N / 4, and so on, but why is this? Isn't there N - 1 parallelizable work in the first step, N - 2, etc.? I don't see why we're halving it in 2

Would the number of parallel elements performing this task decrease every step. If so, how would assignment of these work?

Or would they just be "scheduled tasks" as it were

work and span are two measures of parallel computations. Work refers to the total number of computations across all cores, whereas span is the height of the dependency tree. Two algorithms with the same O(log N) on span can have very different work measure

If we naively try to parallelize certain functions that work with sequences, then we could end up doing more work than what we would've been doing had we used the serial implementation of the function (i.e. shown in the current slide). We have to make sure that the parallel work can also give us not only parallelism but less work as a result of the parallelism.

Please log in to leave a comment.

In the lecture it was mentioned that the "complexity" of this algorithm is O(N), and the minimum time to run given infinitely many processors is the "span" and is O(logN). I am a bit confused what "complexity" refers to in this case, if it is different from the "span".