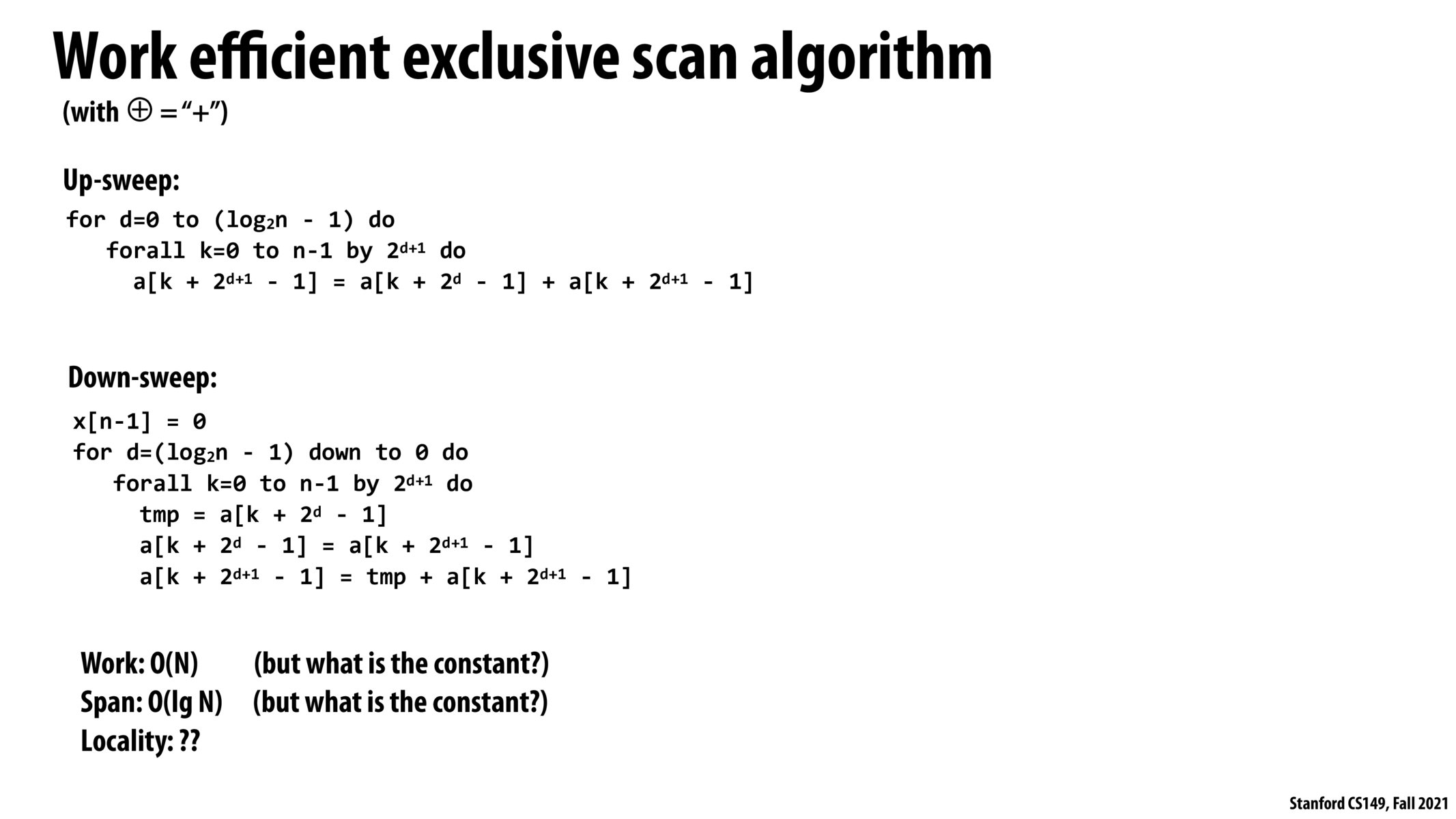

Although this implementation does asymptotically less work than the data-parallel inclusive scan of previous slides, the trade-off is that the constant in the span is 2 because of the two phases, each with a span of O(log N). The data-parallel inclusive scan had a constant of 1. Since the span is a measure of the minimum number of steps required for a computation given unlimited processors, the increase in the span is a significant consideration for parallel systems.

However, the particular access pattern for this algorithm is that the number of threads modifying the array decreases exponentially during the up sweep, before increasing exponentially again during the down sweep. If I've understood correctly, at most N/2 threads are needed in any iteration of either sweep, and so this algorithm could process an array twice as long as the previous data-parallel implementation for a fixed number of threads. To further complicate the analysis, the previous implementation requires fewer threads as it progresses, too, although it seems to use asymptotically more threads than this work-efficient implementation in any iteration.

It is interesting to notice that even we are more efficient in terms of work, we may not achieveing ideal performance in terms of span from parallel computing's perspective

I think there may be a typo in the first line of the down-sweep phase. It should say a[n-1] = 0.

Please log in to leave a comment.

Locality in this work efficient scan is going to be inferior, as we access elements widely separated in memory. Unless the cache lines hold the entire working set, we may find the cache replacing cache lines quite frequently.