The map and fold/reduce operations are also used in distributed programming models for large-scale data workloads like MapReduce, are there any other such programming models where operations like filter, scan, join, sort, partition/flatten, etc are used?

The Java Stream class seems to be an example of high-performance parallel implementations of these operations. Using the parallelStream() function on a Java collection will returns a possibly parallel Stream with this collection as its source (depending on hardware support and other stuff). One may then perform many of the operations listed in this slide on this Stream in parallel. Here is some more information on this: https://docs.oracle.com/javase/tutorial/collections/streams/parallelism.html

Does the existence of a sort operation on a sequence, for example, imply the existence of separately designed data structures for sequences?

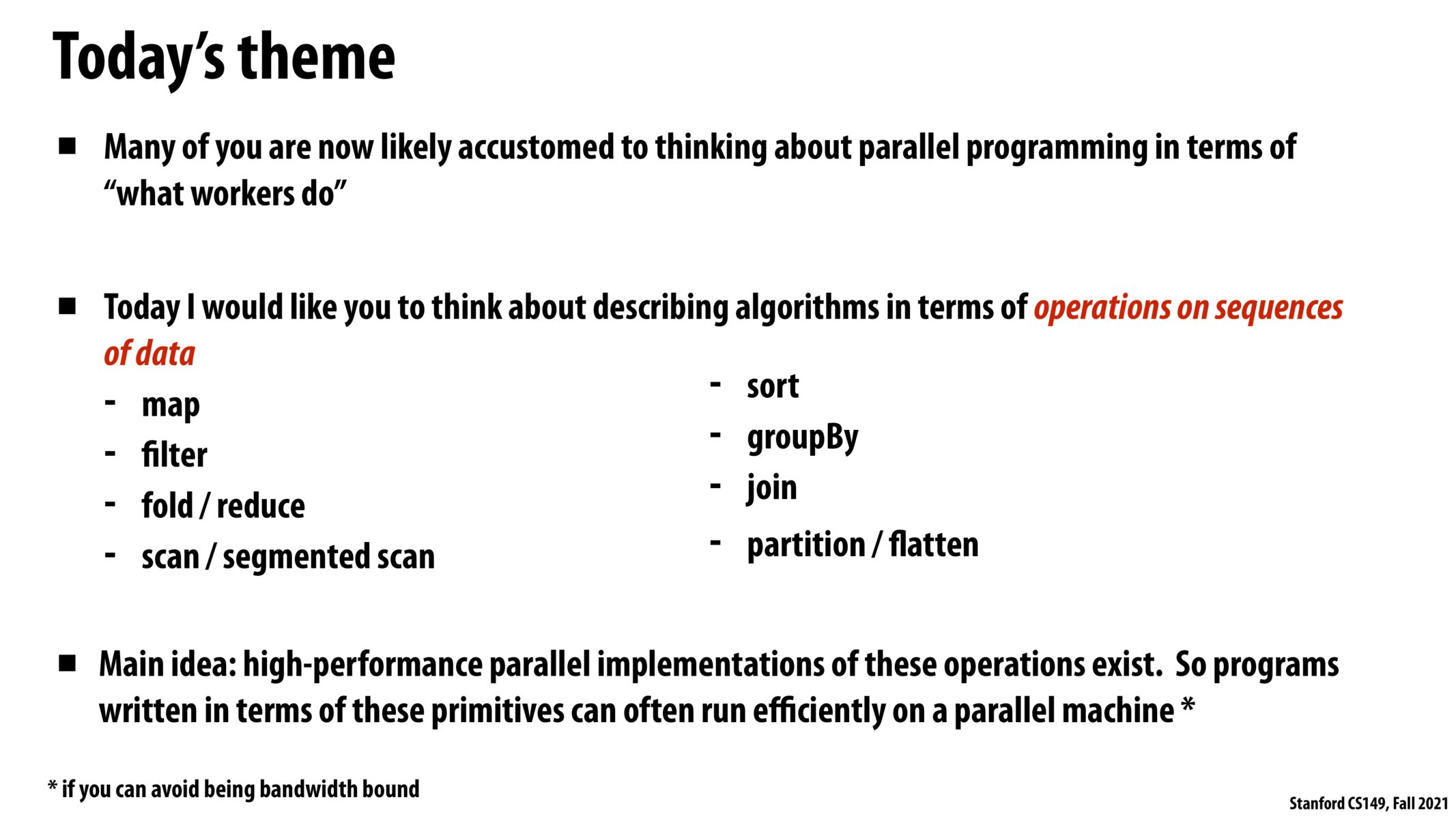

This goes back to the key idea of abstraction vs implementation. The programmer can use a primitive (fold, for example) to describe the operation of running fold on a sequence of data, but the hardware or compiler implementation might reorder and parallelize the computations to take advantage of all compute resources and improve the performance of the algorithm.

Is the primary purpose of these functions that they allow programmers to write parallel code without having to write SIMD or CUDA code? Rather, these functions provide an abstraction that hides implementation and architecture details?

@shreya_ravi I think that is one of the reasons. A bit more broadly, it allows for optimized implementations as noted in the `Main idea'' on the slide. For example,map` assumes something very strong about how you want to process your data -- every operation in the map is independent (note that things like cache locality of different operations in the map are still not known however).

This is related to the ideas used for RISC in computer architecture. If you can write programs using a few highly optimized primitives, that can actually be more performant than having many complex primitives (or writing all the low level code yourself, which also takes much time to write and debug).

Are those operations mentioned in the slide the most commonly used ones? Are most programs implemented sorely with those operations for efficiency?

Please log in to leave a comment.

Learning the existing collection of well known and high performing parallel algorithms for sequences, trees, etc, and designing your program in those terms seems like a huge time saver.