Here, we can reduce the number of bytes we need to read from memory by compressing the data. Using lower-precision values lets us store more values in the same amount of memory and read more of them with the same memory bandwidth.

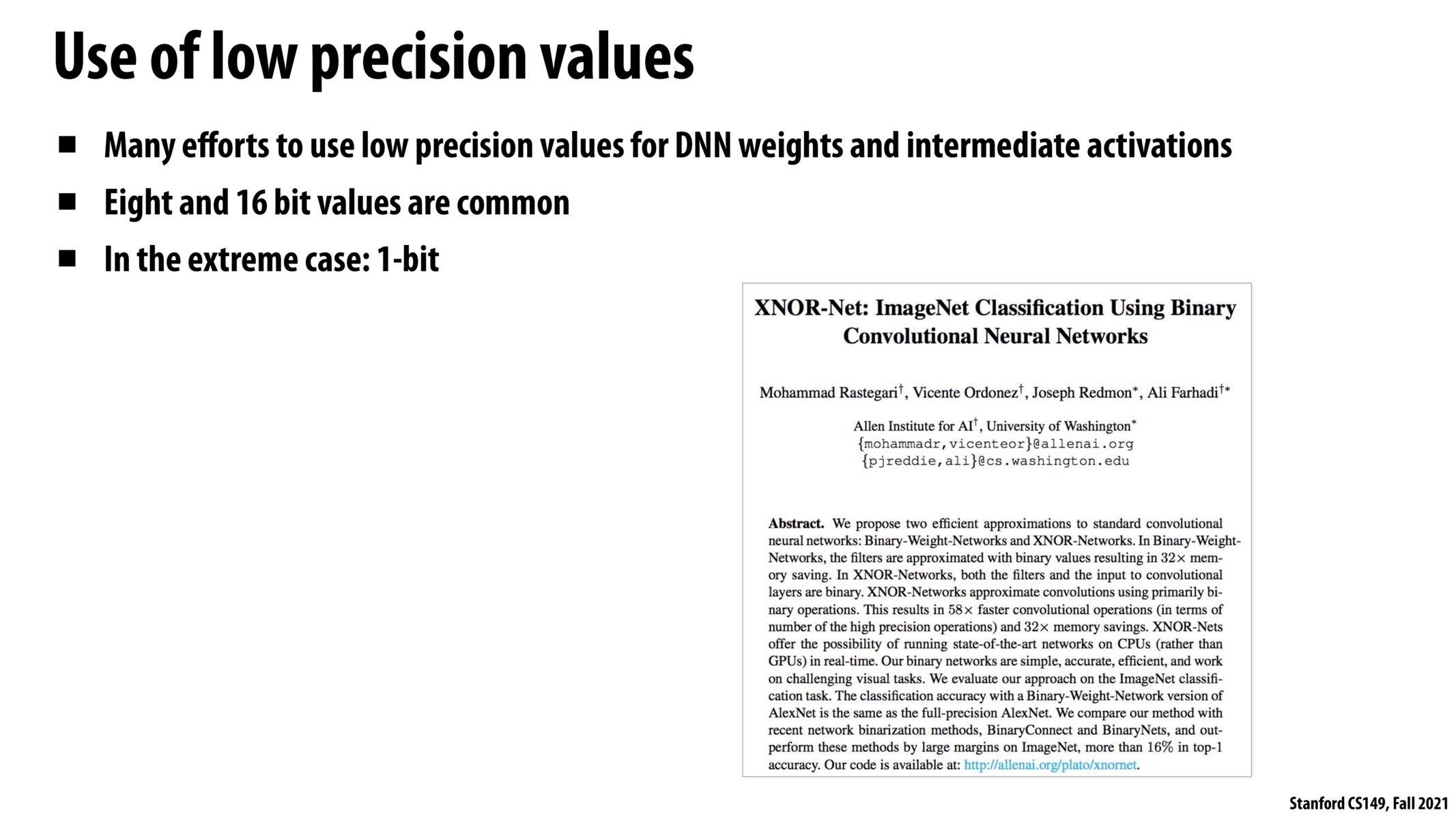

I can understand using 8- or 16-bit numbers since typically we add regularization anyway to encourage "reasonable" parameter values, but I find it hard to believe that 1-bit values produce effective models. If the research shows they do, that's very impressive!

@matteosantamaria I thought the same thing! I don't know anything in particular about this domain, but I assume that one-bit models use far more layers to reach the same level of accuracy or granularity. If the number or size of layers has a large positive effect on accuracy, then a one-bit model, which fits 8x-16x as many values in the same memory as an 8- or 16-bit model, might have an advantage. I did some brief Googling and found what seems to be a pretty foundational paper (https://arxiv.org/abs/1602.02830) in which they use the values -1/+1 rather than 0/1.
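
To make the -1/+1 idea concrete, here is a minimal sketch of why those values are convenient. If each value is stored as one bit (1 for +1, 0 for -1), a dot product becomes an XNOR followed by a bit count. My understanding is that the paper relies on this kind of bitwise arithmetic, but the helper names `pack_signs` and `binary_dot` are my own and this is not the authors' code.

```python
def pack_signs(values):
    """Pack a list of +1/-1 values into one int; bit i is set when values[i] == +1."""
    bits = 0
    for i, v in enumerate(values):
        if v == 1:
            bits |= 1 << i
    return bits


def binary_dot(a_bits, b_bits, n):
    """Dot product of two packed +1/-1 vectors of length n."""
    mask = (1 << n) - 1
    # XNOR: a bit is 1 wherever the two vectors agree (product is +1).
    agree = ~(a_bits ^ b_bits) & mask
    matches = bin(agree).count("1")
    # Each agreement contributes +1 and each disagreement -1.
    return 2 * matches - n


a = [1, -1, 1, 1, -1, -1, 1, -1]
b = [1, 1, -1, 1, -1, 1, 1, -1]

packed = binary_dot(pack_signs(a), pack_signs(b), len(a))
reference = sum(x * y for x, y in zip(a, b))
print(packed, reference)
```

The eight values fit in a single byte here, versus 32 bytes as single-precision floats, and the multiply-adds are replaced by bit operations.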

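These are often called half-precision (`half`, `__half`, or `_Float16`, depending on the language; note that C's `short` is a 16-bit integer, not a float), single-precision (`float`), and double-precision (`double`).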
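
As a rough illustration of the trade-off between these three formats, the sketch below uses Python's standard `struct` module, whose `e`, `f`, and `d` format codes are IEEE 754 half, single, and double. The 64-byte line size and the helper names are assumptions for illustration, not something from the slide.

```python
import struct

# struct format codes for the three IEEE 754 floating-point widths.
FORMATS = {"half": "e", "single": "f", "double": "d"}


def bytes_per_value(name):
    """Size in bytes of one value stored in the given format."""
    return struct.calcsize("<" + FORMATS[name])


def values_per_line(name, line_bytes=64):
    """How many values fit in one memory line (64 bytes is an assumed size)."""
    return line_bytes // bytes_per_value(name)


def round_trip(value, name):
    """Store a Python float in the given format and read it back."""
    code = "<" + FORMATS[name]
    return struct.unpack(code, struct.pack(code, value))[0]


for name in FORMATS:
    error = abs(round_trip(0.1, name) - 0.1)
    print(name, bytes_per_value(name), values_per_line(name), error)
```

Half precision packs four times as many values into each line as double precision, and the price is a larger rounding error on a value like 0.1.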

Do different models take different accuracy hits when using lower precision/different floating point formats? If so, does that mean HW will have to support multiple floating point formats to be most efficient or are the gains small enough that using a standardized low precision format is good enough?