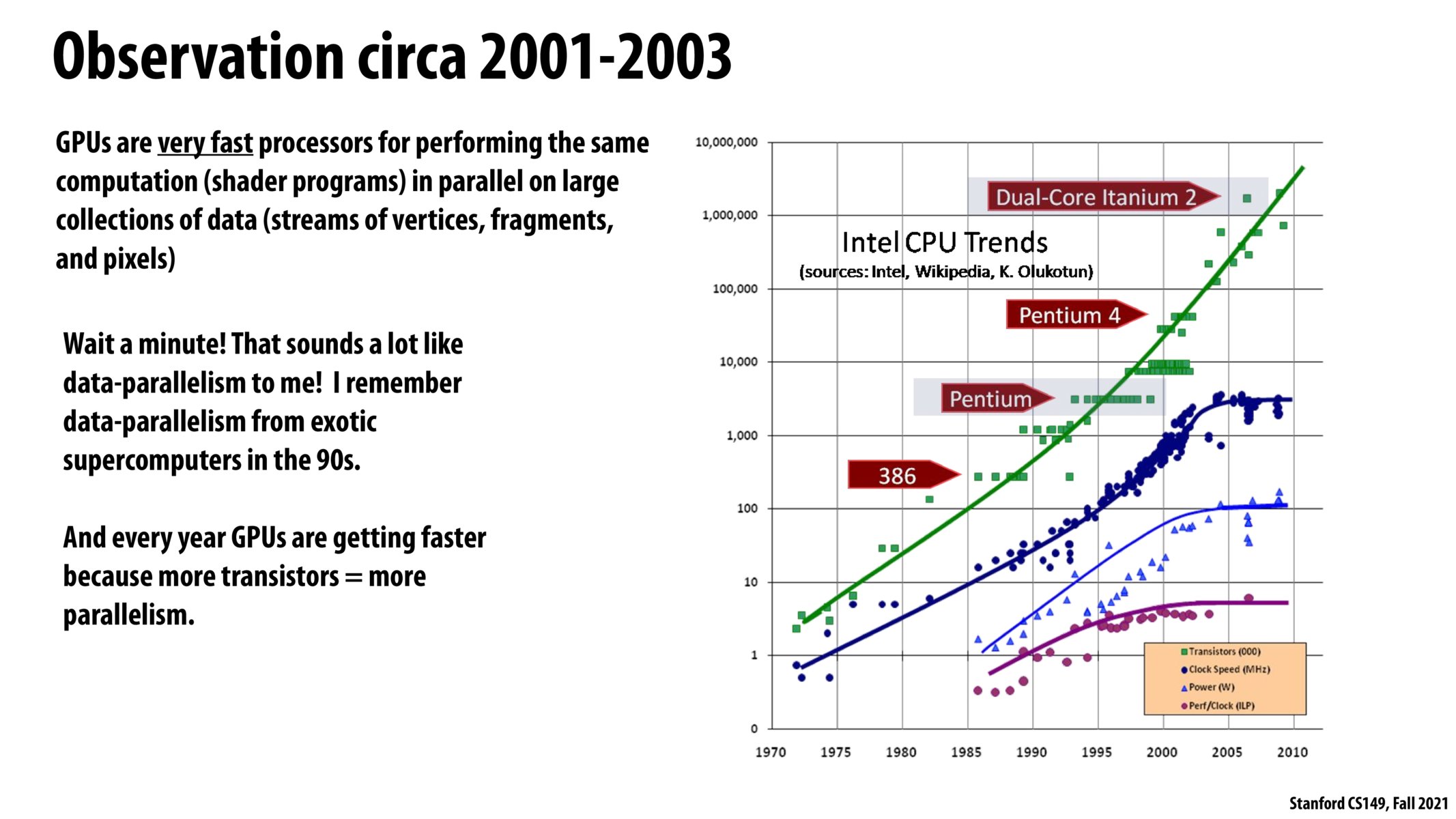

GPUs were able to use the additional transistors to increase parallelism because their workloads scale naturally with more and more parallel compute resources. CPUs running general-purpose software usually can't exploit that many parallel resources unless the workload fits and the software is changed to take advantage of them. At that point the deployment is so custom/specialized that a GPU might be the better fit anyway.

I think it is single-core CPU performance that matches the blue line. The recent trend in CPUs is to add more cores, which also increases the transistor count and adds processing power.

What about performance per dollar? Do we see a similar pattern?

@juliob, adding to @wkorbe's answer: CPUs are specialized for serial programs, while GPUs are specialized for parallel applications. More transistors let you replicate compute resources, which translates fairly directly into faster GPUs. Serial code, on the other hand, is much harder to speed up because it is limited by control dependencies and memory latency. Unfortunately, we have largely saturated our ability to deal with control dependencies (branches and jumps), since branch predictors are already highly accurate. Memory latency is also hard to reduce, since it is dictated by the capabilities of the physical circuits used to implement memory.
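To put rough numbers on that argument, here is a minimal sketch using Amdahl's law, which the thread doesn't mention by name but which captures the same idea. The function name `speedup` and the parallel fractions (0.50 and 0.99) are made-up illustrative values, not measurements of any real CPU or GPU workload.

```python
def speedup(parallel_fraction: float, units: int) -> float:
    """Amdahl's-law speedup when only `parallel_fraction` of the work
    can be spread across `units` identical compute units."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / units)


if __name__ == "__main__":
    # Hypothetical workloads: the fractions are assumptions for illustration.
    workloads = [
        ("mostly serial (CPU-style)", 0.50),
        ("mostly parallel (GPU-style)", 0.99),
    ]
    for name, p in workloads:
        for units in (1, 8, 64, 512):
            print(f"{name:28s} units={units:4d} speedup={speedup(p, units):6.2f}x")
```

With a 50% serial portion the speedup can never reach 2x no matter how many units you add, while the 99%-parallel workload keeps benefiting from extra units for much longer. That is roughly why spending transistors on replicated resources pays off for GPU-style workloads but not for serial code.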


In lecture, it was mentioned that GPUs are on the green line, while CPUs are more like the blue line. Why is this? Why couldn't CPUs continue speeding up like GPUs did? Couldn't they have more transistors too?