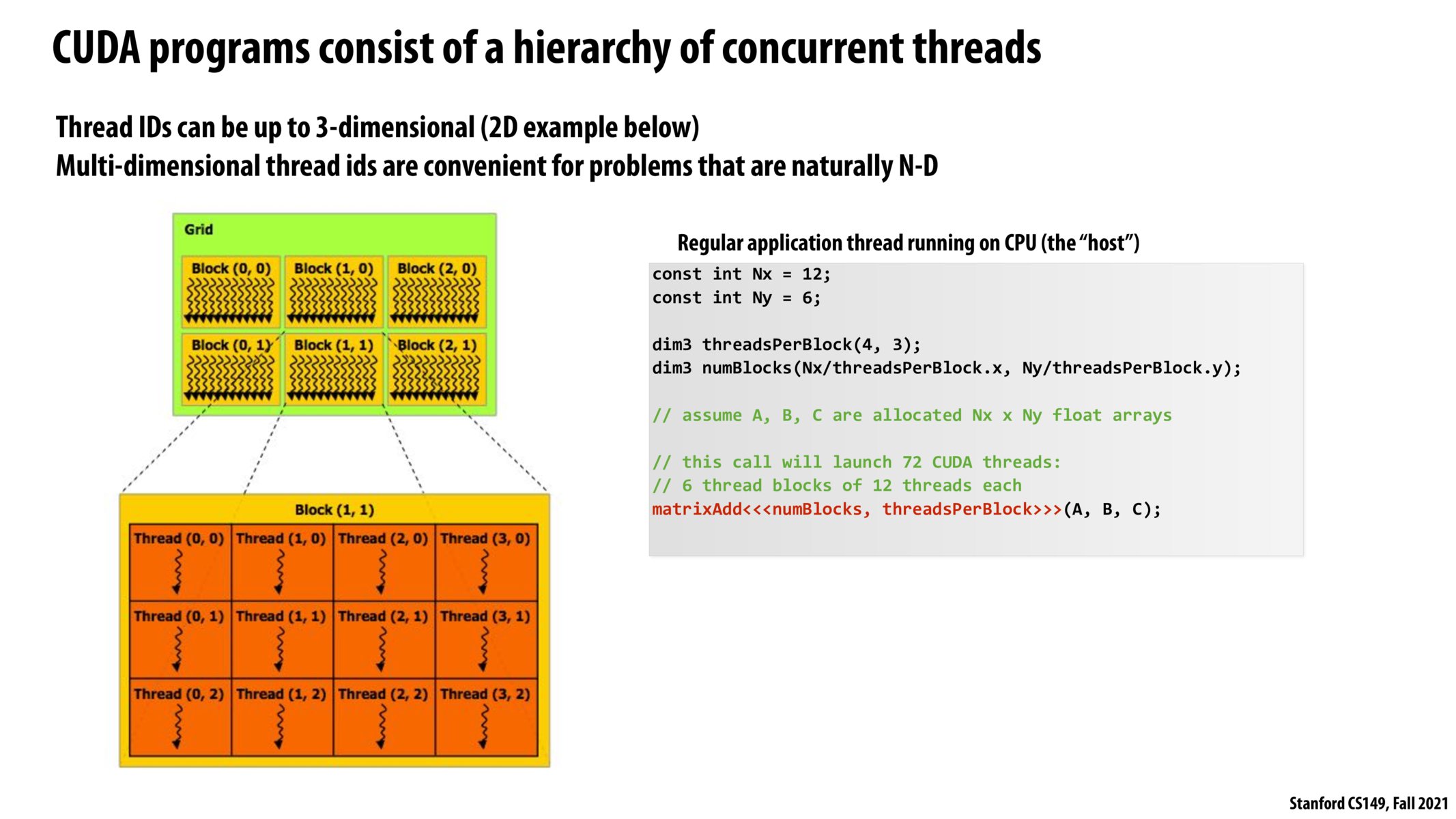

Would numBlocks ideally be the number of cores on the GPU and threadsPerBlock ideally be the number of ALUs/GPU core?

If we were to create a task pool and use thread blocks, would each block take threadsPerBlock amount of tasks each time, or does each thread in the block take tasks independently?

Do we organize the block in matrix format because the operation sent to CUDA is a matrix operation, so the 2D indices offer an easy way to partition the works? Meanwhile, is the thread within the block the CUDA thread? Or is the CUDA thread referring to something different?

I find it difficult to understand how this implementation would be useful outside of graphics or 2d/3d computations. Is CUDA only ever used for those situations?

How did we determine that the optimal arrangement of numBlocks is (3,2). If we used threadsPerBlock(2,3), then numBlocks = (12/2, 6/3) = (6,2) = 6 * 2 = 12 blocks? So how do we design this combination?

I initially found it slightly uncomfortable to think about threads in two dimensions -- mainly just because there's an extra index to keep track of -- but I think it makes sense given the graphics and AI applications that GPUs are often associated with. I wonder what type of hardware support there is for this on the GPU.

Should we be thinking about threads in 2D as one where the thread partition diagram is superimposed over something like an image, which shows the split of work in the image?

What is the reasoning behind creating the block abstraction? Wouldn't just using threads or tasks abstraction just work?

@albystein I think the idea is that it allows you to easily implement the shared block memory abstraction and it helps let the processor know what you want to be executed concurrently which is really useful if blocks are implementing different instruction sets like in the example later in lecture.

Does this idea of GPU thread blocks abstract into higher-dimensional tensors? The way thread blocks were explained in lecture, it seems as though you can really only go as high as 2D. How do 3D or 4D thread blocks work?

I had a hard time grasping this, so just to make sure I understand correctly: The grid is 12x6 and it is divided such that each block will have 12 threads, where each thread works on just one coordinate (x,y)?

@beste I think that's correct. The input grid is 12 x 6, and is divided into numBlocks blocks (so that there are 6 total blocks, arranged in the green grid). Each block has threadsPerBlock threads (so that there are 12 total threads, arranged in the orange grid). Each thread is able to work only one coordinate (x, y) because we have 12 x 6 = 72 elements in the input grid and numBlocks x threadsPerBlock = 6 x 12 = 72 total threads.

At first, I got confused trying to think about what blocks, threads in blocks, etc. are and how they mapped onto hardware - had to stop myself to remember that this slide is about the abstraction that CUDA provides, not the implementation:

- Inputs take the form of some kind of multidimensional arrays

- We specify the number of CUDA threads we want to create, as well as the layout of the work assigned to each thread, using multi-dimensional "blocks" and "threads per block".

- Calling matrixAdd will cause many instances of the matrixAdd CUDA function to be spawned, and those will execute, with some parallelism, on the inputs, before the function call returns.

I also needed to remind myself that "CUDA thread" != the kinds of software threads I'm used to.

I think that's right?

(Separately, Can blocks be N-dimensional too? Will they always have same dimensions as thread IDs?)

So basically Nx/threadblocks.x is the number of columns in the grid and Ny/threadblocks.y gives the number of rows in our grid

@tcr You're definitely correct in thinking of it as an abstraction. A CUDA thread represents a piece of work to be completed (much like an ISPC task), and my understanding is that unlike pthreads which have their own instruction stream, CUDA threads share their instruction stream in blocks. Also, I don't think blocks can be more than 3 dimensional; for a higher-dimensional use-case you can probably just manually map a 1D block into n dimensions. I think the existence of 2D and 3D blocks are just as a helpful abstraction to make implementation easier for very common 2D and 3D use-cases, so the programmer doesn't have to map a 1D block into multiple dimensions every time.

Assuming we wanted to repeat this computation on 10^9 different 12-by-6 matrices, would there be any advantage to this code over more serial computation? I'm not very familiar with CUDA yet but I don't have any intuition one way or another

If we are comparing to ISPC (and this isn't a direct / fully correct analogy). Would threadIdx be the programInstance, blockDim be the programCount and blockIdx be the number of gangs?

For a data input of size N and threadsPerBlock T, is there a reason to not set numBlocks to ceil(N/T)?

Please log in to leave a comment.

On paper I find an N-dimensional work decomposition policy unintuitive, so I will be interested to see how much I enjoy actually programming under this model. I'm sure throughout the assignments I will get more comfortable with this way of thinking.