Just to check my understanding: my takeaway so far is that CUDA is the most widely used language for SPMD programming on GPUs, and that most use cases involve multi-dimensional computations that benefit from its richer indexing (block, thread). During lecture, I learned that ISPC has its own GPU capabilities, and I'm curious why CUDA's functionality or interface prevailed as the more popular approach.

Inside these global functions, is there a limit on how much memory we can use? Also, is it safe to assume that all memory accesses within a global function have the same cost, or is memory on the GPU implemented as a cache hierarchy, similar to a CPU?

@ecb11. CUDA actually came first. In fact, it was the "ease" of getting the performance benefits of SIMD execution on GPUs via SPMD programming in CUDA that motivated the creation of ISPC.

I highly recommend this series of blog posts. https://pharr.org/matt/blog/2018/04/18/ispc-origins

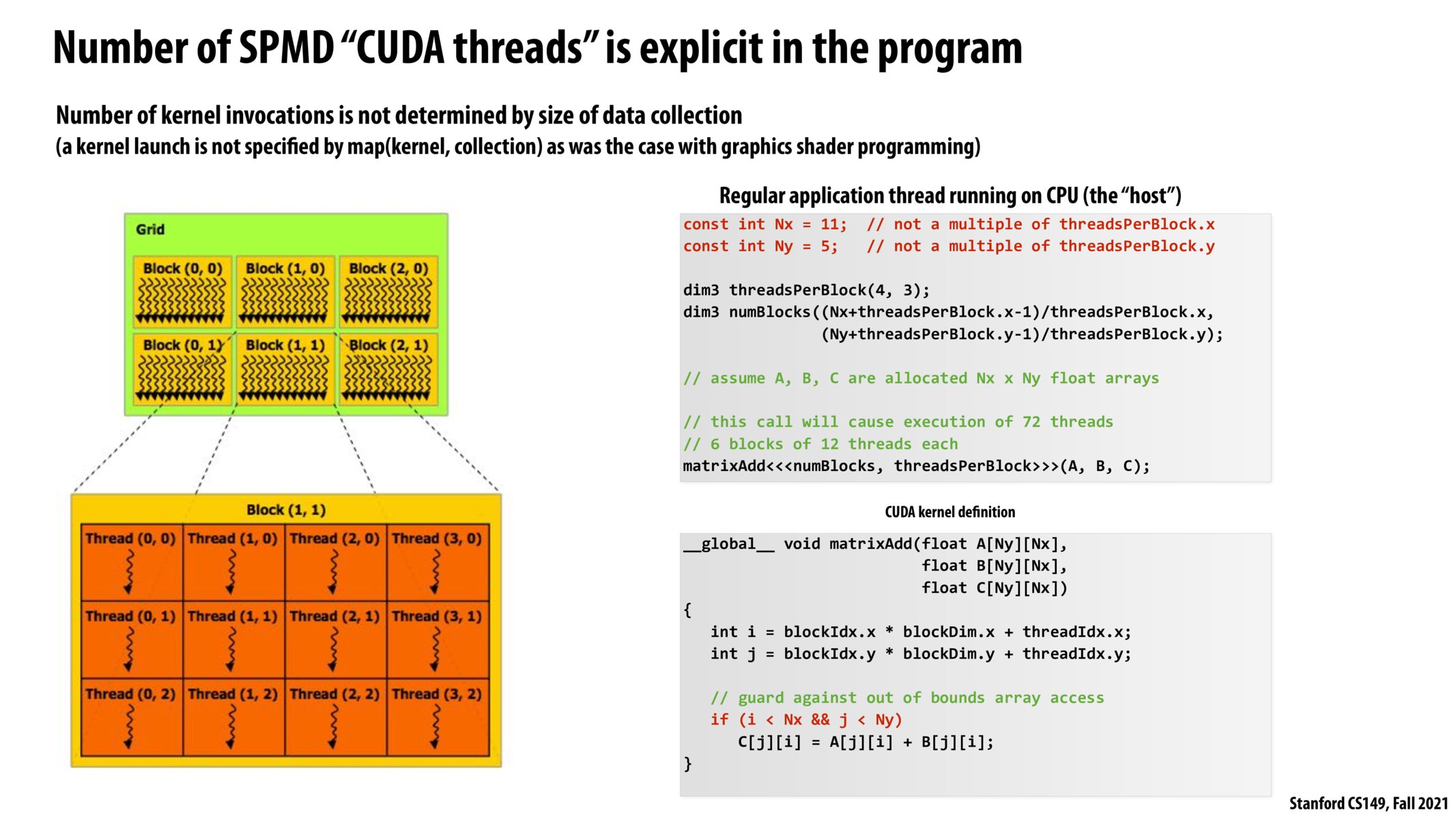

`if (i < Nx && j < Ny)` gates the code to prevent the program from going out of bounds. As a result, the threads whose `i` or `j` falls outside the array will not do any useful work.
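To make the effect of the guard concrete, here is a minimal host-side C++ sketch that emulates a 2D launch by looping over blocks and threads. It is not CUDA and not the code from the slide; the function name `emulate_guarded_launch` and the `LaunchStats` struct are hypothetical, and the sizes used in the usage note (an 11 x 5 array with 4 x 3 blocks) are assumptions chosen to match the 55 elements, 12-thread blocks, and 72 launched threads mentioned elsewhere in this thread.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical summary of one emulated launch.
struct LaunchStats {
    int launched;                    // total threads in the grid
    int active;                      // threads that pass the bounds guard
    bool each_element_written_once;  // every element touched exactly once
};

// Emulates a 2D grid on the host: loops stand in for blocks and threads.
LaunchStats emulate_guarded_launch(int Nx, int Ny, int block_x, int block_y) {
    assert(Nx > 0 && Ny > 0 && block_x > 0 && block_y > 0);

    // Round the grid up so every element is covered by some thread.
    int grid_x = (Nx + block_x - 1) / block_x;
    int grid_y = (Ny + block_y - 1) / block_y;

    std::vector<int> writes(static_cast<std::size_t>(Nx) * Ny, 0);
    LaunchStats stats{0, 0, true};

    for (int by = 0; by < grid_y; ++by)
        for (int bx = 0; bx < grid_x; ++bx)
            for (int ty = 0; ty < block_y; ++ty)
                for (int tx = 0; tx < block_x; ++tx) {
                    // Same index math a kernel would do with
                    // blockIdx * blockDim + threadIdx.
                    int i = bx * block_x + tx;
                    int j = by * block_y + ty;
                    ++stats.launched;
                    if (i < Nx && j < Ny) {  // the bounds guard
                        ++writes[static_cast<std::size_t>(j * Nx + i)];
                        ++stats.active;
                    }
                }

    for (int w : writes)
        if (w != 1) stats.each_element_written_once = false;
    return stats;
}
```

Calling `emulate_guarded_launch(11, 5, 4, 3)` under these assumptions reports 72 launched threads, 55 of which pass the guard, with every element written exactly once. Without the guard, the remaining threads would index past the end of the array.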

Here it was emphasized that SPMD "CUDA threads" are an abstraction, whereas SIMD is an implementation. Could we therefore think of one block as a gang of 12 program instances?

Notice that in this code example, we are launching 72 - 55 = 17 CUDA threads that are not being used. If we think about CUDA threads as instances of an ISPC gang, this would be the equivalent of creating a gang larger than the amount of work we have. How significant is the cost of these unused CUDA threads? Are there workarounds, or is this a tradeoff of CUDA's N-dimensional block implementation?
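One way to get a feel for how significant the idle threads are is to compute the idle share in closed form. The sketch below is plain host-side C++ arithmetic, not CUDA; `ceil_div`, `Utilization`, and `launch_utilization` are hypothetical names, and the 11 x 5 problem with 4 x 3 blocks is an assumption chosen to reproduce the 72 and 55 above.

```cpp
#include <cassert>

// Integer division rounded up, as used when sizing a grid.
long long ceil_div(long long a, long long b) {
    assert(b > 0);
    return (a + b - 1) / b;
}

// Hypothetical summary of how many launched threads do useful work.
struct Utilization {
    long long launched;
    long long useful;
    long long idle;
    double idle_fraction;
};

Utilization launch_utilization(long long Nx, long long Ny,
                               long long block_x, long long block_y) {
    assert(Nx > 0 && Ny > 0);
    // Each dimension is padded up to a whole number of blocks.
    long long padded_x = ceil_div(Nx, block_x) * block_x;
    long long padded_y = ceil_div(Ny, block_y) * block_y;
    long long launched = padded_x * padded_y;
    long long useful = Nx * Ny;
    long long idle = launched - useful;
    return Utilization{launched, useful, idle,
                       static_cast<double>(idle) / static_cast<double>(launched)};
}
```

With these assumed sizes, `launch_utilization(11, 5, 4, 3)` gives 17 idle threads out of 72, while `launch_utilization(1100, 500, 4, 3)` gives 1100 idle out of 551100. The padding only appears in the last row and column of blocks, so its share shrinks as the problem grows. This sketch counts threads only and ignores how idle threads are scheduled in hardware.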

@noelma I think so: in the abstraction you are describing, a block is a gang of program instances, which CUDA calls threads.

Just for conceptual understanding: why don't we replace CPUs with GPUs?

I think it's because GPUs are only faster for certain kinds of work. CPUs have many specialized units and a lot of overhead to support every instruction a computer could need. Generally, a CPU supports many more instructions than a GPU and can do anything a GPU does, but it pays for that with much slower execution on computationally intensive applications (ML, image processing, etc.). GPUs, on the other hand, are specialized for intense computation and allow far more concurrent computations than CPUs, but they have a smaller instruction set as a result and can't do everything a CPU does.

How do warps and blocks relate? My understanding is that we have a core that runs x threads in x/block_size blocks, and within each block there are x/block_size/32 warps. But how do these warps communicate with each other within the block, if at all? And are all these warps and blocks sharing execution contexts? At what level (warp, block, core) do the contexts live so that they can be shared?
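As a rough sketch of the arithmetic behind this question: on NVIDIA GPUs a warp is 32 threads, and the threads of a block are split into warps in order of their linearized thread ID, so the number of warps in a block depends only on the block size. The helper names below (`warps_per_block`, `warp_of_thread`, `lane_of_thread`, `blocks_for`) are hypothetical, and this is host-side C++ for illustration, not a CUDA API. Warps in the same block can exchange data through shared memory and synchronize with `__syncthreads()`; this sketch does not model that.

```cpp
#include <cassert>

constexpr int kWarpSize = 32;  // warp size on NVIDIA GPUs

// Blocks needed to cover total_threads, rounding up.
int blocks_for(int total_threads, int threads_per_block) {
    assert(total_threads > 0 && threads_per_block > 0);
    return (total_threads + threads_per_block - 1) / threads_per_block;
}

// Warps in one block; a partially filled last warp still counts as a warp.
int warps_per_block(int threads_per_block) {
    assert(threads_per_block > 0);
    return (threads_per_block + kWarpSize - 1) / kWarpSize;
}

// Which warp of its block a thread belongs to, given its linearized ID
// within the block (threadIdx.x for a 1D block).
int warp_of_thread(int linear_thread_id) {
    assert(linear_thread_id >= 0);
    return linear_thread_id / kWarpSize;
}

// Position of the thread inside its warp (its SIMD lane).
int lane_of_thread(int linear_thread_id) {
    assert(linear_thread_id >= 0);
    return linear_thread_id % kWarpSize;
}
```

For example, a 128-thread block has `warps_per_block(128) == 4` warps, and thread 40 of that block sits in warp 1 at lane 8. A 12-thread block still occupies one full warp, with most lanes unused.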

Are there optimization decisions that can be made by developers to shape blocks so that there are few calls to out-of-bounds coordinates, or does it not really matter?
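One way to explore this is to compare candidate block shapes by how many out-of-bounds threads each would launch. The following is a minimal host-side C++ sketch; `BlockShape`, `idle_threads`, and `least_idle_shape` are hypothetical names, and the 11 x 5 problem size in the usage note is an assumed example.

```cpp
#include <cassert>
#include <vector>

// Hypothetical 2D block shape (threads per block in x and y).
struct BlockShape {
    int x;
    int y;
};

// Threads launched beyond the Nx * Ny elements for a given block shape.
long long idle_threads(int Nx, int Ny, BlockShape b) {
    assert(Nx > 0 && Ny > 0 && b.x > 0 && b.y > 0);
    // Pad each dimension up to a whole number of blocks.
    long long padded_x = static_cast<long long>((Nx + b.x - 1) / b.x) * b.x;
    long long padded_y = static_cast<long long>((Ny + b.y - 1) / b.y) * b.y;
    return padded_x * padded_y - static_cast<long long>(Nx) * Ny;
}

// Returns the candidate with the fewest idle threads (first one wins ties).
BlockShape least_idle_shape(int Nx, int Ny,
                            const std::vector<BlockShape>& candidates) {
    assert(!candidates.empty());
    BlockShape best = candidates.front();
    for (const BlockShape& c : candidates)
        if (idle_threads(Nx, Ny, c) < idle_threads(Nx, Ny, best)) best = c;
    return best;
}
```

For an 11 x 5 problem and 12-thread candidates {4, 3}, {12, 1}, {6, 2}, and {3, 4}, the idle counts are 17, 5, 17, and 41, so `least_idle_shape` picks {12, 1}. Counting idle threads is only one factor; real choices also weigh memory access patterns and the 32-thread warp granularity, which this sketch ignores.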