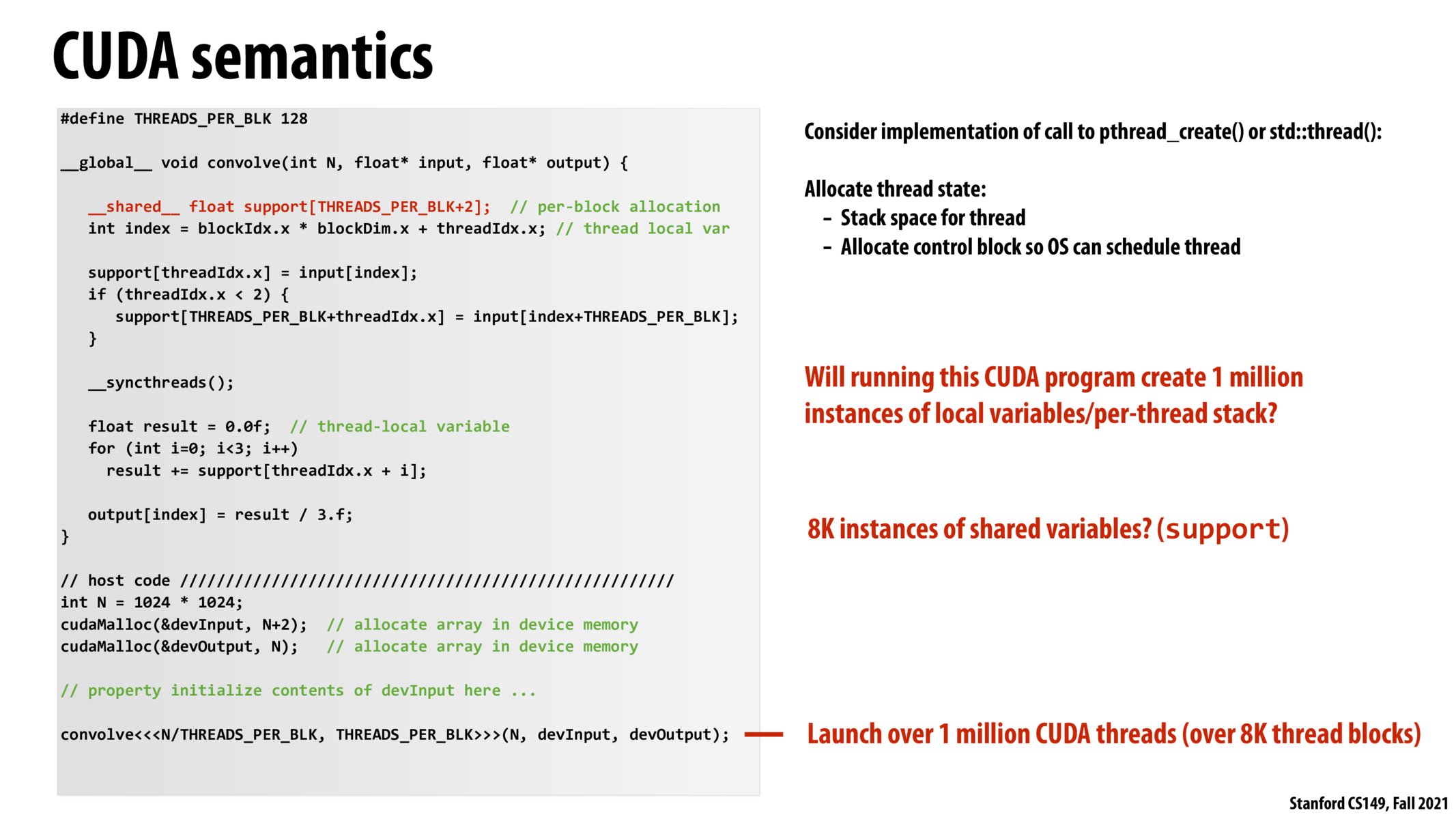

I think it was mentioned in the lecture that we are only interested in the one dimension aspect for this example. I guess if we were doing a 2-D convolution, we would have block dimensions.

What's the cost of such per-block shared memory - are they usually more expensive in terms of hardware as compared to main memory, and what are the typical limits for such memory (is it in the order MBs or GBs)?

@laimagineCS149 If you're talking about the cost in program time, shared memory should be less expensive. Assuming your program is written well, threads in the same block should mostly access the same variables and thus have fewer cache misses at the block level. In terms of monetary cost, I'm not sure how it factors in. I think generally L1 and L2 cache sizes are on the KB and MB scale, though you could definitely google this for the specific GPU you're using before writing code if that's a concern.

@juliewang yep if we don't specify with a dim3, I think it is assumed to be one-dimensional

Please log in to leave a comment.

Why do we not specify the thread block dimensions here? It seems like we just say that there are 8k blocks, each with 128 threads. Do we assume this means the blocks are 128x1?