Does modern graphics still follow roughly the same paradigm as the slide above for turning triangle meshes into images? Or are there any new ways of doing graphics on the horizon?

This discussion takes me back to my childhood, when a video game would glitch and you'd see the backs of a bunch of polygons. I'd wonder how a virtual world that felt so solid from the inside was really just a set of well-connected but infinitely thin 2D surfaces.

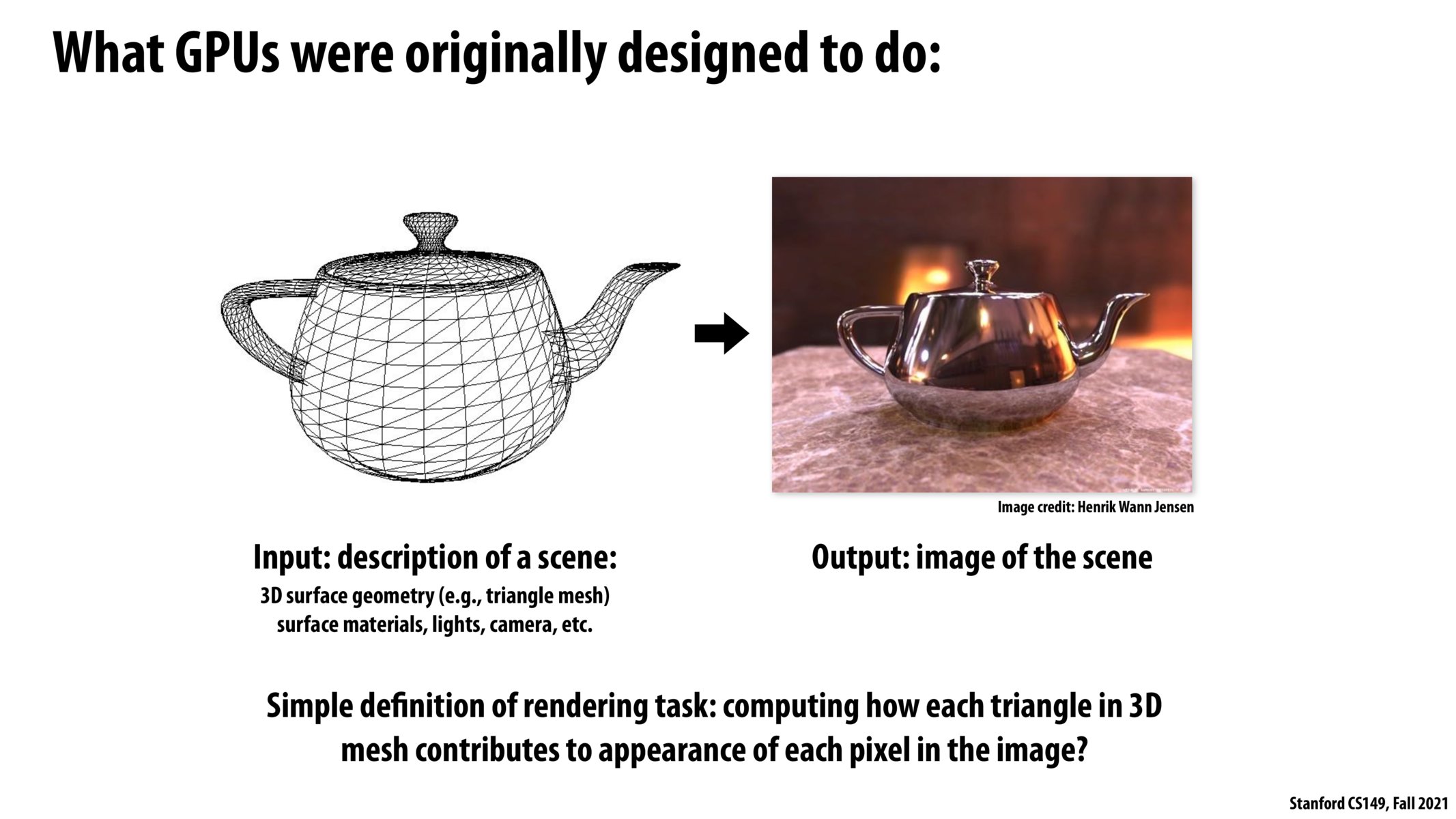

Some additional information from lecture regarding the motivation for GPUs:

In the early 2000s, GPUs were originally designed for graphics computation, such as rendering. One example of such a workload is as follows:

- Given a triangle and the position of a virtual camera, determine where the triangle lies on screen
- For all pixels covered by the triangle, compute the color of those pixels
- More generally, given properties of a pixel, determine its color
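
A minimal Python sketch of the first two steps, assuming a pinhole camera at the origin looking down +z, a tiny 8×8 image, and coverage tested at pixel centers with edge functions. The names `project`, `edge`, and `covered_pixels` are hypothetical and not from the lecture; real GPUs do this in fixed-function hardware with many more details (clipping, depth, fill rules).

```python
def project(vertex, focal=1.0, width=8, height=8):
    """Pinhole projection (assumed convention: camera at origin, looking down +z)."""
    x, y, z = vertex
    # Perspective divide: points farther away land closer to the image center.
    ndc_x = focal * x / z
    ndc_y = focal * y / z
    # Map normalized coords in [-1, 1] to pixel coords; flip y so row 0 is the top.
    px = (ndc_x + 1.0) * 0.5 * width
    py = (1.0 - ndc_y) * 0.5 * height
    return px, py


def edge(a, b, p):
    """Signed-area test: its sign says which side of edge a->b the point p is on."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def covered_pixels(triangle, width=8, height=8):
    """Return (col, row) of every pixel whose center lies inside the projected triangle."""
    v0, v1, v2 = (project(v, width=width, height=height) for v in triangle)
    pixels = []
    for row in range(height):
        for col in range(width):
            p = (col + 0.5, row + 0.5)  # sample at the pixel center
            w0, w1, w2 = edge(v1, v2, p), edge(v2, v0, p), edge(v0, v1, p)
            # Inside if all three edge tests agree in sign (works for either winding order).
            if (w0 >= 0 and w1 >= 0 and w2 >= 0) or (w0 <= 0 and w1 <= 0 and w2 <= 0):
                pixels.append((col, row))
    return pixels


triangle = [(-1.0, -1.0, 2.0), (1.0, -1.0, 2.0), (0.0, 1.0, 2.0)]
pixels = covered_pixels(triangle)
```

The per-pixel loop is the part that parallelizes naturally: each pixel's coverage test is independent of every other pixel's.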

GPUs have so many parallel processing elements because they were originally designed to evaluate a user-defined function for pixel coloring at every pixel in the image. Many SIMD-enabled multi-threaded cores provide efficient execution of such shader programs.
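
To make the "user-defined function at every pixel" idea concrete, here is a hedged Python sketch. The shader `gradient_shader` and the helpers `render` and `render_parallel` are hypothetical names for illustration. The key property is that each shader call depends only on its own pixel's inputs, so the calls can run in any order or all at once. Python threads will not actually speed this up (the GIL), so the parallel version only demonstrates that the result does not depend on execution order; a GPU exploits the same independence across thousands of SIMD lanes.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Tuple

Color = Tuple[float, float, float]


def gradient_shader(x: float, y: float) -> Color:
    """Hypothetical shader: the color depends only on this pixel's normalized coordinates."""
    return (x, y, 0.5)


def render(shader: Callable[[float, float], Color], width: int, height: int) -> List[List[Color]]:
    """Evaluate the shader once per pixel, sequentially."""
    return [
        [shader((col + 0.5) / width, (row + 0.5) / height) for col in range(width)]
        for row in range(height)
    ]


def render_parallel(shader: Callable[[float, float], Color], width: int, height: int,
                    workers: int = 4) -> List[List[Color]]:
    """Same work, dispatched to a thread pool; pool.map keeps results in input order."""
    coords = [((col + 0.5) / width, (row + 0.5) / height)
              for row in range(height) for col in range(width)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        flat = list(pool.map(lambda c: shader(*c), coords))
    # Reshape the flat list back into rows.
    return [flat[r * width:(r + 1) * width] for r in range(height)]


image = render(gradient_shader, 4, 2)
image_parallel = render_parallel(gradient_shader, 4, 2)
```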


I think it was in CS148 that I used Blender to do ray tracing and render some cool things. Rendering a scene of our choice required shaders, textures, cameras, light angles, 3D meshes, etc. Now I understand how much compute power all the matrix calculations need, since "painting" the vertices is the algorithm used to give color and shape to the 3D meshes, and we ran these on NVIDIA GPUs. During that course I hardly understood what a GPU does; now I understand much better.