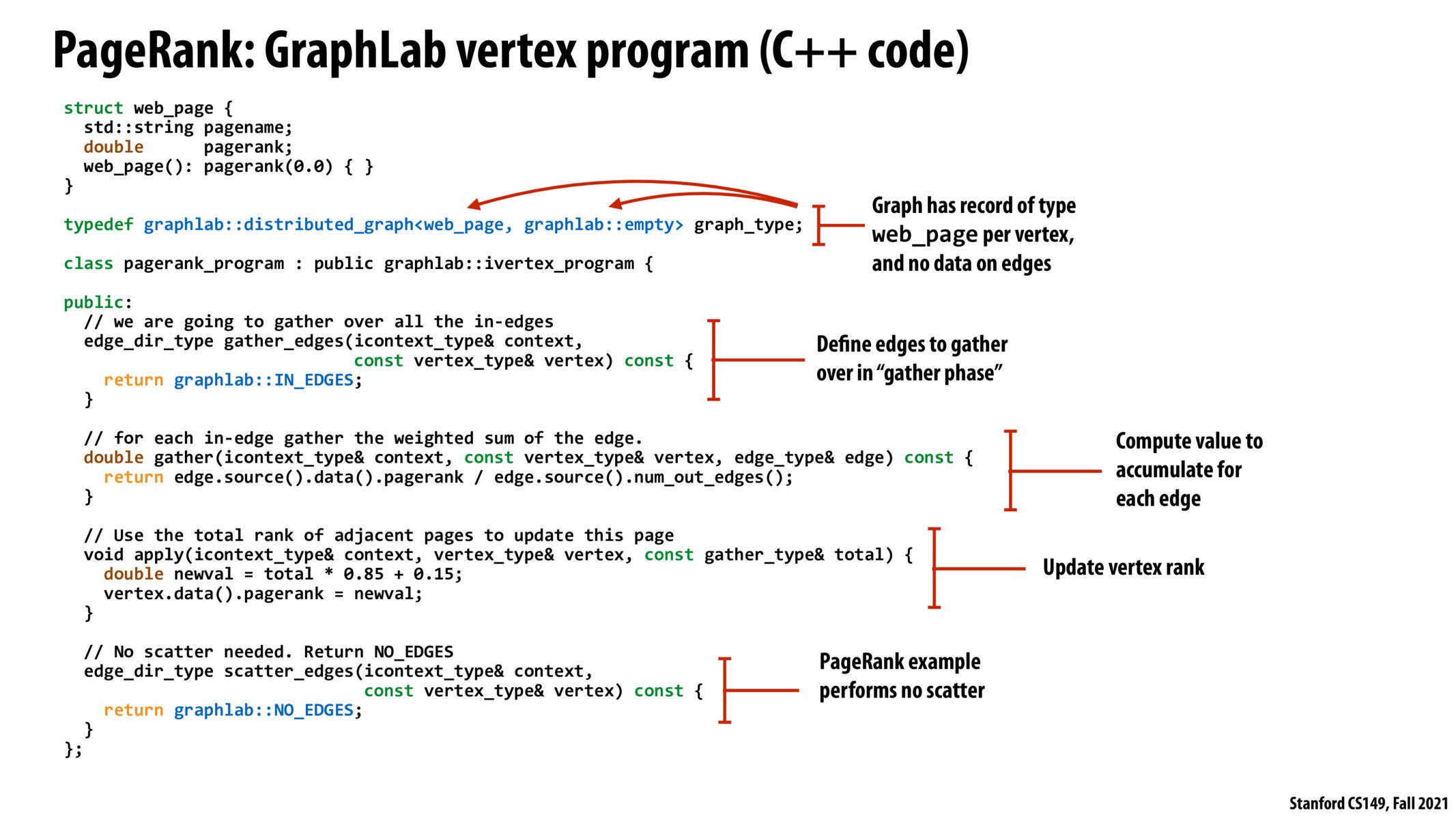

Yes, I believe that's the case. It's very similar to implementing a graph neural network in using PyTorch Geometric (though this is probably copied from the former).

Is there a reason for double newval = total * 0.85 + 0.15 and not double newval = total * 0.85 + (0.15 / num_graph_vertices()) (or whatever the actual syntax is)? Do we somehow not need to divide the additive damping constant by the number of nodes in the graph because of the summative powers of apply, or is this a bug?

It's worth observing how the values returned by gather are "summed" per vertex. This may seem like a highly restrictive operation, since many graph algorithms don't quite use a summation across the edges [even though PageRank happens to do so]. However, since you can do operator overloading in C++, you can define a custom type per vertex: instead of "double", you can define your own class/struct and define a custom "+" operator. As long as your operator is associative and commutative, I believe GraphLab [and certainly many of its successors] will work just fine. Notice that a "set merge" is / can be associative and commutative and hence you can accumulate all the information from the edges, and then apply a more complex operation in "apply". This might result in unnecessary bloat, however, depending on the algorithm and system implementation. (Because of this, such DSLs can sometimes result in work-inefficient versions of the algorithms relevant to some applications.)

This feels like a lot of boilerplate for a language that is supposed to improve development time. I would if there are ways to make it less verbose.

Also, what other ways would you use gather_edges? I'm struggling to see how this is generalized enough to be useful (granted I'm not well versed in graph algorithms).

I think Kayvon mentioned that graph algorithms are generally bandwidth-bound, as we don't do a whole lot of computation on any particular set of nodes in the graph. How does GraphLab get by this issue? Is it just exploiting locality as much as possible by reusing variables / fusing operations? Or is it using some really clever graph representations as well?

Also @jiaju I'm curious if a lot of the graph operations offered in PyG are built on top of GraphLab.

Interesting... @matteosantamaria that's exactly what I thought... Wonder if there's a better way to design a DSL that offers a more flexible paradigm...

My understanding is that graphlab provides you with these four methods (gather_edges, gather, apply, and scatter_edges), and then takes care of the under-the-hood storing of your graph vertices and edges, abstracting that away from the user. If you wanted to use a different technique for storing a graph - say if you had certain nodes and edges that were more important to you and accessed way more for a custom algorithm, would that mean you wouldn't be able to use graphlab?

I'm pretty curious about how graphlab generalizes to more specific use-cases with a lot more sparsity. For example, what if we only care about a very small fraction of in-edges for gather_edges?

Please log in to leave a comment.

I'd appreciate if someone could check my understanding:

The GraphLab interface defines these four methods (gather_edges, gather, apply, and scatter_edges), and it is the responsibility of the programmer to implement them. The GraphLab runtime will then take care of invoking these methods.