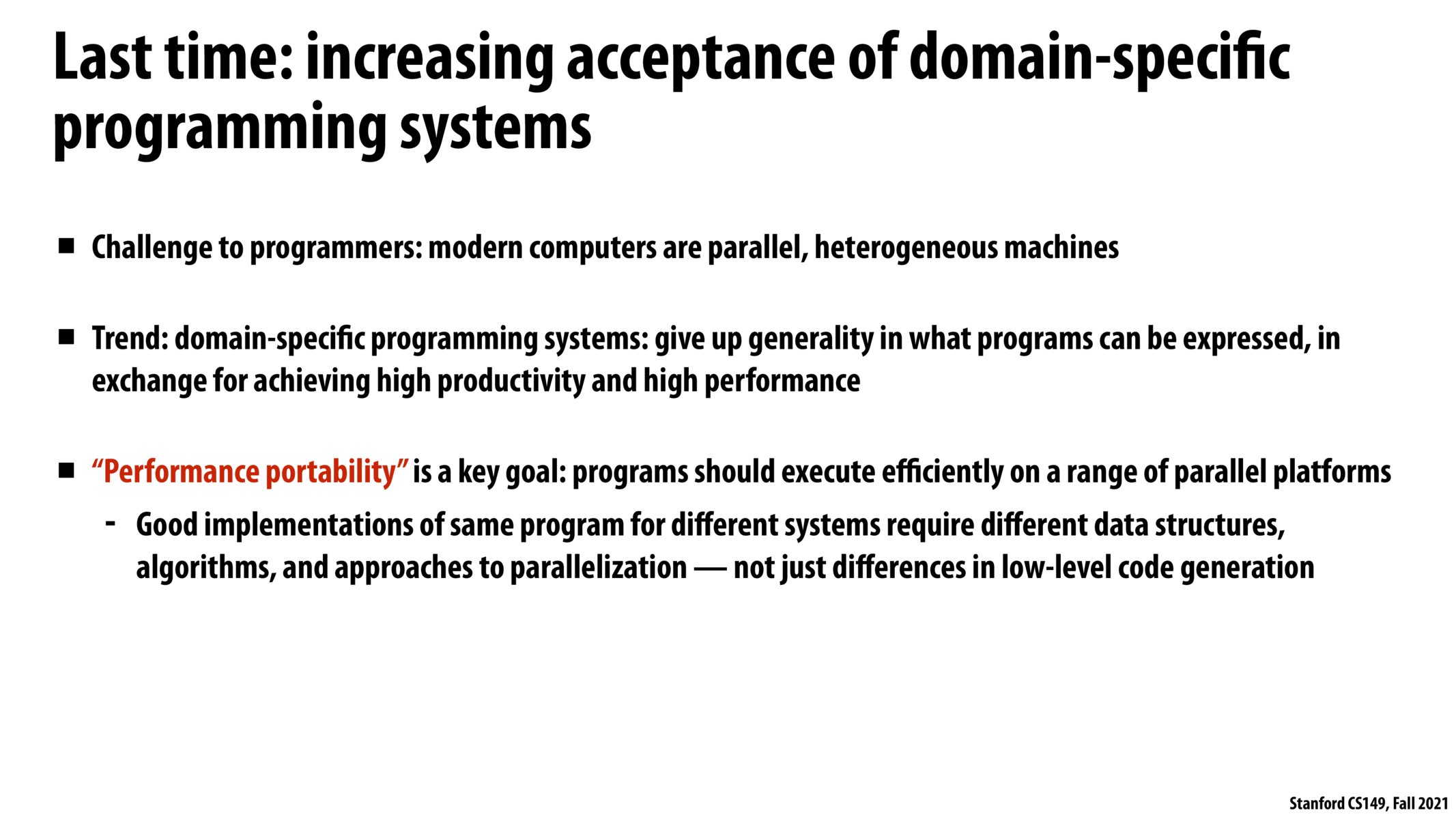

An educated guess, this may refer to achieving good performance on both SIMD and SPMD or for devices with different cache transactional memory implementations. Especially with the last one, the difference between lazy optimistic and eager pessimistic might have a bigger impact than just changing say the order of the atomic regions. There are probably much better examples but the overall idea is that different approaches to parallelism may require big differences when figuring out how to run these programs.

@tigerpanda you are correct that the implementation of the same program may be different on different systems - a simple example would be a matrix multiplication implemented in CUDA (https://docs.nvidia.com/cuda/cuda-c-programming-guide/index.html#shared-memory) vs a CPU only implementation. The multiplication can be sped up significantly by using shared memory which is a hardware specific feature. Even something as simple as a matrix transpose can look quite different from parallel CPU code when you leverage shared memory - https://developer.nvidia.com/blog/efficient-matrix-transpose-cuda-cc/

Sure you can have a naive translation from parallel CPU code to GPU code but it will likely be suboptimal if you don't leverage GPU specific features.

To add on to this discussion about performance portability, the first thing that came to mind was the fine tuning we had to do on myth vs aws for assignment 4 and also just the sheer difference in runtime we get for both assignment 3 and 4 when we moved it to aws. If we were to solve the circle rendering problem for just myth, we definitely wouldn't have used cuda - we were only able to do that because we had GPUs. Likewise, for something like parallelizing BFS, our solution was optimized for 32 threads, not 8.

Please log in to leave a comment.

why is this last statement true, that good implementation of the same program for different systems require different data structures, algorithms and approaches to parallelization? Is this just an assumption that in order to maximize efficiency on different systems, the implementation of the same program may be different?