Is there a good way to know which cases or operations will be the most important? Can we know which optimizations are likely to give the best results for the vast majority of programs? Of course, the answer to this will be highly domain-specific.

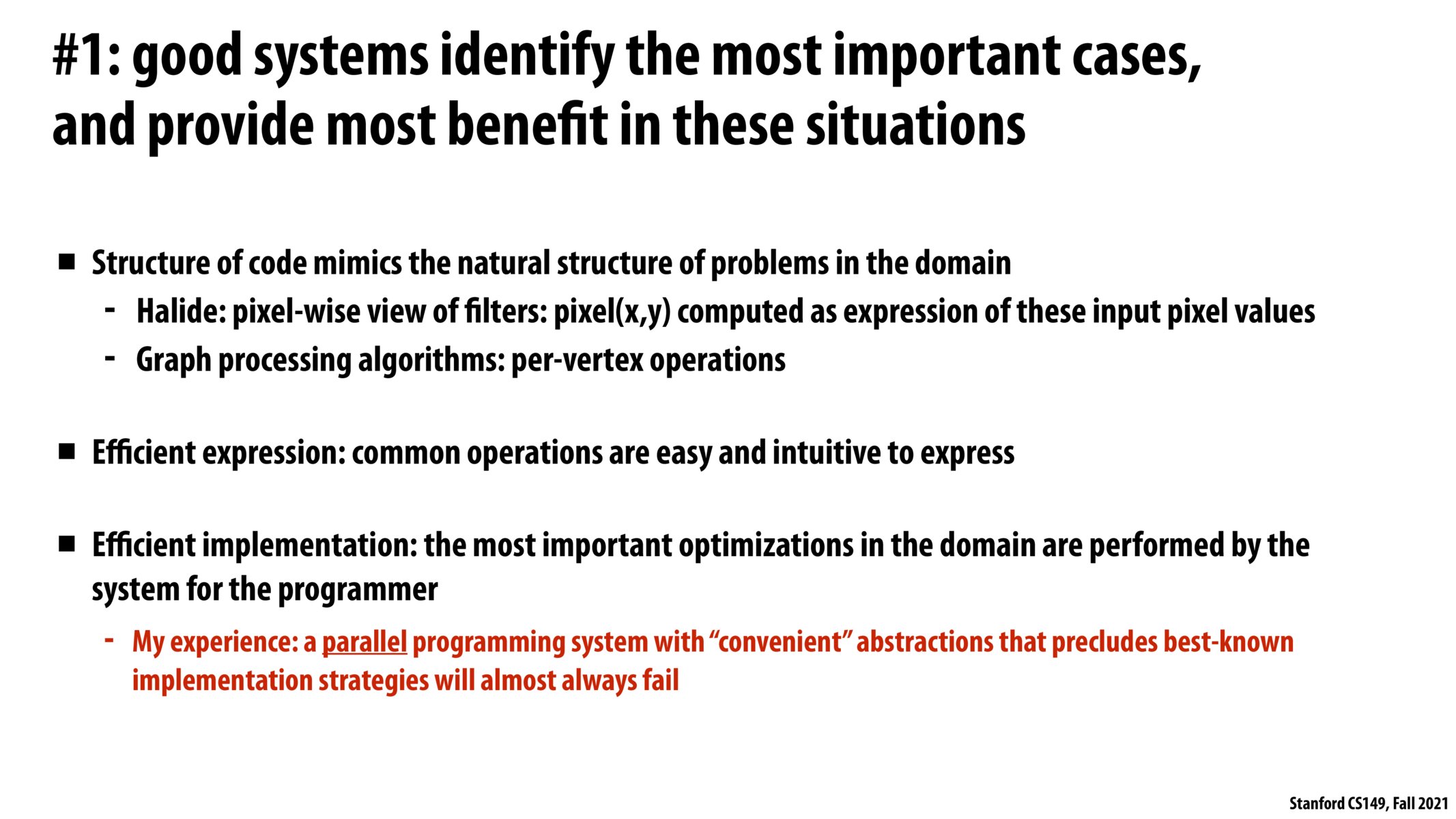

I really liked the summary of key points on how to design a good system. This probably requires lots of iteration and refinement (the example Kayvon gave in class was TensorFlow, where ML practitioners came up with new use cases and hence needed more abstractions). I wonder what the last point means, though: what is meant by precluding best-known implementation strategies?

Does the red sentence mean that a parallel programming system that uses less-optimal implementation strategies will always fail? If so, why is that the case? Is it not possible to sacrifice some implementation efficiency in exchange for easier parallelism?

What are some examples of “convenient” abstractions that will almost always fail?