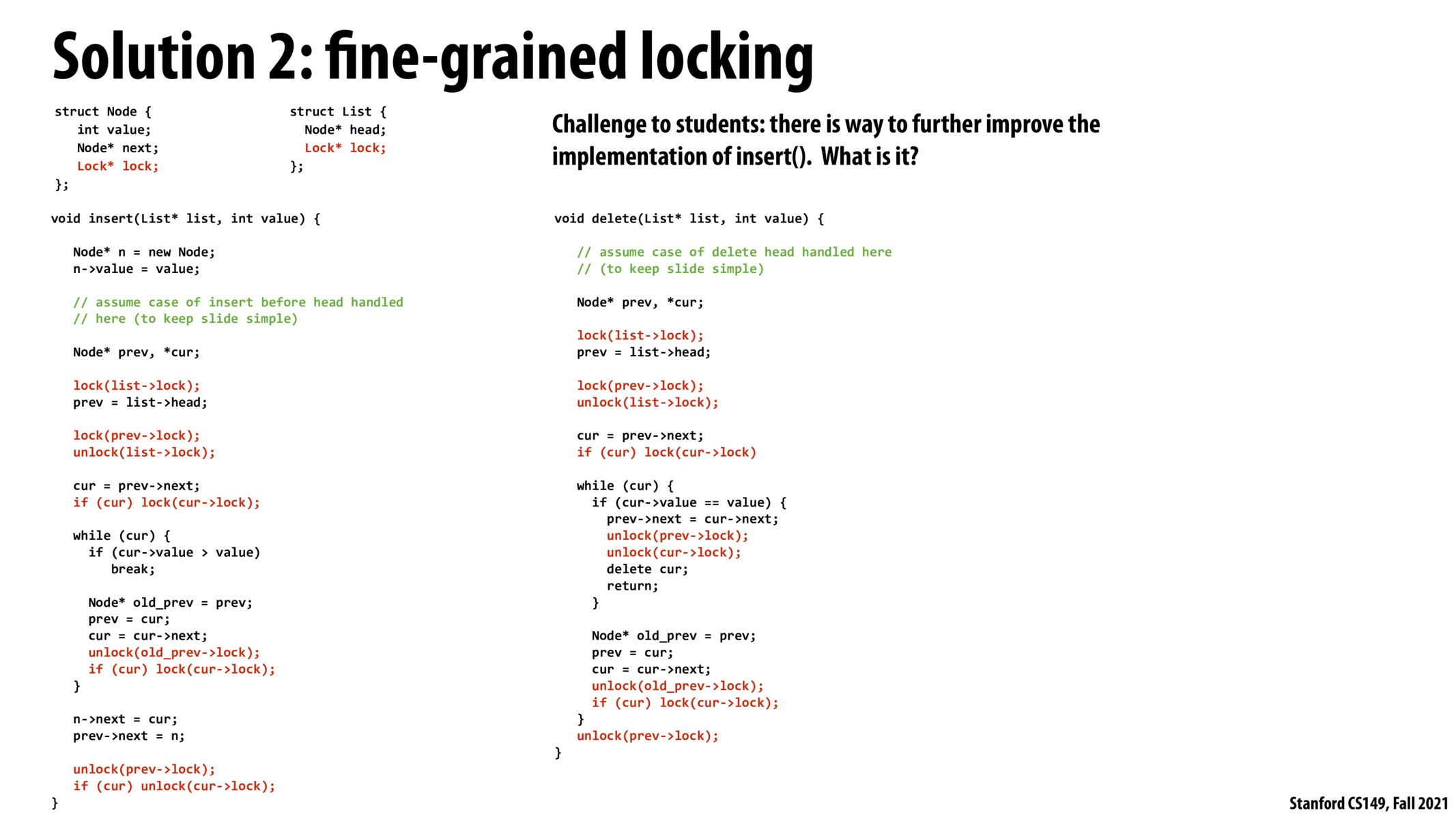

For insert(), why do we need locks for both pre and cur? What will be wrong if we only lock prev ptr?

I'm a bit confused why the entire list still needs a lock in this case - does the fine-grained lock on the first element to set prev not suffice?

I have the same question as @12345. It seems that for insertion, you're the only one who knows about the node you're going to insert (in some sense it is locked because nobody else can access it until you insert it), and nobody can (simultaneously) delete the node that comes after the one you are inserting because in order to perform that deletion you need to lock on the node that comes before it. However, in the insertion case, you are already holding the lock on that node. Is this thinking correct?

@juliob My interpretation is that we need to acquire acquire lock on the entire list first is that we want to guarantee that list->head is up to date as we might change the list head during deletion.

I also have the same question about why is the locking for cur necessary for insert(). It seems that locking prev is sufficient for insert, as we lock both prev/cur in delete() and is not able to delete cur if prev is locked during insert.

@xiyan @12345 I think this might be an optimization to improve the implementation of insert().

Consider 1 -> 2 -> 4 -> 5 -> 6

Insert is trying to insert 3. It locks 2 and sets 2->next to 3, and 3->next to 4.

No other inserts can get to 2, because the first insert has a lock on 2.

There cant be a delete on 4, because delete would need a lock on 2. If there is a delete on 5, then delete holds the lock on 4 and 5. It would be changing 4->next to point to 6. This is totally fine, as insert is just changing the pointer that points to 4, and no details on 4 itself.

I /think/ the optimization to insert is to hold the lock of prev without holding the lock of cur; the pseudocode will be like

``` lock (list.lock); prev = list.head;

lock(prev.lock);

if (prev && prev->val > new_node->val) { new_node->next = prev; list.head = new_node;

unlock(prev.lock); unlock(list.lock); return; }

unlock(list.lock);

while(prev->next) { // prev.lock is held when program executes this line // since prev is locked, prev->next cannot be updated if (prev->next->val > new_val) { break; }

// Note in order to acquire the lock of a node, the program must hold the lock // if its previous lock. lock(prev->next->lock); unlock(prev->lock); prev = prev->next; }

// prev->next->val > new_val || arrives at the end of the list

// when program arrives this line, prev->lock is held by current thread

if (prev->next) {

// no other threads could hold the lock of prev-next because prev->lock is held

// by current thread

prev->next = new_node;

new_node->next = prev->next;

} else {

// append new_node at the tail.

prev->next = new_node;

}

```

I'm wondering if the same optimization could be applied to delete as well.

Basically, the prerequisite is that, the val and lock field of a Node is immutable, so by holding a lock, we are essentially getting exclusive access to update the next of a node.

If the above statement makes sense, then delete method could lazily acquire the lock of cur (i.e., only until when it's going to be deleted, or become the current pointer), as opposed to eagerly acquire the lock of cur while holding the lock of prev.

Minglotus - I agree that I don't know if it's necessary to lock cur while traversing the list in delete (before deleting a node). May be enough to just lock one node at a time during traversal - except, importantly, for momentarily when you're "passing off" the locking, to make sure that a thread is always holding at least one lock.

I.e., it's not necessary to hold a lock on cur while checking if cur->value == value, because no other thread could delete cur or insert another value into prev->next if a thread holds a lock on prev. (Could change cur->next, but reading value is independent of that.)

That said, I don't see how this is a significant improvement, because the only difference in duration of locking cur->lock is ~1 line of code (if cur->value == value).

In this scheme we make sure to lock after we have acquired something, is the reason behind this to spend as little time locking as possible, because having access to data isn't an issue, rather it is doing things with it e.g. reading or writing.

Please log in to leave a comment.

This has improved the parallelism at the cost of additional lock/unlock calls with are relatively expensive, and by adding additional memory usage. Useful if the parallelism was able to speedup the application to overcome the added lock/unlock overhead where the added memory usage is inconsequential.