This slide cleared up a misconception I had about superscalar execution. The definition given in lecture 1 for superscalar execution was the following: the processor automatically finds independent instructions in an instruction sequence and executes them in parallel on multiple execution units. I had initially confused execution units with additional cores. Thursday's lecture made it clear that you cannot truly run two threads in parallel without multiple cores.

@ismael, thank you for this distinction. Coming out of lecture 1, I was wondering about the difference between concurrency and parallelism, remembering what was covered briefly in 110 and now that we are diving deeper in 149. A quick Google search also turned up a clear comparison of parallelism vs. concurrency.

We (the programmer or the compiler) need to spin up multiple threads to leverage the processor's multicore design.

In practice, do cores typically share some data (from some source of truth), or do they usually copy the data such that there is one copy of the dataset per core?

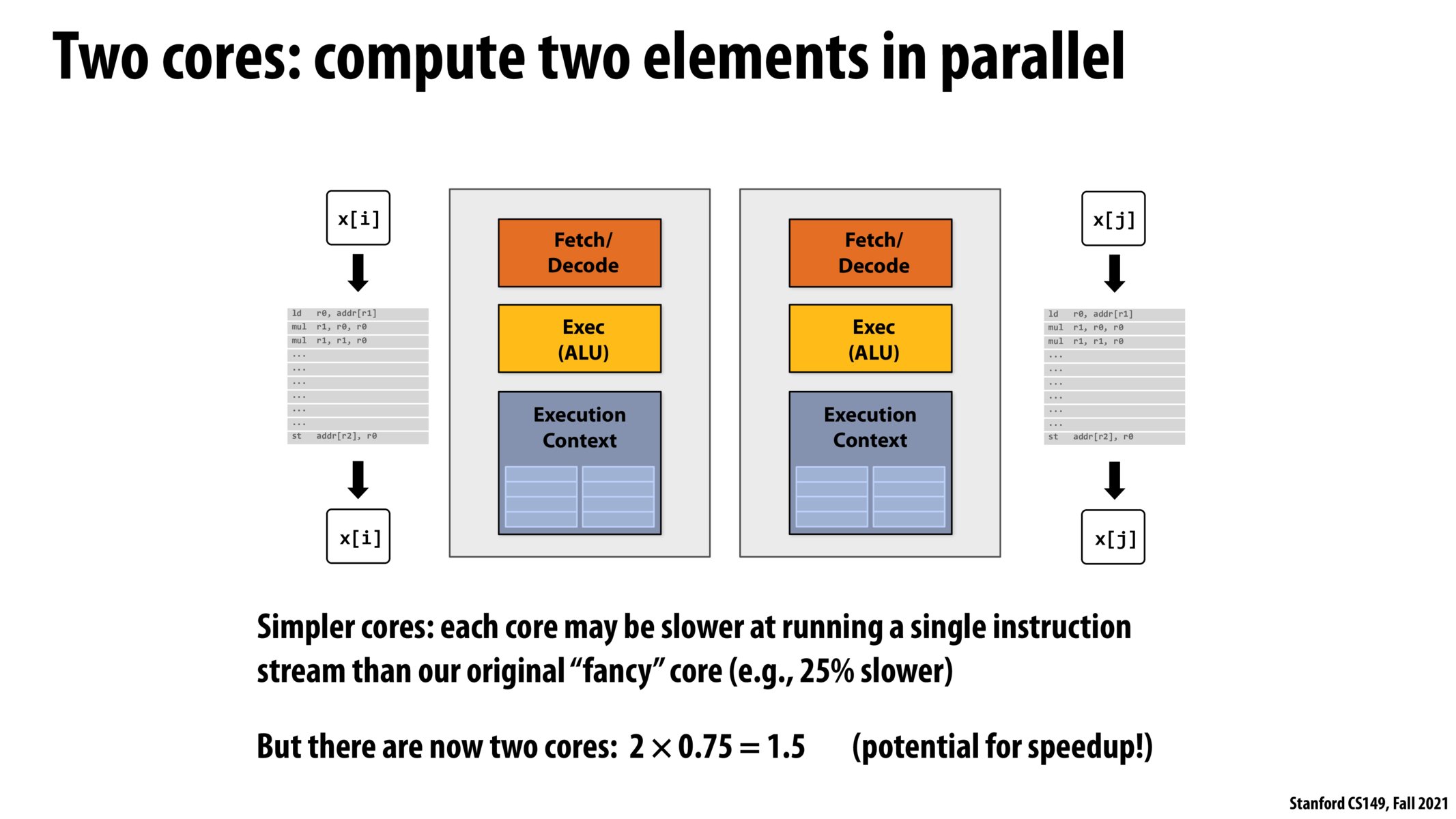

A summary of how we get the 2 x 0.75 = 1.5 speedup in case anyone missed it.

Here, we are comparing:

* the "fancy" single-core processor on slide 17, with add-ons such as a data cache and out-of-order control logic that help a single instruction stream run faster
* and the "simpler" multicore processor on this slide, with 2 cores, each of which can run one instruction stream/thread, but without the fancy processor's add-ons for speeding up a single instruction stream

Suppose each core of the multicore processor runs 25% slower than the "fancy" single-core processor when executing a single instruction stream. This means that one core of the multicore processor can run 75% (0.75) of the instructions that the fancy single-core processor can run in the same amount of time (clocks). If there are 2 instruction streams that can run in parallel at all times, the multicore processor can run 2 x 0.75 = 1.5 times as many instructions as the fancy single-core processor, since it has 2 cores; hence the speedup of 1.5.

Note that this is the speedup when both cores are in use. If the processors are scheduled to run only 1 single-threaded program, there is only 1 instruction stream, so only one of the multicore processor's two cores is busy and the other is idle. In that case the multicore processor is still 25% slower than the fancy single-core processor, since the additional core isn't utilized.
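To make the arithmetic concrete, here is a minimal sketch of the same back-of-the-envelope model. The function name `relative_throughput` and its parameters are made up for illustration, and it assumes the instruction streams are perfectly independent with no synchronization or memory overhead, which real programs rarely achieve.

```python
def relative_throughput(num_cores, per_core_speed, num_streams):
    """Instructions per unit time, relative to one 'fancy' core (speed 1.0).

    Hypothetical model: each busy core contributes per_core_speed, and a core
    is busy only if there is an instruction stream available to run on it.
    """
    busy_cores = min(num_cores, num_streams)
    return busy_cores * per_core_speed


# Two independent instruction streams available.
fancy_two_streams = relative_throughput(num_cores=1, per_core_speed=1.0, num_streams=2)
multi_two_streams = relative_throughput(num_cores=2, per_core_speed=0.75, num_streams=2)
print(multi_two_streams / fancy_two_streams)  # 1.5 (the 2 x 0.75 speedup)

# Only one single-threaded program: the second core sits idle.
fancy_one_stream = relative_throughput(num_cores=1, per_core_speed=1.0, num_streams=1)
multi_one_stream = relative_throughput(num_cores=2, per_core_speed=0.75, num_streams=1)
print(multi_one_stream / fancy_one_stream)  # 0.75 (25% slower)
```

The `min(num_cores, num_streams)` term is what captures the point above: extra cores only help when there are enough instruction streams to keep them busy.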