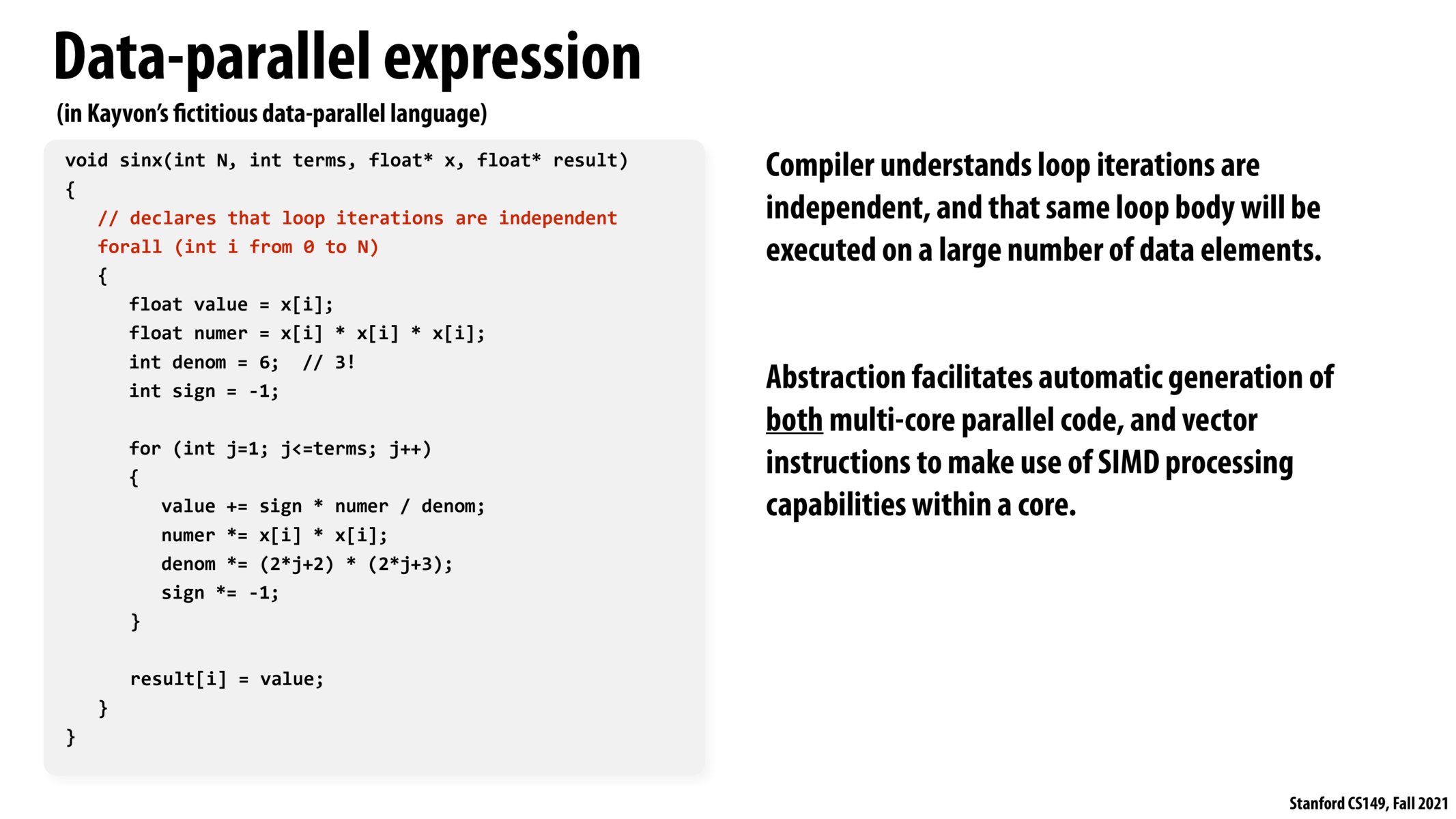

Note that this particular code is not in any actual language. (It's not ISPC, if that's what you are wondering.) It's a "fake" language with a "for all" loop that we said is used to declare that loop iterations are independent. We did not provide an implementation of this fake language in class.

Your post above is asking about the implementation of this program. The slide is not saying how this code is parallelized on a machine, it is only pointing out that since the loop iterations are known to be independent, a compiler/runtime system has the flexibility to execute them in any order if chooses, and therefore has the flexibility to choose different forms of parallel implementation. It is possible for a hypothetical implementation to parallelize this code by creating threads that run on different cores, or by compiling it to SIMD vector instructions in a single thread, or both!

Epic — thanks Kayvon that makes sense!!

Please log in to leave a comment.

I think I may be a bit confused here — is the idea that each independent loop over i would be scheduled on a different core, and SIMD would be used to compute the inner loop over j (which are the Taylor terms for a specific x[i]?) This is confusing to me because a few slides back it seemed we were using SIMD for iterations across i, so I wanted to clarify which part of the loop each type of parallelism corresponded to (it seems like there are multiple ways of doing this, eg multicore execution for the loop over i, or SIMD execution for the loop over i as suggested on a previous slide)