@shaan0924. Absolutely. The idea of SIMD designs is to amortize all the processor logic needed to control an instruction stream over many execution units. And the savings of sharing all this logic across multiple ALUs can be huge.

I was interested in precisely how programmers can take advantage of these concepts, hence the summary below. Please correct me if I'm missing something / am incorrect here!

-

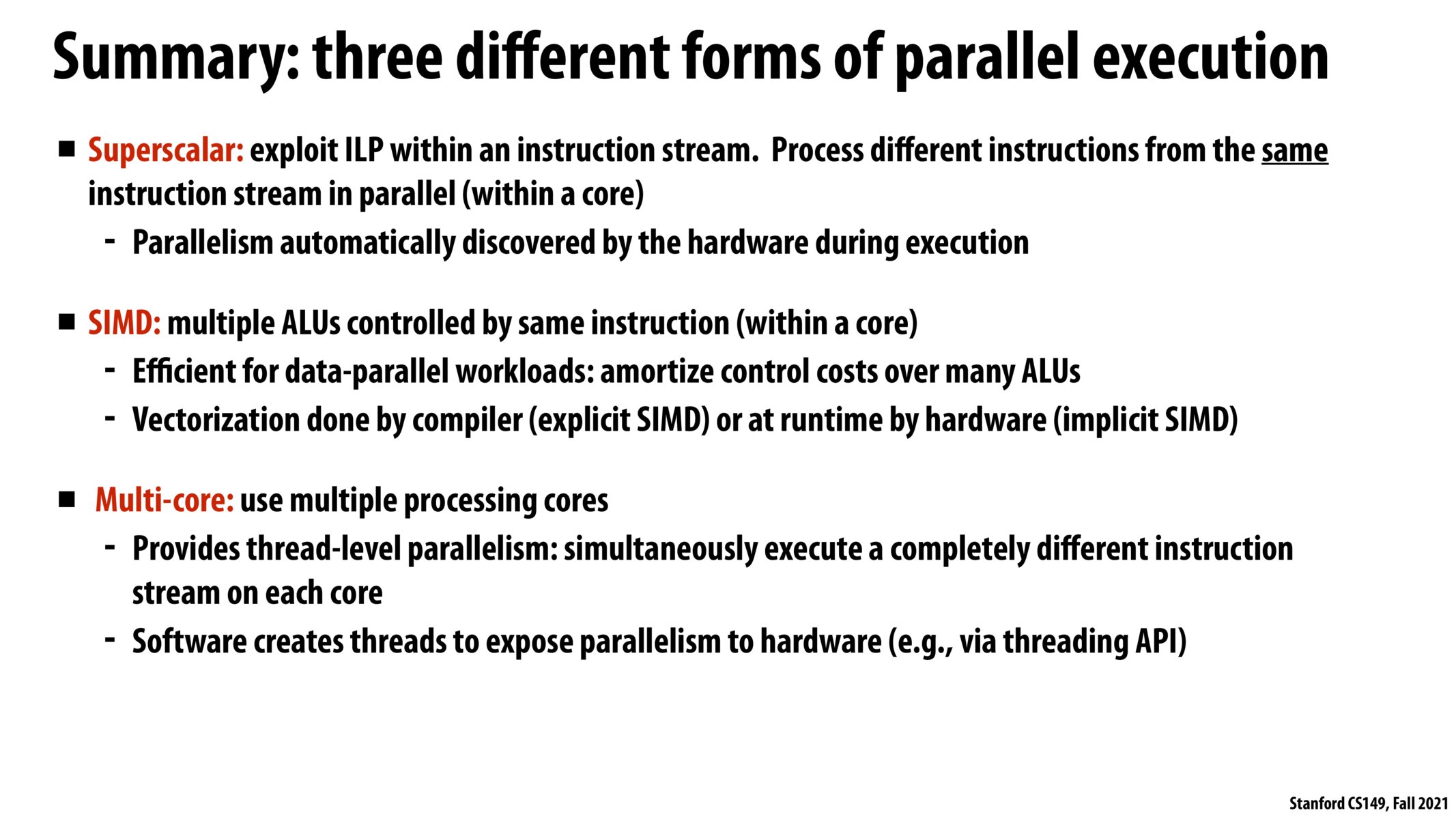

Superscalar (ILP): automatically discovered by hardware, nothing a programmer can do to enable better superscalar execution beyond writing code that has an optimal dependency tree.

-

SIMD: potentially discovered by compiler (programmers can write SIMD instructions using intrinsics) or at runtime by hardware (sidenote: how does hardware identify these points of optimization? this seems far more challenging than discovering ILP)

-

Multi-core: only enabled by explicit programmer thread/process creation. Further boosted by potential hyperthreading: in this case, the OS allots some fixed number of threads to each hyperthreading-enabled processor (equivalently, core), and the processor hardware switches between these threads (0 context switching cost) in order to achieve peak utilization (so utilization isn't hampered on RAM access). On fs access, a thread would block, resulting in an OS-level context switch at which point a different thread would be assigned to the blocked thread's processor.

I am still a little confused on the concept of implicit SIMD. It seems that there are so many different types of instructions that might need to be run, and in order for this architecture to be general, we might assume that we have no idea what program the user will want to run. In that case, it seems unlikely that an arbitrary program would have enough of the same instruction so close together in execution time that we can use implicit SIMD. How much utilization can we achieve on average, or are we oftentimes at the 1/8th worst case scenario we discussed in lecture? Or is this architecture only used when we have specific applications we know can be parallelized?

Please log in to leave a comment.

I find it hard to rationalize why I would choose SIMD over multi-core when multi-core can operate more intelligently. Is it the ALU control costs that makes SIMD more optimal or is SIMD more efficient at running simple operations in parallel?