@jtguibas, there are a few reasons for that. The first is that the maximum size is dictated by the page size. In order to do virtual-to-physical address translation at the same time as the cache lookup, the cache index has to come from the page-offset bits, which keeps the L1 cache small (usually 32KB). The second reason comes from the circuitry of caches. In general, making a memory larger makes it slower, so if we were to make our L1 larger, we would also make it slower (due to wire delays, etc.), which is not desirable.
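To make the page-size limit concrete, here is a rough sketch of the arithmetic. The 4KB page, 8-way associativity, and 64-byte line are my assumptions (typical values, not stated above), and the function names are just for illustration. The idea is that the set index plus line offset must fit inside the page offset, which caps the cache at page size times associativity.

```python
def log2_exact(n: int) -> int:
    """Return log2(n) for a positive power of two."""
    assert n > 0 and n & (n - 1) == 0, "expected a power of two"
    return n.bit_length() - 1


def max_overlapped_cache_bytes(page_bytes: int, ways: int) -> int:
    """Largest cache whose index bits all come from the page offset.

    Each way can hold at most one page worth of data, so the limit is
    page size times associativity.
    """
    return page_bytes * ways


def index_fits_in_page_offset(cache_bytes: int, line_bytes: int,
                              ways: int, page_bytes: int) -> bool:
    """Check whether set-index bits + line-offset bits fit in the page offset."""
    sets = cache_bytes // (line_bytes * ways)
    index_bits = log2_exact(sets)
    line_offset_bits = log2_exact(line_bytes)
    page_offset_bits = log2_exact(page_bytes)
    return index_bits + line_offset_bits <= page_offset_bits


# Assumed example values: 4KB pages, 8 ways, 64-byte lines.
print(max_overlapped_cache_bytes(4096, 8))                  # limit in bytes
print(index_fits_in_page_offset(32 * 1024, 64, 8, 4096))    # 32KB cache
print(index_fits_in_page_offset(64 * 1024, 64, 8, 4096))    # 64KB cache
```

Under those assumed values the limit works out to 32KB, which matches the usual L1 size. Going bigger would need more ways or larger pages.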

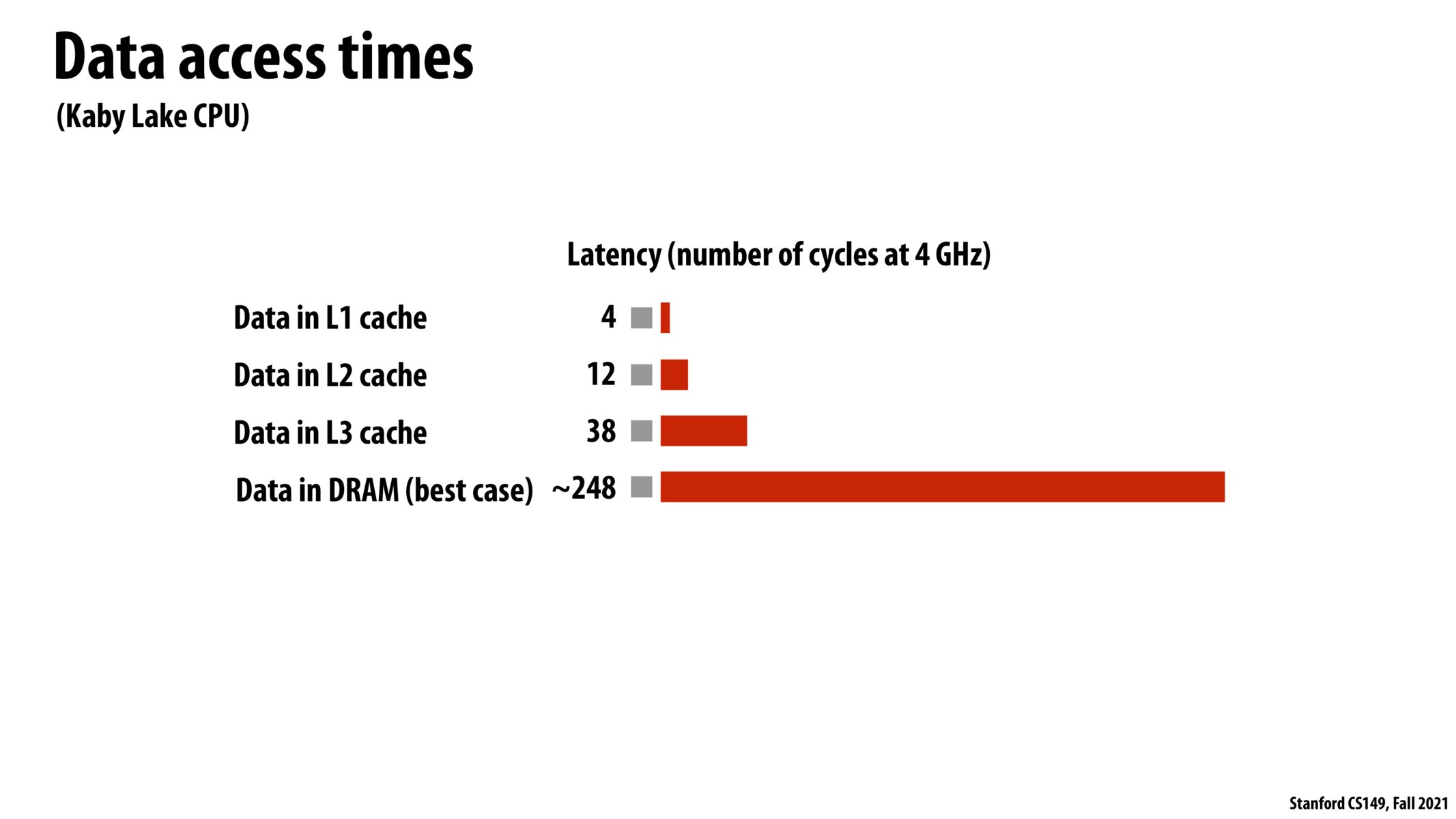

I wonder what would happen if we laid out memory in concentric circles, with different cache regions being different rings of the circle. In this kind of layout, access time would grow with distance from the center while capacity grows with the enclosed area, so size and latency would be directly tied together. In practice this is roughly what we see with cache sizes and speeds: each cache tier is both considerably larger and considerably slower than the one before it, with size often differing by about an order of magnitude.

I found this reference on Stack Overflow comparing the costs of various CPU operations (including cache and DRAM lookups). It is particularly eye-opening, especially with network latency included in the comparison: https://stackoverflow.com/questions/4087280/approximate-cost-to-access-various-caches-and-main-memory
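To see how those latencies combine, here is a small sketch of the standard average memory access time (AMAT) calculation. All of the cycle counts and miss rates below are made-up illustrative values, not numbers from the linked post, and `amat` is a name I picked for this example.

```python
def amat(levels, memory_latency):
    """Average memory access time in cycles.

    levels: list of (hit_latency_cycles, local_miss_rate), ordered from
            the cache closest to the core outward.
    memory_latency: cycles to reach DRAM after the last cache misses.
    """
    total = memory_latency
    # Work from the outermost level back toward the core:
    # time(level) = hit_latency + miss_rate * time(next level out)
    for hit_latency, miss_rate in reversed(levels):
        total = hit_latency + miss_rate * total
    return total


DRAM_CYCLES = 200  # assumed value for illustration

# Assumed three-tier hierarchy: small and fast, then larger and slower.
three_tiers = [(4, 0.10), (12, 0.40), (40, 0.50)]

# Assumed single huge cache: low miss rate, but every hit is slow.
one_huge_cache = [(40, 0.02)]

print(amat(three_tiers, DRAM_CYCLES))
print(amat(one_huge_cache, DRAM_CYCLES))
```

With these assumed numbers the three-tier hierarchy averages about 10.8 cycles per access versus 44 for the single large cache, because most accesses are served at L1 speed.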

As an added bonus on top of being a lot faster, getting data from the cache instead of DRAM saves a significant amount of power. This might not seem as important as memory latency, but energy efficiency is valuable on mobile chips especially, and also on server chips, where power usage and cooling matter.

Why is it more efficient to have three different tiers of caches? What is stopping us from having a huge L1 cache?