What would be the benefit of using the message passing model over the other two? The code looks much more complicated in this scenario - is the use case in a world where shared memory is impossible, like in super computer configurations?

It seems to me that this code would be more efficient if messages were sent without waiting. The program would only need to confirm that messages had been received just before sending the next messages. Is there any reason why this wouldn't be better?

This feels risky, because it seems like the program instances could potentially get out of sync. I suppose the theoretical definitions of send and recv prevent that, but it seems like those would be really hard to implement robustly.

^Slide 35 talks about non-blocking async send/recv that may work better for some applications. In fact, I think async functions are used more in practice because in most cases you only need to react after receiving some messages and you don't want to block the whole thread.

In response to @juliob, my understanding is that the other two models are restricted to a single core/multiple cores that share the same memory. In a distributed setting, these requirements are not feasible across different machines so the message passing model has to be used.

This may be questions regarding implementation details, but how does each node differentiate between messages from different iterations of the outermost while loop? Do they attach iteration number in every message so each node do not execute the loop out of order?

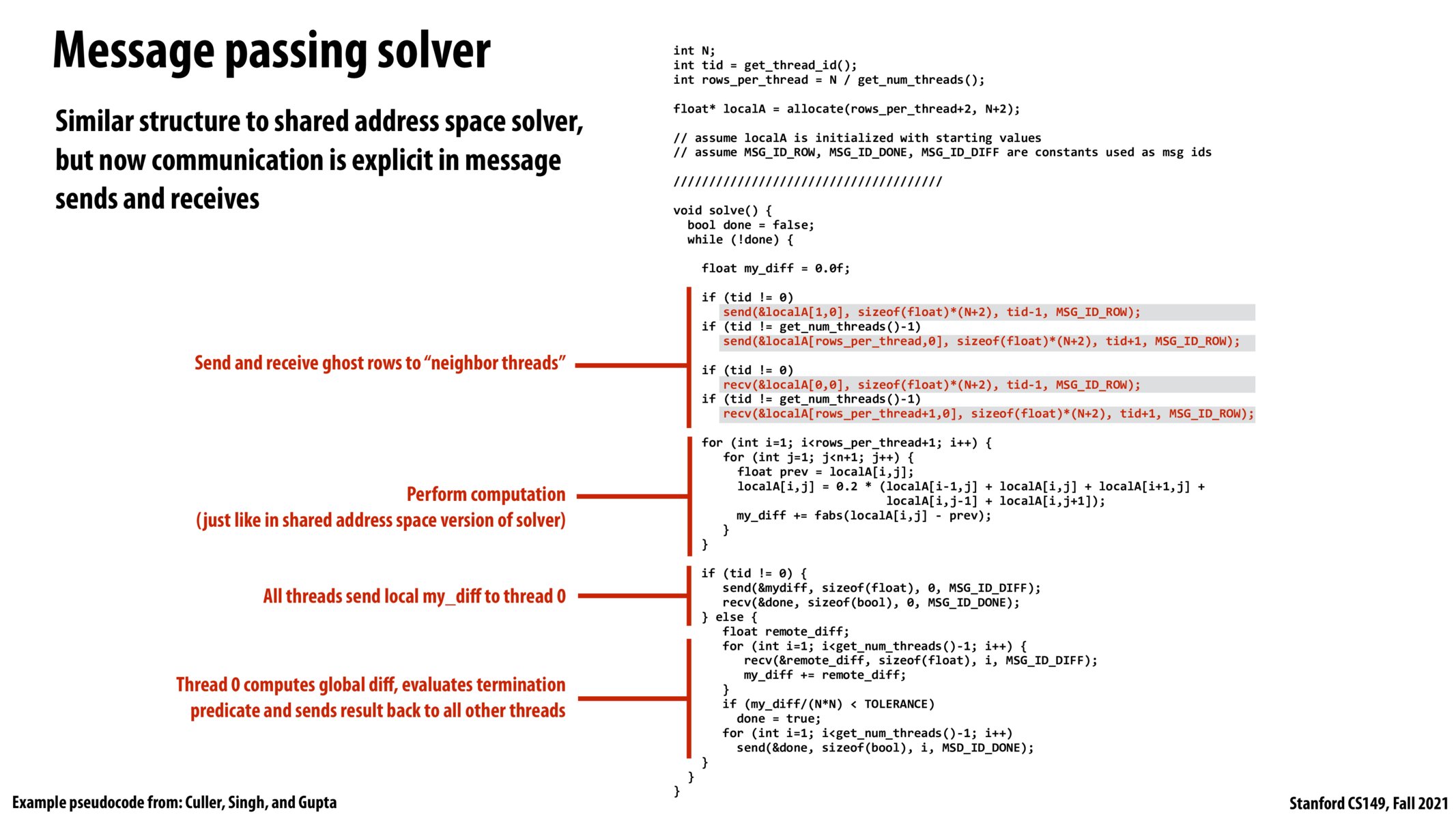

This message-passing solver is also an example of an SPMD solver from an execution model standpoint. It is message passing because each SPMD worker or thread is in a different address space, and the communication between these workers is implemented as message passing as opposed to loads and stores. Data-parallel, SPMD, and message passing are not meant to be distinct categories. In actuality, these categories are mixed and matched together.

if we are going to use the async rec/send, how will we know we have sent/received the data and can proceed further? My understanding is that if the async version of the rec/send is used, the code will look exactly like the one we have in the slide above, except there will be a line of code right before the for loop saying "wait until received/sent", something like while(1){ if (received && sent){ break } } Is this correct?

This form of parallel programming model seems to rely on other computers in the network to be available and not busy/broken (I might be wrong). For the threads-on-1-computer case where some other threads are unavailable, then we may have other threads in the same processor/computer that may take over an available work in a queue. However, what would happen if other computers that the "main computer" may rely on is unavailable? Do we just stall until the dependent computers become available/free?

Please log in to leave a comment.

As Kayvon explained in lecture, this code will lead to a deadlock between processors as all are spinning in the send() function and no processors are attempting to receive data. The way to get around this is to have some processors send() and others recv() for some length of time, then swap those roles for another length of time.

The specific solution that we came up with in class is to have those processors assigned to even-indexed spatial blocks send() first and have those assigned to odd-index blocks recv() first.