Since the traversal strategy that minimizes cache misses may depend on the number of cache lines and the size of each cache line, is it possible to write code in such a way that the compiler can optimize this for you?

^ I guess the compiler already does some kinds of cache-line optimizations. However, this specific example would require the compiler to understand the semantics of the program well enough to split the loop into separate traversals, and if I had to guess, that is not currently possible in standard compilers (g++, etc.).

It seems like this blocked assignment strategy depends on the cache size of the machine it runs on. How, as the programmer, do you write portable code for machines with different cache sizes? I have never seen a C global variable called L1_CACHE_SIZE, haha.

This seems to suggest that whenever I loop over all the elements of a 2D array, I should always loop in this order. Is this true?
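Whether one loop order is "always" right depends on how the array is laid out in memory. C stores 2D arrays in row-major order, so a[i][j] and a[i][j+1] are adjacent and the cache-friendly choice is to make the last index the inner loop; a column-major language such as Fortran is the opposite. The sketch below (function names are hypothetical) shows both orders over the same data: they compute the same sum, but the second one strides across rows and touches a different cache line on nearly every access once a row is larger than a line.

```c
#include <stddef.h>

/* Inner loop walks contiguous memory: consecutive j values share cache lines. */
double sum_row_major(size_t rows, size_t cols, double a[rows][cols]) {
    double s = 0.0;
    for (size_t i = 0; i < rows; i++)
        for (size_t j = 0; j < cols; j++)
            s += a[i][j];
    return s;
}

/* Inner loop jumps cols * sizeof(double) bytes per step: poor spatial locality
   for large cols, even though the result is the same (up to FP rounding). */
double sum_col_major(size_t rows, size_t cols, double a[rows][cols]) {
    double s = 0.0;
    for (size_t j = 0; j < cols; j++)
        for (size_t i = 0; i < rows; i++)
            s += a[i][j];
    return s;
}
```

For a simple single pass like this, the row-major order is the right default in C; for kernels that reuse data (such as matrix multiply), loop order alone is often not enough and blocking becomes important.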

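@abraoliv, you can query the L1 data cache line size at run time via a call to sysconf(3):

sysconf(_SC_LEVEL1_DCACHE_LINESIZE)

The total L1 data cache size is available the same way with sysconf(_SC_LEVEL1_DCACHE_SIZE). Note that these names are glibc extensions, not POSIX.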
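A minimal sketch of wrapping these queries so the code still builds and runs where they are unavailable. The helper names l1d_line_size and l1d_cache_size are hypothetical, and the fallback values (64-byte lines, 32 KiB L1D) are assumptions typical of current x86-64 parts, not guarantees. glibc defines each _SC_* name as a macro as well as an enum value, which is what makes the #ifdef guard work; on some platforms sysconf can still return 0 or -1 for these names, so the result is checked too.

```c
#include <unistd.h>

/* L1 data cache line size in bytes, or an assumed default if unknown. */
long l1d_line_size(void) {
#ifdef _SC_LEVEL1_DCACHE_LINESIZE
    long v = sysconf(_SC_LEVEL1_DCACHE_LINESIZE);
    if (v > 0)
        return v;
#endif
    return 64; /* assumption: common line size on x86-64 */
}

/* Total L1 data cache size in bytes, or an assumed default if unknown. */
long l1d_cache_size(void) {
#ifdef _SC_LEVEL1_DCACHE_SIZE
    long v = sysconf(_SC_LEVEL1_DCACHE_SIZE);
    if (v > 0)
        return v;
#endif
    return 32 * 1024; /* assumption: common L1D size */
}
```

A program can call these once at startup and derive its block sizes from the result, instead of baking in a compile-time constant.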

The other possible options for sysconf (which include the L2 cache size) are here:


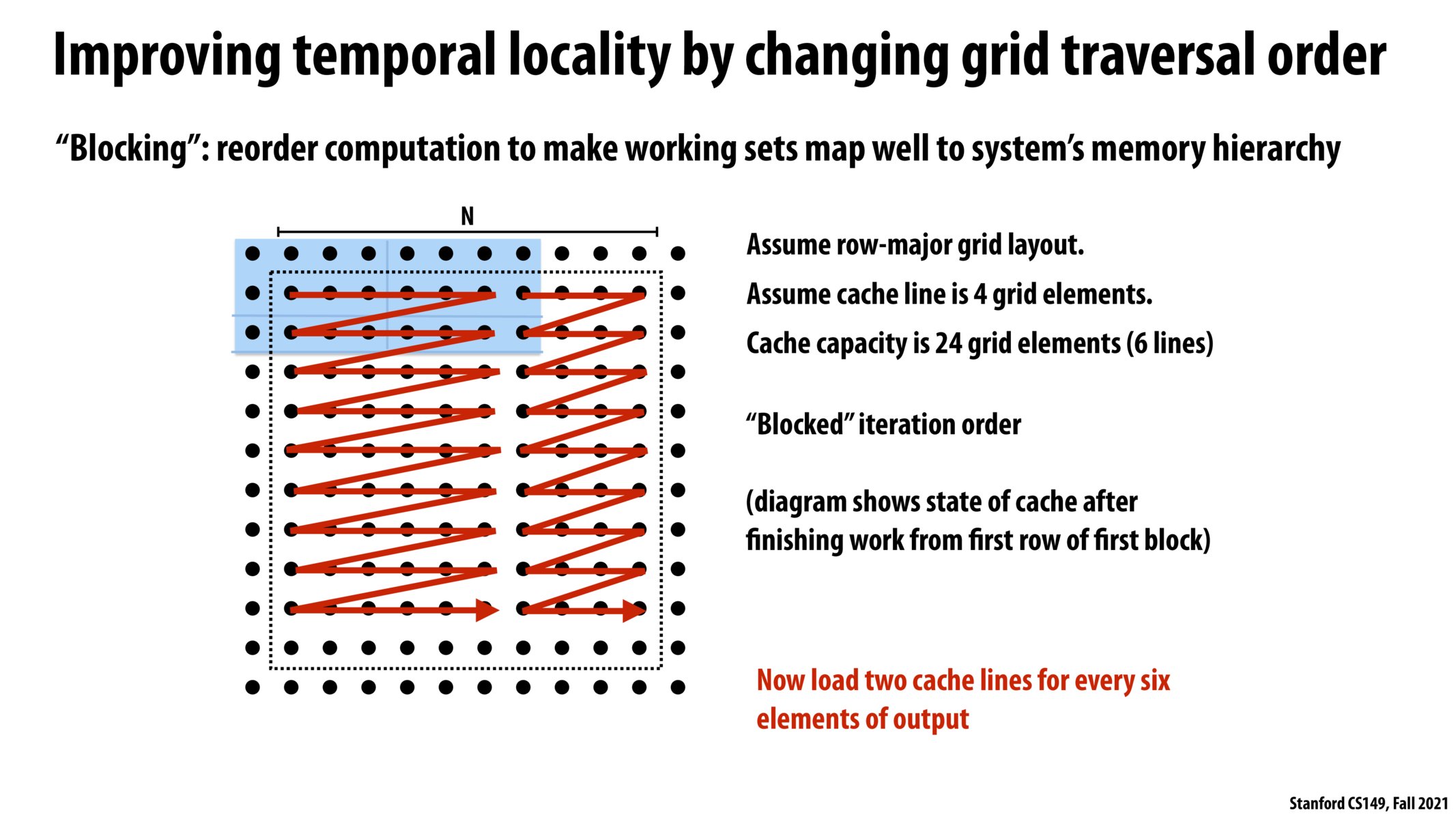

We are taking advantage of temporal locality in the cache, i.e., maximizing the reuse of each cache line by all the computation that needs it while the line is still resident. This improves arithmetic intensity because more work gets done per memory load.
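A common way to get this temporal reuse is loop blocking (tiling). The sketch below is a blocked matrix multiply for square row-major matrices; it is an illustration of the technique, not the method from the slide. The names matmul_blocked and pick_block_size are hypothetical, and the sizing rule (choose the largest power-of-two tile such that three bs x bs tiles of doubles fit in the cache) is a rough heuristic assumption. The cache size passed in could come from sysconf(_SC_LEVEL1_DCACHE_SIZE) on glibc.

```c
#include <assert.h>
#include <stddef.h>

/* C += A * B for n x n row-major matrices, computed tile by tile.
   Each bs x bs tile of A, B and C is reused many times while it is
   (hopefully) still cached, which raises arithmetic intensity. */
void matmul_blocked(size_t n, size_t bs,
                    const double *A, const double *B, double *C) {
    assert(bs > 0); /* bs == 0 would loop forever */
    for (size_t ii = 0; ii < n; ii += bs)
        for (size_t kk = 0; kk < n; kk += bs)
            for (size_t jj = 0; jj < n; jj += bs) {
                /* Clamp tile edges so n need not be a multiple of bs. */
                size_t i_end = ii + bs < n ? ii + bs : n;
                size_t k_end = kk + bs < n ? kk + bs : n;
                size_t j_end = jj + bs < n ? jj + bs : n;
                for (size_t i = ii; i < i_end; i++)
                    for (size_t k = kk; k < k_end; k++) {
                        double a = A[i * n + k];
                        /* Inner loop is contiguous in both B and C. */
                        for (size_t j = jj; j < j_end; j++)
                            C[i * n + j] += a * B[k * n + j];
                    }
            }
}

/* Heuristic (assumption): largest power-of-two bs such that three
   bs x bs tiles of doubles fit in cache_bytes. */
size_t pick_block_size(long cache_bytes) {
    if (cache_bytes <= 0)
        return 32; /* assumed default when the cache size is unknown */
    size_t bs = 1;
    while (3 * (2 * bs) * (2 * bs) * sizeof(double) <= (size_t)cache_bytes)
        bs *= 2;
    return bs;
}
```

With a 32 KiB cache, pick_block_size returns 32 (three 32 x 32 tiles of doubles take 24 KiB). In practice the best tile size is usually found by measurement, since associativity, other data, and the L2 cache all affect it.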