Yes, the values for each iteration will be slightly different. However, it should eventually converge to the correct solution here, with some assumptions about our input [1]. Since we're performing this algorithm until our diff is less than some tolerance (i.e. we're close to convergence), we should end up with an answer that's very close to the sequential result and the analytical solution.

[1] See converge properties here: https://en.wikipedia.org/wiki/Gauss%E2%80%93Seidel_method#Convergence

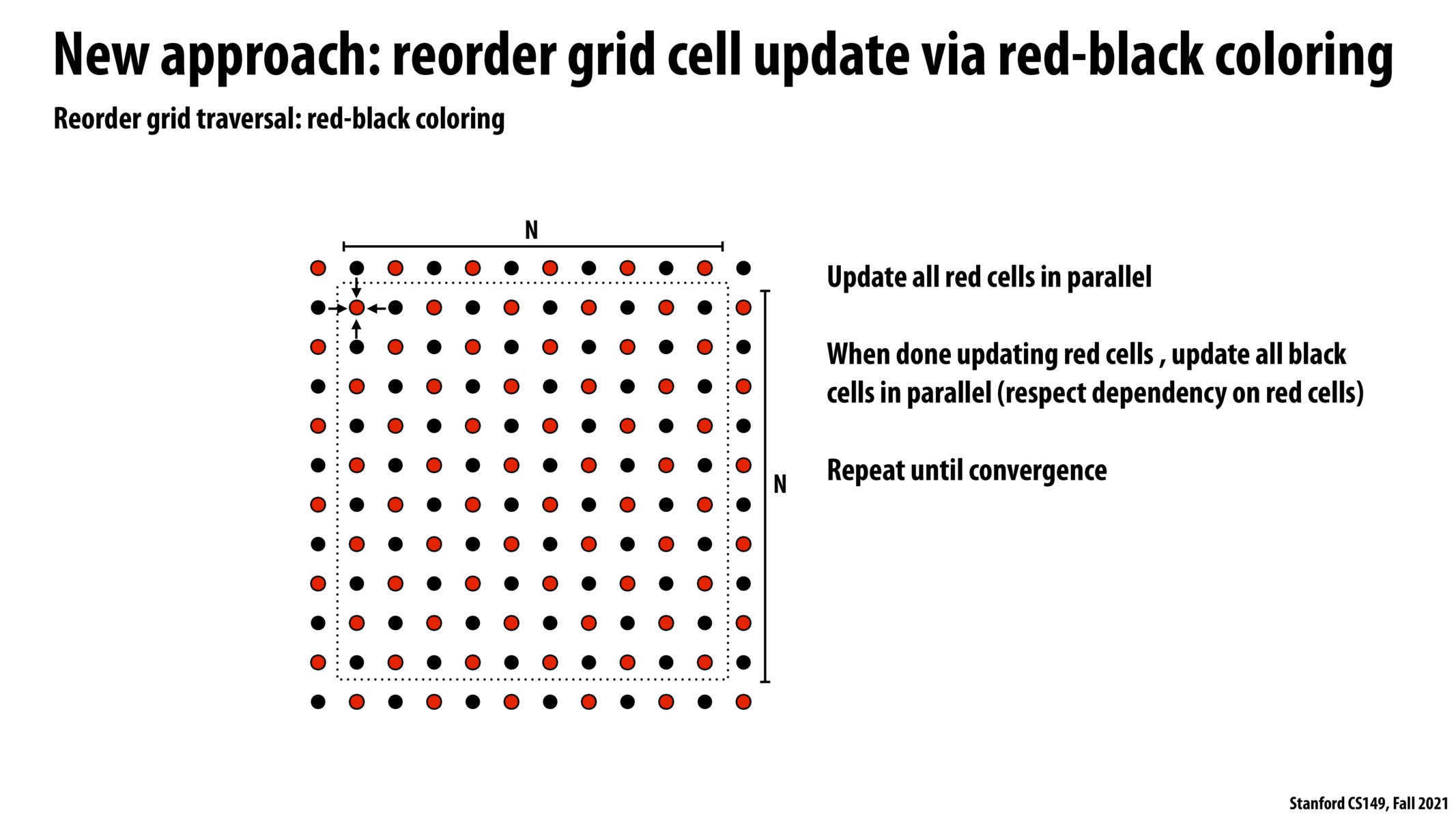

During the lecture, one of the students posted a question on this slide which talks about the tradeoff between increased parallelism and increased number of iterations with this new algorithm of red and black cells. I am not quite clear about why the number of iterations increases. Is it because it now requires two iterations to compute all the cells in the grid? Or is it because each cell requires more computations now to reach the tolerance difference? Or both?

It's that the modified algorithm converges slower than the original one (needs more iterations to reach tolerance threshold). In this example, the effect of the algorithm change is that there is the potential for much greater speedup due to enhanced parallelism, but that benefit comes at the cost of needed to do more work to converge to the same result. Whether or not the benefits outweigh the costs would be something the programmer would have to consider.

Please log in to leave a comment.

Just to confirm, if we are using this approach, for each iteration (update for black and red), the resulting answers will be different from the previous approach (the one that is less amenable to parallelism)? That is, since in the first round updating the red cell, for instance, the red cell on (1, 1) will be average of surrounding four black pts and itself, where the black above and on the left are still the initial value, while in previous case we already have the up and left black pts updated to a new value?