Back to Lecture Thumbnails

tigerpanda

shivalgo

Map Reduce was discovered in Google in 2003. We read a paper on MR in CS240. There are interesting ways in which the infrastructure scales up as time passes and stragglers are found, which are deemed failed due to heartbeat timeout, even if they come back later on. To get rid of this problem, Google cloud brings online a lot more nodes as time elapses so, on failure, work can be shared on more nodes to quicker completion. Read the paper!

jagriti

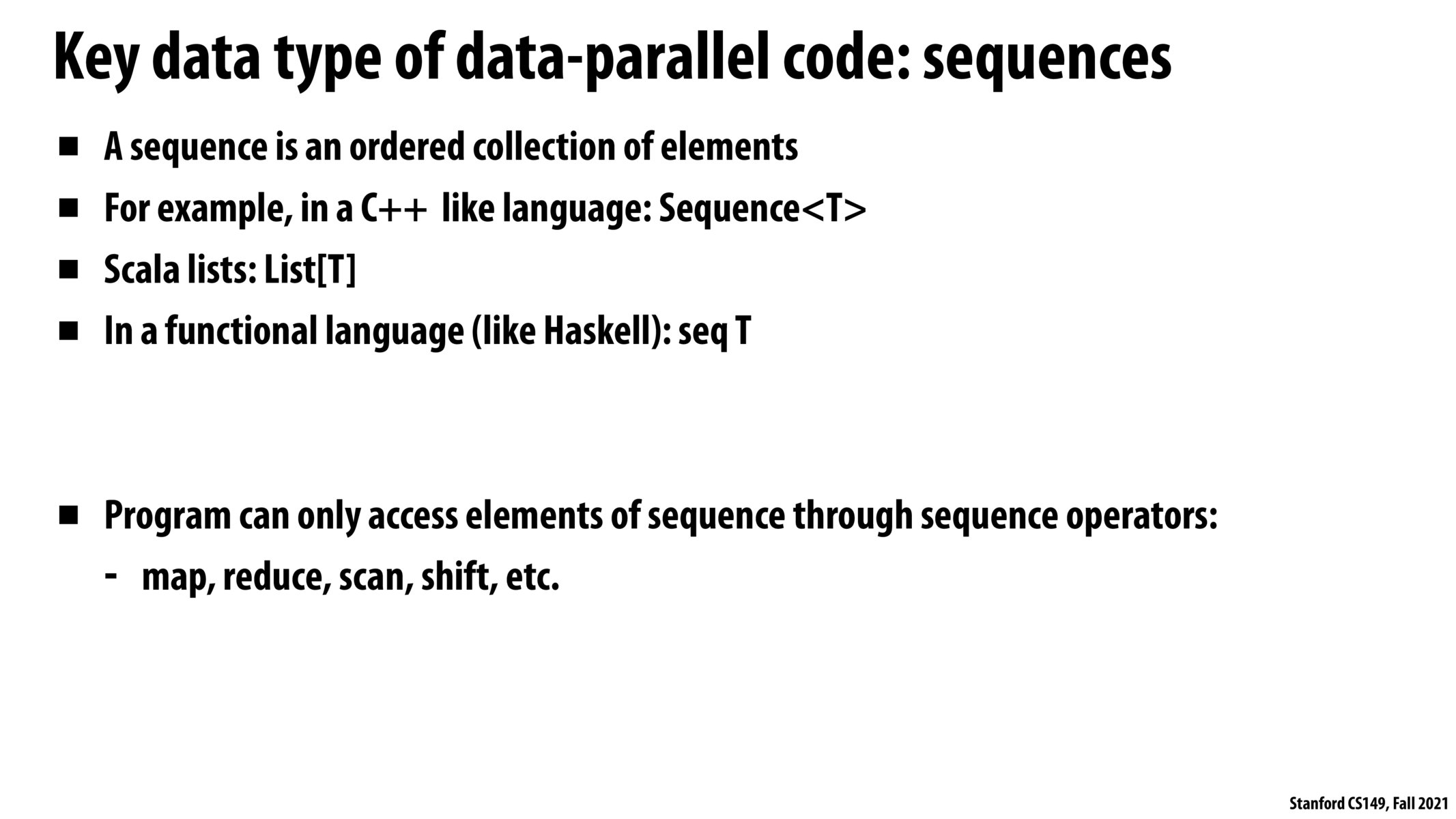

For a platform like Apache Spark - can RDDs (Resilient Distributed Datasets - fundamental data structures of Spark) be thought to be analogous/similar to the sequences in the slide?

Please log in to leave a comment.

Copyright 2021 Stanford University

I actually had the opportunity to work with Scala this summer during my internship. I didn't realize until this lecture how much parallelization there was happening in the code base I was working in until I took this class. We were basically working with a lot of data in excel spreadsheets which now that I think about it is a great example of work that can benefit from SIMD execution. We would have a bunch of data we needed to perform the same calculation to. I also had a hard time understanding what map was doing at times in the code(partly because we were also working with slick which also has its own map function)but it became truly clear after seeing the image on the next slide. I will now think of that image whenever I see a map being used in scala and think back to whether or not it makes sense for this particular operation to be performed. It's always really cool to see real world applications intertwine with what I am learning in class and vice versa.