I feel like it is: Tasks are created and run a gang of 8 program instances. Those 8 program instances figure out how to schedule the threads based off the foreach loop. Each task gets scheduled like any other old hyperthreading task

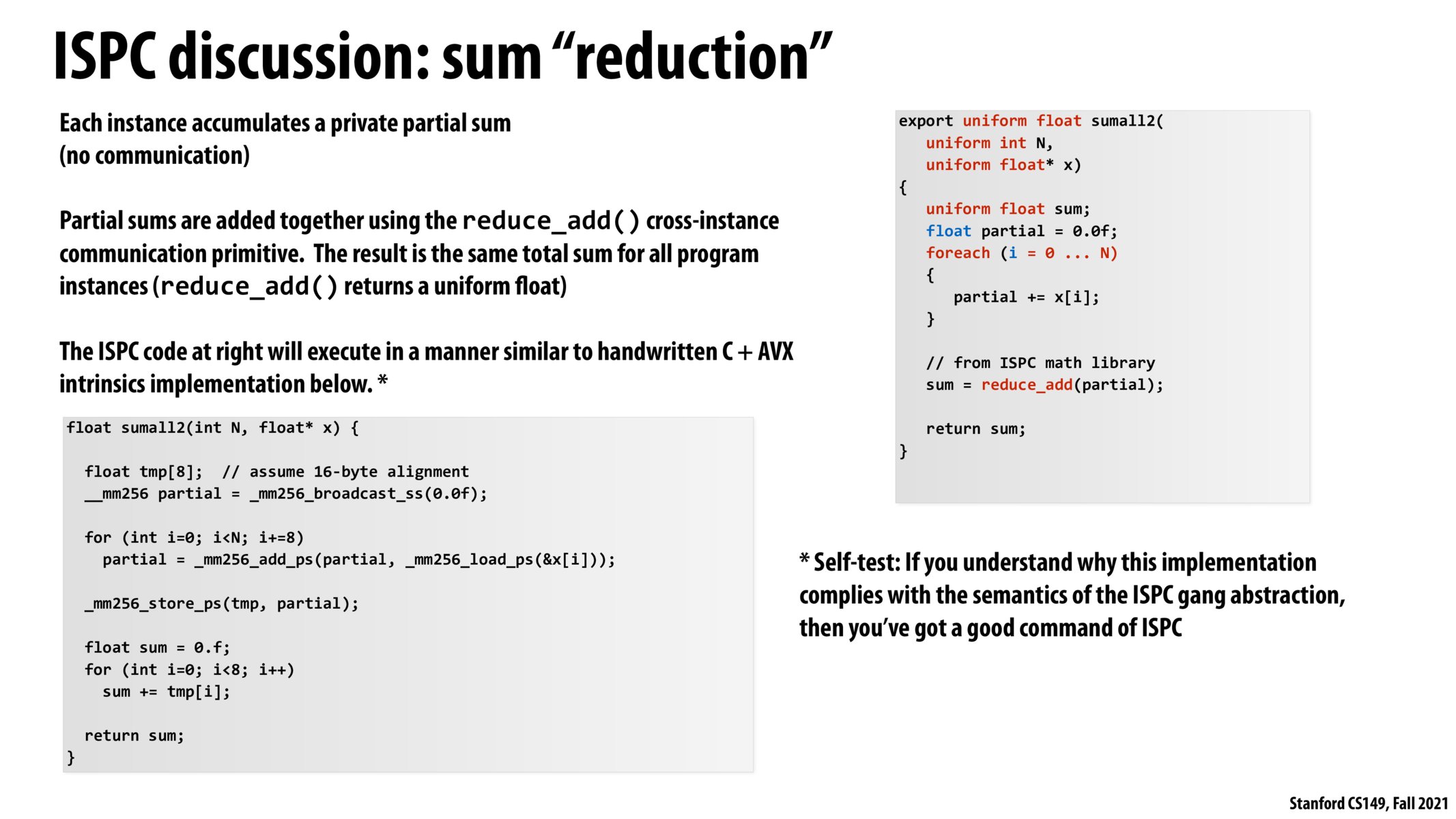

The abstraction ISPC provides is a really useful way to reason about parallelism, but we usually want to go deeper and understand what is happening end-to-end, this side really shows how vectorization is used to implement the parallelism and further provides the link to reason about how it is mapped to hardware.

From my understanding, running ISPC without tasks is essentially doing a compiler-generated SIMD. I'm curious, however, when would we want to solely use SIMD without tasks if tasks seem to always have a greater speedup?

This was also an "ah-hah" moment for me as well. I love this slide because it explicitly shows how ISPC is related to SIMD AVX intrinsics and how the foreach abstraction could be implemented.

I also see how this uses the data-parallel model and how one would perform a similar task using threads/locks/barriers to orchestrate workers.

@A bar cat: I think that's a general argument that we can (almost) always throw in more resources to get a higher speedup, whether it's e.g. ISPC tasks / hardware threads / etc. (although as we discussed regarding the difference between ISPC tasks and hardware threads, the latter generally tends to create more overhead). So I think it's more of a tradeoff of hardware resources vs. performance. In most cases, we probably don't want one program to eat up all our hardware resources.

Someone asked a question about how SIMD comes into play here and I wanted to check my understanding:

Individual program instances are ~not~ running SIMD/vector instructions right? Rather, it's that multiple program instances (within the same gang) are running the same instruction which then utilizes multiple ALUs/SIMD.

Wait so what's the relationship between SIMD and program instances? I'm still confused about the abstraction vs implementation side of this

@jchao01, Correct. This is how I understand this: each program instance has a certain part of the work assigned to it and since all these program instances execute the same exact sequence of instruction on different data, we (or compiler) can use SIMD unit to execute these instructions.

@A bar cat: Additionally to what @leopan said, tasks is an abstraction that takes advantage of multiple cores.

If we have a single core, then all tasks will run in that one core, which means that we will be just interleaving them in some random, albeit sequential, order (same problem of having multiple threads in single core leading to timeslicing).

@pizza,

If there are as many instances as SIMD vector indexes, then you can assume that there will be a 1:1 relationship between SIMD vector entries and program instances.

If there are, however, more vector indexes than instances, generally in this class we are using modulo operation and assuming that the vector is 8-wide, so each instance will handle each 0, 8, 16, ... or 1, 9, 17th entry, and so on.

@A bar cat: This is what I understood - If ISPC code does not have tasks and makes use of terms like foreach, programindex, programcount, etc only, it basically gets implemented on SIMD units in one core. When an ISPC code creates tasks, this code can be implemented on one or more cores with SIMD units where each core can take tasks from the task queue as they progress.

One more thing to note from this slide is the 'uniform' type variables. The value of the uniform type variables remains same for the entire gang of program instances (Gang size = SIMD width). That is the reason, x[i] is not added to the variable sum in the foreach loop. Instead each program instance accumulates the sum of the processed array elements into its dedicated partial sum. Here, 'partial' is not a uniform float, its a normal float variable. Every program instance(each vector lane in SIMD) has a partial sum by the end of all iterations. The reduce_add function which sums up all the partials gives out the required result of the operation.

SIMD instruction is an implementation for ISPC gang abstraction. They exist in different dimension.

Please log in to leave a comment.

A note of clarification that was a lightbulb for me today: In ISPC: Tasks are created and have (in our case) 8 gangs which are program instances running completely independently in parallel. This is an abstraction. Under the hood, this ISPC abstraction is IMPLEMENTED as SIMD! Someone please correct me if this is wrong!