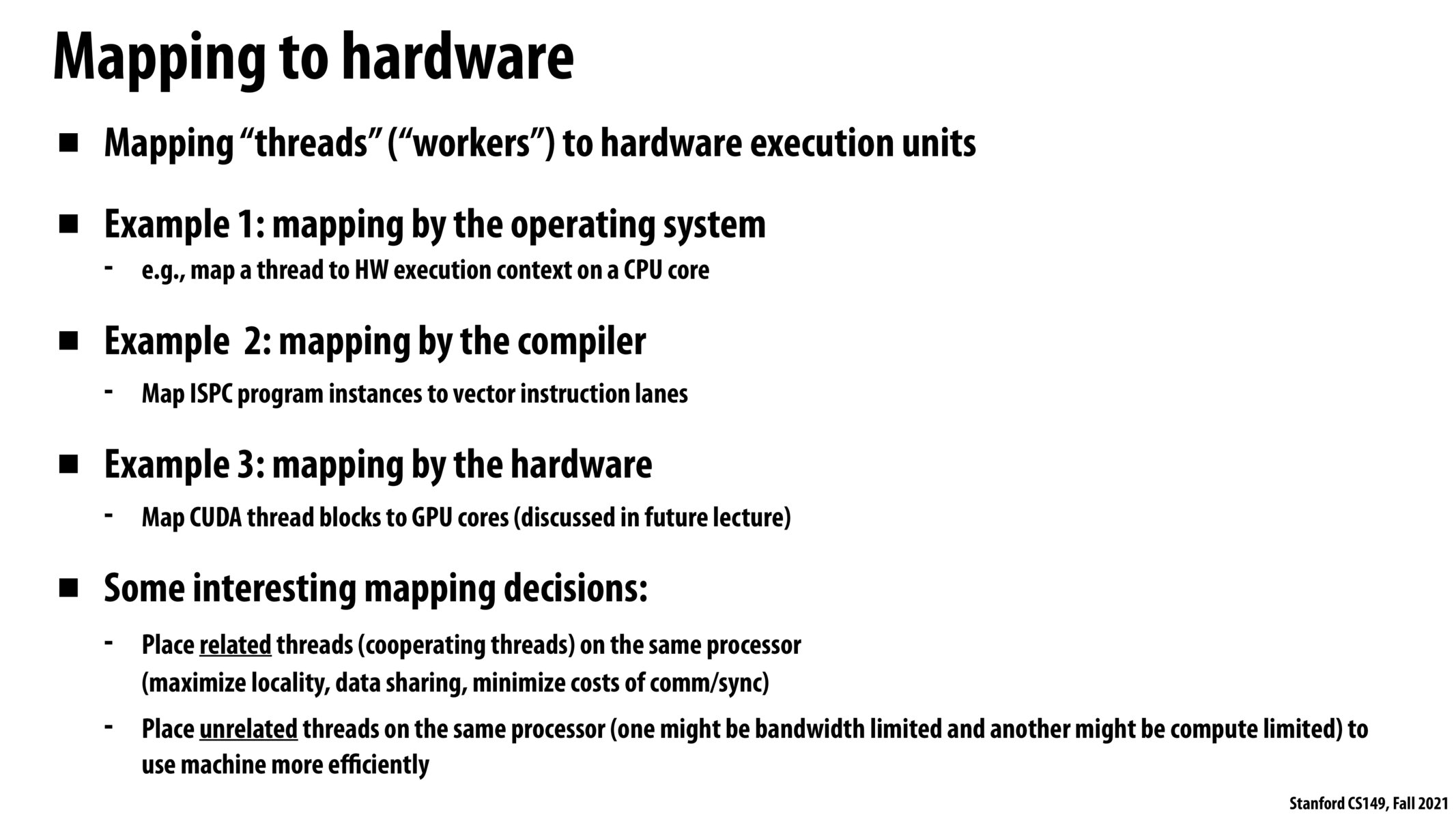

Mapping threads according to their relation with others is something that interests me. Is this something that is standard in OS's? It seems like finding the relation of threads, eg, one being bandwidth limited and the other compute limited would be complex to do at every context switch.

@ggomezm, it looks like mapping strategies, akin to thread scheduling strategies, are quite the area of research (here's an example of a new strategy). Some appear to be built to be independent of the program running and more generalizable with more intricate use of the ideas of locality, data sharing, and communication/synchronization requirements.

I know that when scheduling threads it is incredibly difficult/impossible to schedule threads in the most efficient manner as we don't know the exact runtime during scheduling. I imagine that is the root problem that research is looking for solutions.

Thanks for the paper, @ecb11! After running through it, here's a basic summary of what the paper covered:

-

They tested several different scheduling strategies, such as the (1) Native Linux Sceduler (Specifically "Tile Linux"), (2) Static Mapping (which we've discussed several times), (3) Basic Lowest Load (One thread is executed per core at a time, "It fills out the cores of the system in a Round-Robin fashion"), (4) Extended Lowest Load (Uses information from /proc/stat/ to see what threads are doing useful work vs sleeping / waiting. They use a custom data structure to analyze information from /proc/stat/ )

-

It was shown that on something like the N-Queens Problem, all four performed similarly

- The native SMP Linux Scheduler from Tile Linux performs well for small numbers of threads, but performance degrades as the number of threads goes up.

- For single-program workloads, BLL, XLL, and Static Mapping perform similarly, but as the number of threads goes up, Linux performance degrades

- Because static mapping is tuned at compile-time, it cannot handle multiple programs.

- When testing Linux, BLL, and XLL with the multiprogram, the best was XLL, and the worst was BLL. The reason for BLL performing worse than XLL was that there are workloads where the round-robin assignment of threads to cores leads to the assignment ignoring cores that are free (but are not immediately next in the RR-assignment). The reason XLL was better than BLL was that it was better equipped to understand which threads were busy and schedule them on-demand.

Long comment, but that was a fascinating read. Thanks @ecb11

Please log in to leave a comment.

The mapping decisions presented here are very interesting, I would think the best mapping would greatly depend on the program running, but is there an approach that has been proven to be generally better?