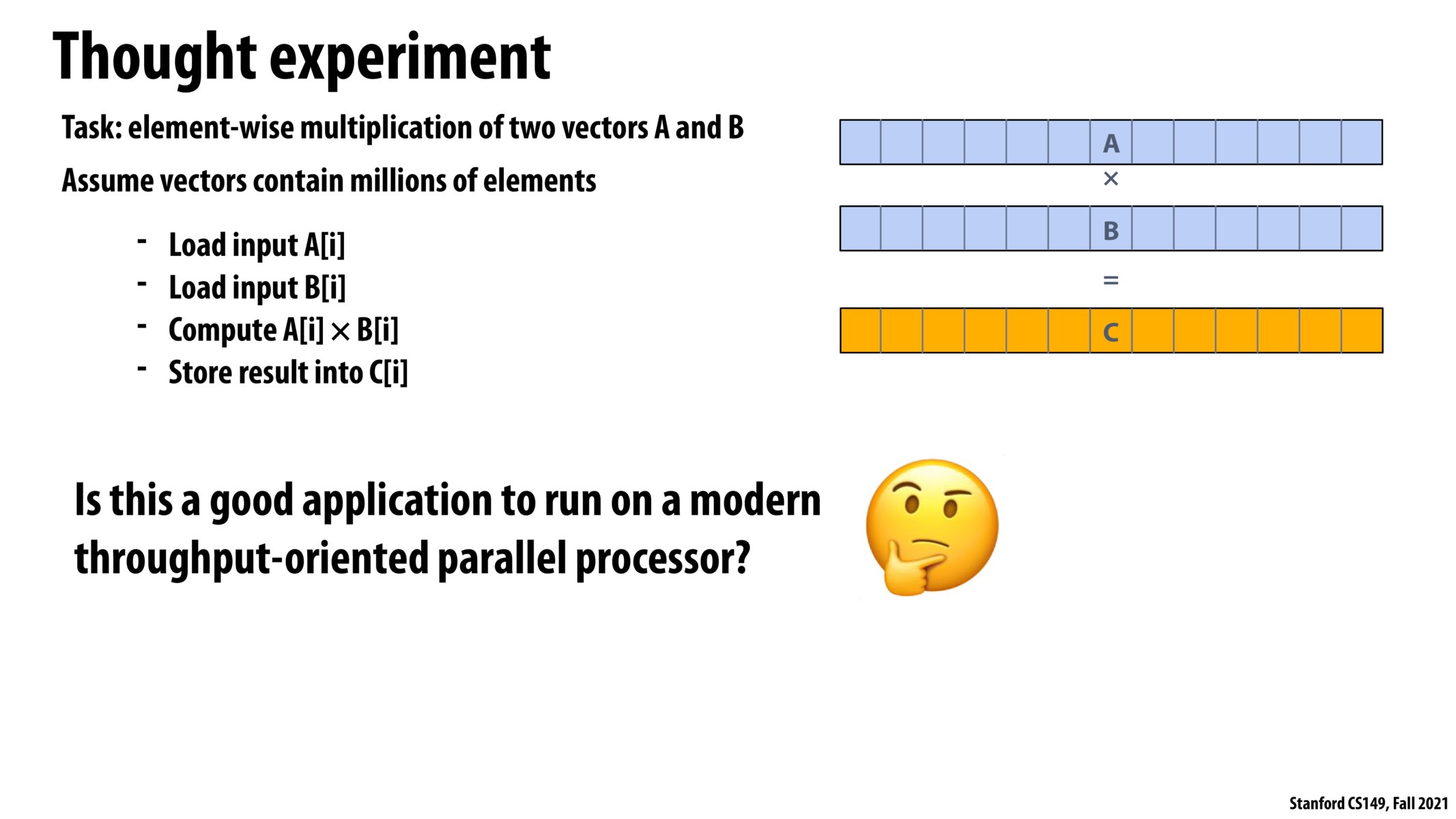

But isn't this the same as (or very similar to) saxpy, which did not achieve much speedup with additional processors?

@sareyan I think you're correct: this program will likely be bandwidth-bound because its arithmetic intensity is so low. Increasing compute throughput will not help us compute any faster unless we also have sufficient memory bandwidth!

What is the definition of a throughput-oriented parallel processor? Also, what kind of modern processor would be a good option to run the above program?

I believe this program satisfies the 3 requirements on the previous slide (for a program to utilize modern parallel processors efficiently). But, like other students have said, it could still be subject to the memory bandwidth limit (it needs frequent reads / writes to memory and performs relatively simple arithmetic operations on the data loaded from memory), and therefore can't utilize 100% of a modern parallel CPU/GPU without the support of high-bandwidth memory components. More details and calculations are shown on slide 36.

Even though this would likely be bandwidth-bound on typical modern architectures as @superscalar says, I think it typically takes several CPU cores to saturate memory bandwidth with sequential access patterns these days. So I'd definitely expect this program to benefit from multi-core parallelism, and from SIMD as well.
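To make the "several cores to saturate bandwidth" point concrete, here is a rough sketch. The bandwidth figures are hypothetical placeholders, not measurements of any real machine, and the model assumes speedup scales linearly with core count until the shared memory bus is saturated, which is a simplification.

```python
import math

def cores_to_saturate(total_bandwidth_gb_s, per_core_bandwidth_gb_s):
    """Smallest number of cores whose combined demand reaches the memory bandwidth limit."""
    return math.ceil(total_bandwidth_gb_s / per_core_bandwidth_gb_s)

def expected_speedup(num_cores, total_bandwidth_gb_s, per_core_bandwidth_gb_s):
    """Speedup over one core for a purely bandwidth-bound loop (simplified linear model)."""
    return min(num_cores, total_bandwidth_gb_s / per_core_bandwidth_gb_s)

# Hypothetical numbers, chosen only for illustration.
HYPOTHETICAL_TOTAL_BW_GB_S = 100.0     # assumed peak DRAM bandwidth of the socket
HYPOTHETICAL_PER_CORE_BW_GB_S = 15.0   # assumed bandwidth one core can sustain alone

saturating_cores = cores_to_saturate(HYPOTHETICAL_TOTAL_BW_GB_S, HYPOTHETICAL_PER_CORE_BW_GB_S)
speedup_4_cores = expected_speedup(4, HYPOTHETICAL_TOTAL_BW_GB_S, HYPOTHETICAL_PER_CORE_BW_GB_S)
speedup_16_cores = expected_speedup(16, HYPOTHETICAL_TOTAL_BW_GB_S, HYPOTHETICAL_PER_CORE_BW_GB_S)

print(saturating_cores, speedup_4_cores, speedup_16_cores)
```

Under these assumed numbers, adding cores helps until the bus is saturated, and cores beyond that point add nothing.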

This program is memory bandwidth limited. Although multi-threading in the modern processor could hide stalls, I think there will still be significant stalls.

Why would this be bandwidth-bound as a result of our arithmetic intensity?

@noelma, arithmetic intensity is the ratio of arithmetic operations to memory accesses. In this program, 3 out of the 4 instructions are memory accesses (loads / stores), so the processor spends most of its time waiting on memory rather than computing, which is why this program is bandwidth-bound.
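As a rough worked example of that ratio, the sketch below assumes each element costs two loads, one arithmetic operation, and one store on 4-byte floats (matching the "3 out of 4" count above, though the exact program on the slide may differ). The peak compute and bandwidth figures are hypothetical, not taken from any real processor.

```python
def arithmetic_intensity(flops_per_elem, loads_per_elem, stores_per_elem, bytes_per_value=4):
    """Arithmetic operations performed per byte moved to or from memory."""
    bytes_moved = (loads_per_elem + stores_per_elem) * bytes_per_value
    return flops_per_elem / bytes_moved

def attainable_gflops(peak_gflops, bandwidth_gb_s, intensity):
    """Roofline-style bound: limited by either compute or memory bandwidth."""
    return min(peak_gflops, bandwidth_gb_s * intensity)

# Assumed per-element cost: 2 loads, 1 arithmetic op, 1 store.
intensity = arithmetic_intensity(flops_per_elem=1, loads_per_elem=2, stores_per_elem=1)

# Hypothetical machine, for illustration only.
HYPOTHETICAL_PEAK_GFLOPS = 1000.0
HYPOTHETICAL_BANDWIDTH_GB_S = 100.0

bound = attainable_gflops(HYPOTHETICAL_PEAK_GFLOPS, HYPOTHETICAL_BANDWIDTH_GB_S, intensity)
fraction_of_peak = bound / HYPOTHETICAL_PEAK_GFLOPS

print(f"intensity = {intensity:.4f} flops/byte")
print(f"attainable = {bound:.2f} GFLOP/s ({fraction_of_peak:.2%} of assumed peak)")
```

With one operation per 12 bytes moved, the bandwidth term is far below the assumed compute peak, so extra arithmetic units would sit idle.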


Yes, it is, because every element can be processed independently and we are doing the same operation on every element.