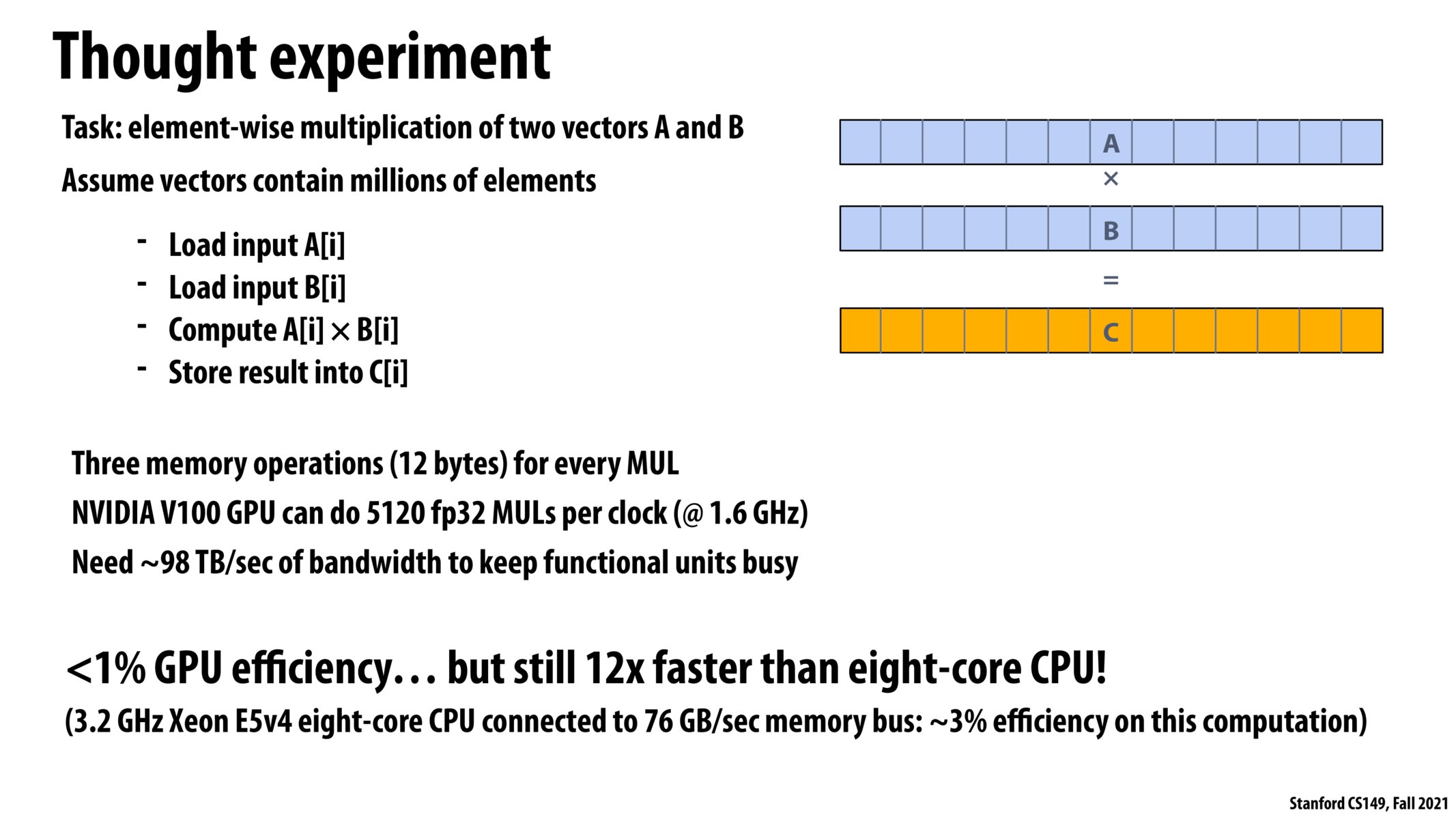

@rahulahoop The memory system on the described GPU can only serve 900 GB/s to the functional units while the specified program would need 98000 GB/s to keep the functional units running at full capacity. Because the computations are going to be limited by this 900 GB/s bottleneck, the functional units are only going to be active ~0.9% of the time.

How do we calculate bandwidth needed? For instance on this page, how do we get 98TB/sec for the program?

@jaez.

-

First compute the number of bytes per operation. Here its 2 floats input + 1 float output, that's 12 bytes.

-

Now compute the number of operations per second. That's number of ALUs x clock rate.

-

The total amount of bandwidth needed to keep all these ALUs running at peak rate is then: ops/sec x bytes/op

Is bandwidth at all limited per processor? Or is overall memory bandwidth the only potential bottleneck?

Seems like we spend all this effort into improving the performance of the hardware, but really tremendous performance gains could be spend investing into the performance of cables for throughput. Is this an active research area? Seems like if we improved 20x, that's much easier than improving the performance of processors by 20x

how would something like apple's unified memory system where the GPU and CPU both share an 8 or 16GB RAM pool affect this type of bottleneck?

How would we go about optimizing a piece of code, like the example above, to use less memory bandwith?

Please log in to leave a comment.

How did we get 1% efficiency again? I got a bit lost during lecture.