To quote from the MapReduce paper (https://static.googleusercontent.com/media/research.google.com/zh-CN//archive/mapreduce-osdi04.pdf),

The MapReduce master takes the location information of the input files into account and attempts to schedule a map task on a machine that contains a replica of the corresponding input data. Failing that, it attempts to schedule a map task near a replica of that task’s input data (e.g., on a worker machine that is on the same network switch as the machine containing the data).

so chunks are replicated in terms of storage but don't cause repeated processing (a waste of computation resources) based on this (the implementation a prod system may make the trade-off and implement something different though).

The quoted content is from 3.4 Locality, and there is one paragraph in section 3.4.

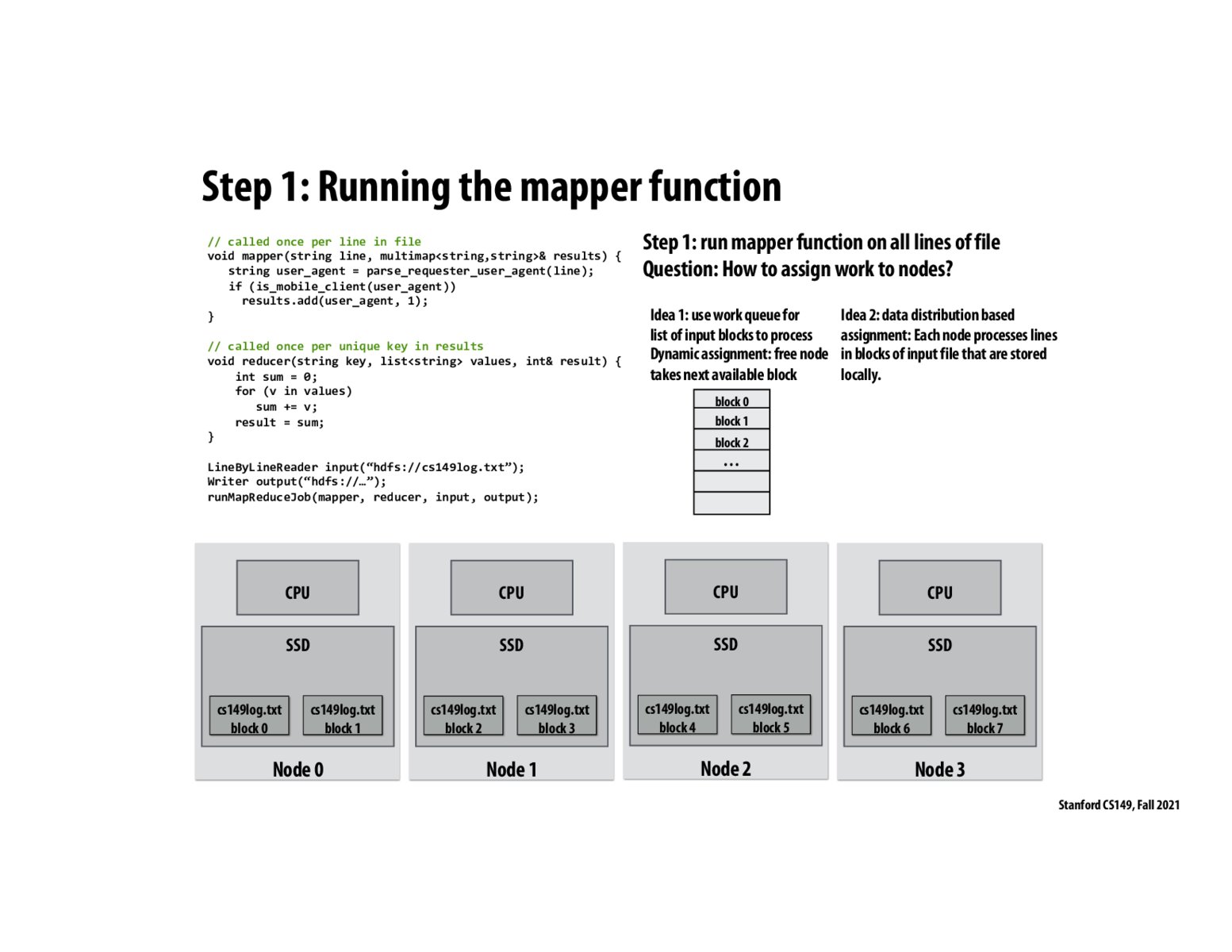

Idea 2 here is probably more desirable since it takes advantage of data locality.

Idea 1 is a bad idea because it would require a lot of network communication between nodes. Idea 2 is better because we could the mapper function where the blocks actually reside.

Please log in to leave a comment.

Does this mean that replicated chunks will be processed multiple times?