@evs I'm not sure what the answer is, but I'd assume that this isn't necessary for all cases – a set is usually implemented as a collection of linked lists (many short linked lists if it's a hashset, one long one otherwise), so I don't see any reason why write-only operations couldn't occur in parallel on different nodes in the linked list. If you have a read-only process and a separate write-only process going, though, you could get race conditions.

I suppose we could also just pad each field within the object, so that reading one in takes exactly one cache line, so we don't have false sharing anymore. That would artificially inflate the object to take up a lot of memory though

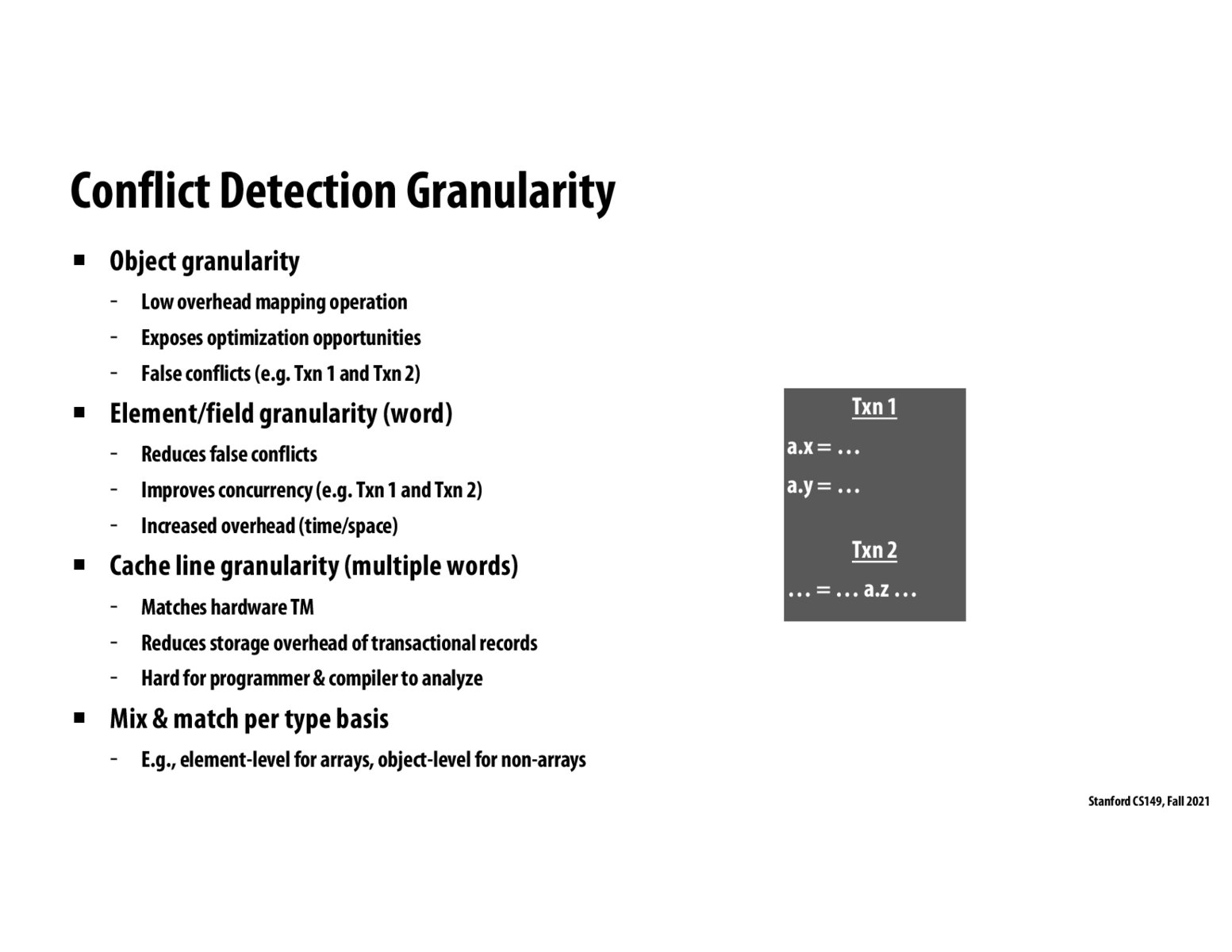

For STM, it is recommended to use object level for non-arrays. However if we have a struct with lots of members like OS kernel structs, then the propensity for false sharing increases quite a bit? So is it on a case-by-case basis then?

Slide synopsis: It's important not to consider the granularity overhead against two granularity sizes, but rather for each the counterfactual tradeoff against the cost of conflicts instead

Please log in to leave a comment.

So dos this come into play for our writing and reading sets? In object granularity if you have two threads working on the same object will one always just stall even if they're working on different parts of the object?