Very simple hardware question -- what's the difference between "CPU", "Processor" and "Core"? It seems this one processor can have multiple execution units (I'm assuming execution units correspond to CPU?), and is a processor the same as a core?

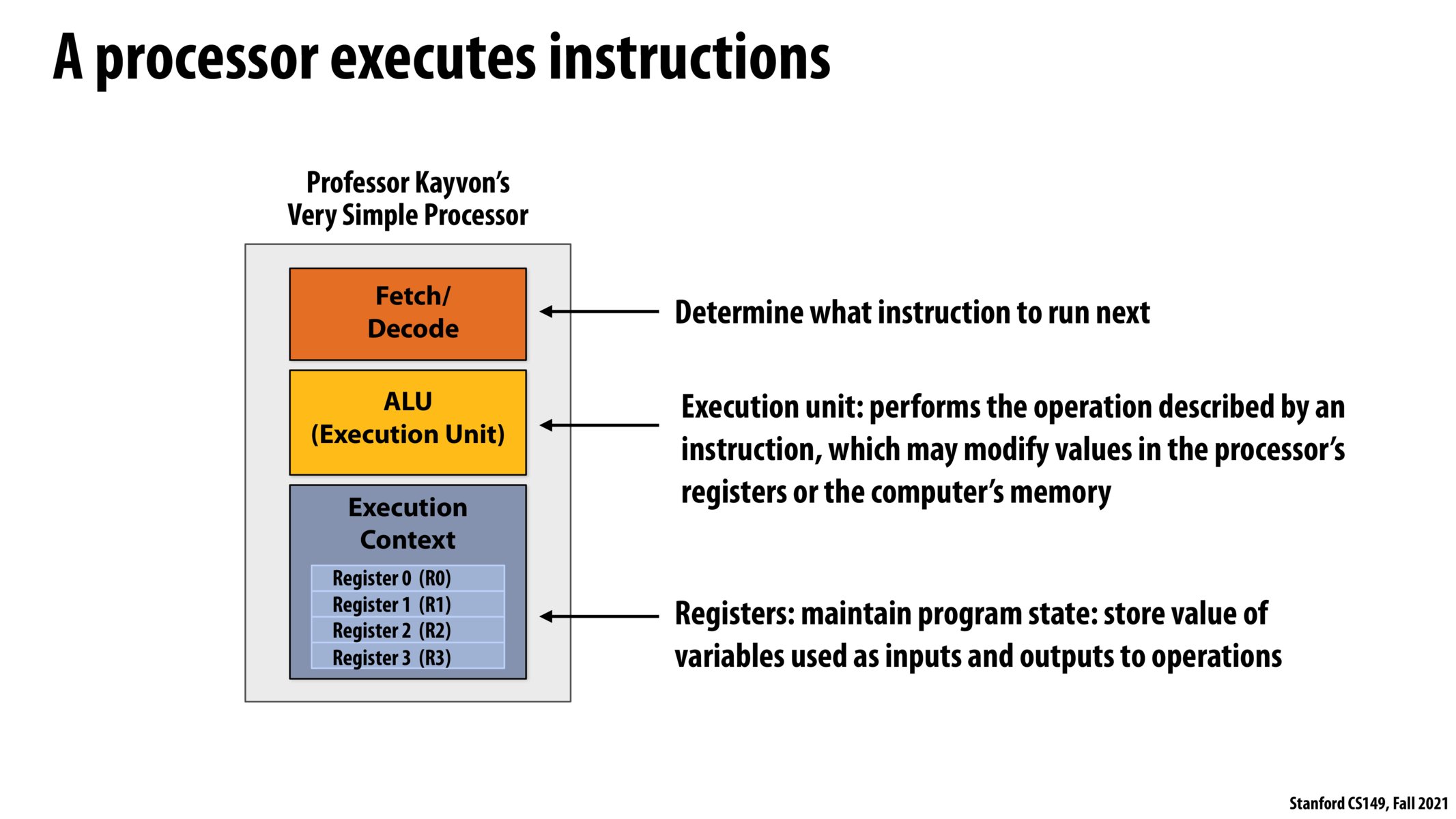

Kayvon said that the execution context is made up of all of the registers (usually more than 4) and memory. Memory and registers are related because the registers sometimes hold virtual memory mappings.

I second the question about the difference between "CPU", "Processor" and "Core". I'm also interested in knowing exactly what distinguishes them.

I think the hierarchy is CPU > Core(s). A CPU can contain one or many core(s). Not sure about 'processor'. It may be a term used to refer to CPU. Can anyone confirm?

+1 to needing help with this CPU vs Processor vs Core question. I feel like even after 107, 110, and 140, this is still completely unclear to me.

This forum seems to talk about this topic in depth:

https://superuser.com/questions/1041370/definition-of-a-processor-vs-core-multiprocessor-vs-multicore

From what I gathered, @noelma is correct here, where CPUs contain cores and processors refer to CPUs and GPUs. The processor handles all instructions in the code while the "cores" themselves are the execution units that Kayvon talks about.

After seeing this slide, I was curious about which components of the processor speed up when increasing clock speed. Is it just the execution unit, or is it also the fetch/decode part?

I might be wrong, but I believe each instruction cycle (fetch-decode-execute) takes a fixed number of clock cycles, and the fetch/decode parts of an instruction are still load/store-type operations, so increasing the clock speed will also increase the speed at which fetch/decode occurs.
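To put rough numbers on that, here is a minimal sketch assuming a simplified model in which the whole core runs off one clock and each stage takes a fixed number of cycles. The cycle counts and the names `STAGE_CYCLES`, `stage_times_ns`, and `instruction_time_ns` are made up for illustration and are not from the lecture.

```python
# Simplified model: fetch, decode, and execute all run off the same clock,
# so each stage's wall-clock time shrinks when the frequency goes up.
# The per-stage cycle counts are hypothetical, chosen only for illustration.
STAGE_CYCLES = {"fetch": 1, "decode": 1, "execute": 1}


def stage_times_ns(clock_ghz):
    """Return the time each stage takes, in nanoseconds."""
    cycle_ns = 1.0 / clock_ghz  # one cycle at f GHz lasts 1/f ns
    return {stage: cycles * cycle_ns for stage, cycles in STAGE_CYCLES.items()}


def instruction_time_ns(clock_ghz):
    """Total fetch-decode-execute time for one instruction, in nanoseconds."""
    return sum(stage_times_ns(clock_ghz).values())


if __name__ == "__main__":
    for ghz in (1.0, 2.0):
        print(ghz, "GHz:", stage_times_ns(ghz), "total", instruction_time_ns(ghz), "ns")
```

Under this model, doubling the clock halves the time of every stage, not just execute. It deliberately ignores main-memory latency, which is not set by the core clock.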

Might a single ALU have multiple execution contexts / sets of registers to allow for context switching (e.g. concurrency)? Or is this handled purely at a memory level with all registers being dumped to / loaded from memory?

Many of these questions are addressed in Lecture 2!

WRT terminology, there's a lot of loose terminology across the industry:

- A multi-core CPU (or GPU) contains multiple cores.

- When you hear "processor", it's often referring to the full CPU. For example, the title of this lecture is "A Modern Multi-Core processor".

- But sometimes you'll hear "processor" refer to one core of a CPU or GPU. For example, an NVIDIA chip has many "streaming multi-processors" (SMs) on it. Unfortunately, you'll just have to infer intent from context.
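If it helps to see the containment relationship written down, here is a rough sketch of the "CPU contains cores, each core has execution units and execution contexts" picture. All class names, field names, and counts (`ExecutionContext`, `Core`, `CPU`, 16 registers, 8 ALUs) are hypothetical and only illustrate the hierarchy; they do not describe any real chip.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ExecutionContext:
    # State for one instruction stream; 16 registers is an arbitrary choice.
    registers: List[int] = field(default_factory=lambda: [0] * 16)


@dataclass
class Core:
    num_alus: int = 1  # execution units in this core
    contexts: List[ExecutionContext] = field(
        default_factory=lambda: [ExecutionContext()]
    )


@dataclass
class CPU:
    # What people usually mean by "processor": the full chip.
    cores: List[Core]


def describe(cpu):
    """Count the cores, ALUs, and execution contexts on the whole chip."""
    return {
        "cores": len(cpu.cores),
        "alus": sum(core.num_alus for core in cpu.cores),
        "contexts": sum(len(core.contexts) for core in cpu.cores),
    }


# A made-up quad-core chip: 8 ALUs and 2 execution contexts per core.
quad = CPU(
    cores=[
        Core(num_alus=8, contexts=[ExecutionContext(), ExecutionContext()])
        for _ in range(4)
    ]
)

if __name__ == "__main__":
    print(describe(quad))
```

The counts are kept separate because cores, ALUs per core, and execution contexts per core can each vary independently.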

The point is that the terminology is less important than the concepts.

I'd prefer you just make sure you understand the types of parallelism here: http://cs149.stanford.edu/fall21/lecture/multicorearch/slide_79

How does the time to fetch data from the execution context compare to the time to fetch values stored in the cache? What are the benefits of one over the other?



ALU stands for "Arithmetic Logic Unit"; it is the unit that performs the actual computation. See https://en.wikipedia.org/wiki/Arithmetic_logic_unit