@rubensl. Yes, please make that assumption at this point. In fact it is typical these days in that many modern processors can perform floating point adds and multiplies with the same throughput -- and even with the same or higher throughput than integer math.

Due to pipeline, it is possible for a processor to complete one instruction per clock (a measure of throughput) while still requiring multiple clocks to complete the instruction. Let's hold this thought until lecture 2.

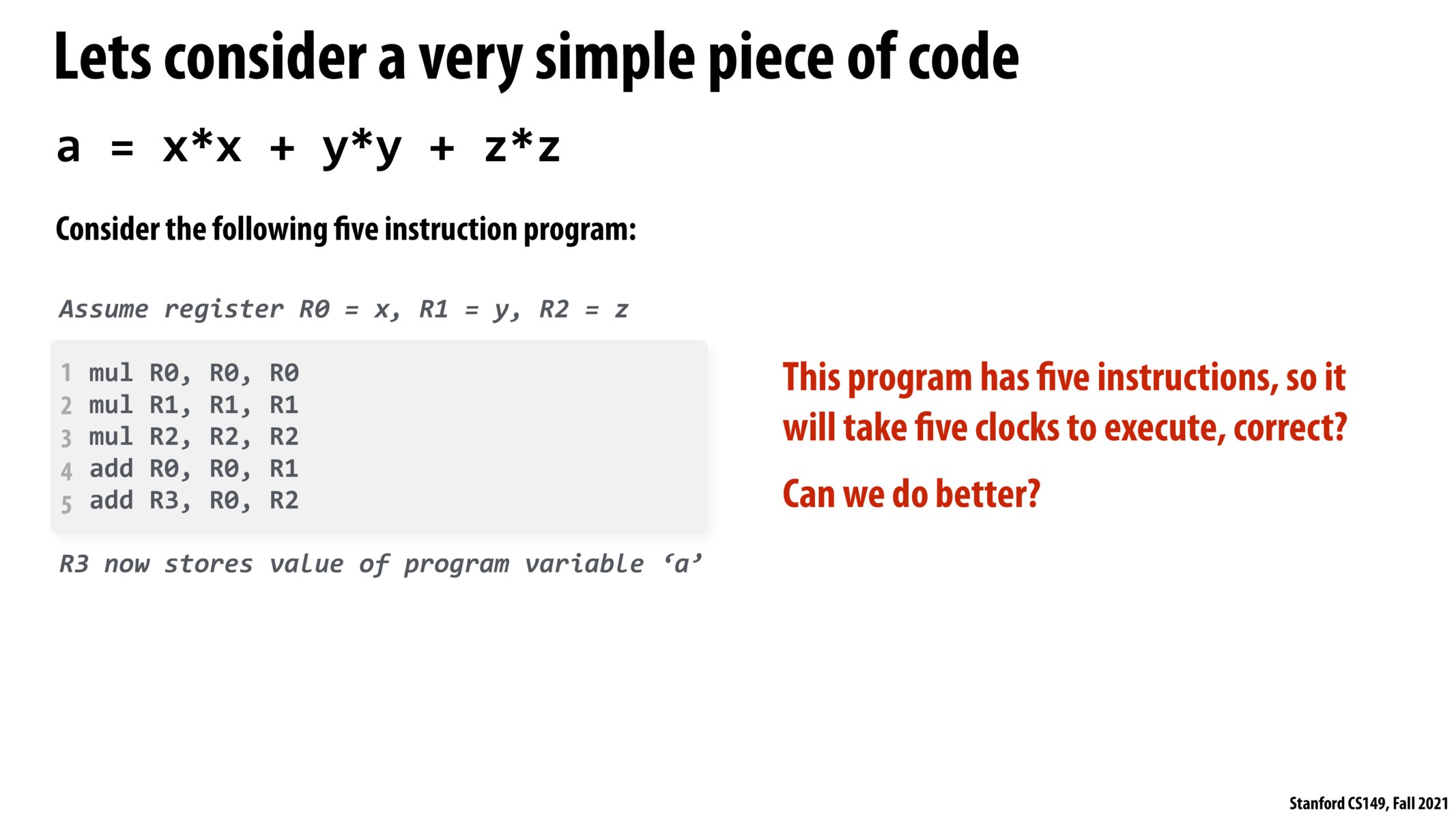

I was a little bit confused about what the 3 registers meant at first. From my research, the first register is the one which stores the value of the computation. For instance, add R0, R0, R1 means to store the addition of R0 to R1 in R0.

@jasonalouda, that's correct.

Im curious how this might many steps a program might take when they get something like this

a = xy b = xy+x*y

I know that a compiler might make a change from b to b = a+a. Does this affect runtime at all of the number of instructions that we might run. What about dependancies? Might it be easier to keep b as its original?

@Abarcat You bring up a good point. What tools (besides manually hardcoding SIMD instructions) do software developers have at their disposal to control how their compiler reorders instructions?

Is there a gcc flag that allows you to say "don't optimize out instructions if it'll improve instruction-level parallelism"?

@riglesias. According to this StackOverflow post, the -O0 signals to the compiler to not optimize your code

@albystein. I'm asking something different. I'm asking if there's a way for the user to change the strategy for compiler optimization. Often, the O3 flag generates the "fastest" code, but how do we know if the compiler takes things like your computer's architecture / degree of ILP into account? Is there a way to specify that in the compilation options?

I know processors can perform "Out-of-Order Execution" independent of the compiler, but I'm asking "can the user ask the compiler to generate code that's easily parallelizable for the given processor"?

Do we have a formal definition of clock? Does all clocks represent equal computation time?

What are the details behind how floating-point add/mul operations are performed? How is the speed of these computations optimized?

@thepurplecow It seems that integer add is much faster than integer multiply, but for floating-point operations multiplication can actually be faster than add because of what can be run in parallel in the implementation details. I got this information from John Collins response on a Research Gate discussion.

Please log in to leave a comment.

Are we making a general assumption here that the add and mul instructions will be executed in the same number of clock cycles or is that typical?