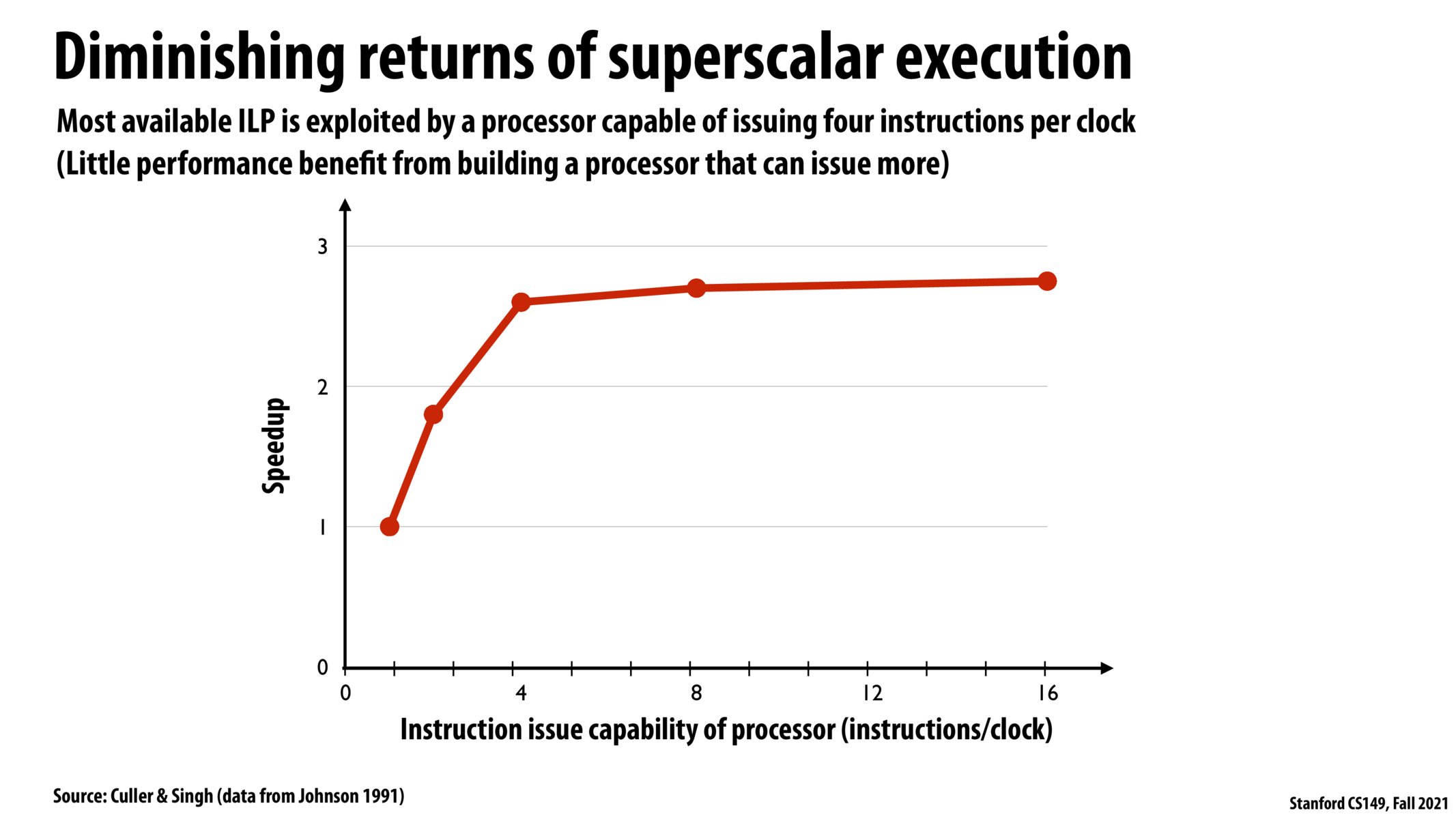

@martigp I believe this is the limiting factor. As we saw in the example in class, at any given level of the dependency DAG, only so many instructions can execute in parallel.
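To make the "levels of the DAG" point concrete, here is a minimal sketch that assigns each instruction to a level (one more than the deepest instruction it waits on), counts how many instructions share each level, and divides the total instruction count by the critical-path length to get an average ILP. It assumes unit-latency instructions and unlimited execution units. The function name `ilp_by_level` and the example expression `(a*b) + (c*d) + (e*f)` are hypothetical, not the DAG from class.

```python
from collections import defaultdict


def ilp_by_level(deps):
    """deps maps each instruction to the list of instructions it depends on.

    Assumes the graph is acyclic, every dependency is itself a key,
    every instruction takes one cycle, and execution units are unlimited.
    Returns (instructions per level, critical-path length, average ILP).
    """
    level = {}

    def depth(instr):
        # An instruction can start one level after the deepest input it waits on.
        if instr not in level:
            level[instr] = 1 + max((depth(d) for d in deps[instr]), default=0)
        return level[instr]

    for instr in deps:
        depth(instr)

    # Count how many instructions could run concurrently at each level.
    width = defaultdict(int)
    for lvl in level.values():
        width[lvl] += 1

    critical_path = max(width)  # deepest level = length of the longest chain
    avg_ilp = len(deps) / critical_path
    return dict(sorted(width.items())), critical_path, avg_ilp


# Hypothetical example: (a*b) + (c*d) + (e*f), summed left to right.
deps = {
    "m1": [], "m2": [], "m3": [],  # three independent multiplies
    "s1": ["m1", "m2"],            # m1 + m2
    "s2": ["s1", "m3"],            # (m1 + m2) + m3
}
widths, cp, avg = ilp_by_level(deps)
print(widths, cp, round(avg, 2))
```

Only the first level is wide (three multiplies); after that the adds form a chain, so the average ILP of this DAG is 5 / 3, no matter how many execution units the core has.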

I was curious whether programs (especially computation-heavy ones) can be restructured and rewritten to expose more ILP, for example as a compiler optimization, but I'm not sure whether that would bring any significant efficiency improvement. I found this interesting tool (and paper) for measuring the ILP of a program and thought it might be a good segue into an optimization like that.
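One well-known restructuring of this kind is splitting a reduction into several independent accumulators (often combined with loop unrolling). The sketch below, assuming unit-latency adds and unlimited adders, shows the restructured sum and a helper that computes its critical-path length. It is written in Python only to model the dependency structure; CPython itself will not overlap these adds. The function names are hypothetical. Note that compilers generally only reassociate floating-point sums like this when allowed to change rounding (e.g., GCC's `-ffast-math`).

```python
def sum_k_accumulators(xs, k=4):
    """Sum xs using k independent partial sums (k >= 1), then combine them."""
    partial = [0] * k
    for i, x in enumerate(xs):
        # Adds into different partial sums do not depend on each other,
        # so a superscalar core could keep up to k of them in flight at once.
        partial[i % k] += x
    # Combine the partial sums pairwise, like a balanced tree.
    while len(partial) > 1:
        partial = [sum(partial[j:j + 2]) for j in range(0, len(partial), 2)]
    return partial[0]


def reduction_depth(n, k):
    """Critical-path length, in dependent adds, of sum_k_accumulators on n items.

    Assumes n >= k >= 1. Each partial sum starts at 0, so its chain has
    ceil(n / k) adds, and the tree combine adds ceil(log2 k) more levels.
    """
    chain = -(-n // k)           # ceil(n / k)
    tree = (k - 1).bit_length()  # ceil(log2 k) for k >= 1
    return chain + tree


xs = list(range(1024))
print(sum_k_accumulators(xs, 4) == sum(xs))
print(reduction_depth(1024, 1), reduction_depth(1024, 4))
```

With one accumulator, 1024 adds form a single 1024-long chain (ILP of 1). With four, the chain shrinks to 256 + 2 = 258 adds, so roughly four adds can be in flight per cycle, at which point the core's issue width, not the code, becomes the limit again.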

Link: https://hal-lirmm.ccsd.cnrs.fr/lirmm-01349703/document

This plot seems to measure the performance of a single program's execution. I'm curious what the optimization problem looks like when multiple programs, each with different ILP, are executed in parallel (e.g., in multiple threads). It's not immediately obvious to me whether the greedy algorithm would be optimal.

@amohamdy A quick look at that paper suggests a maximum ILP of around 12 for the applications the researchers studied. I suspect the plateau is pretty much inevitable; if not at 4, then somewhere close to it.

I wonder what programs would even achieve an ILP greater than 16. If you declared many different variables (20+) and added them in pairs, I suppose the ILP would be large. But well-written code that has to do many things will probably do them in a loop with a simple body, so ILP won't be what speeds it up.


What was the limiting factor that caused the curve to flatten in this instance? Was it code dependencies?