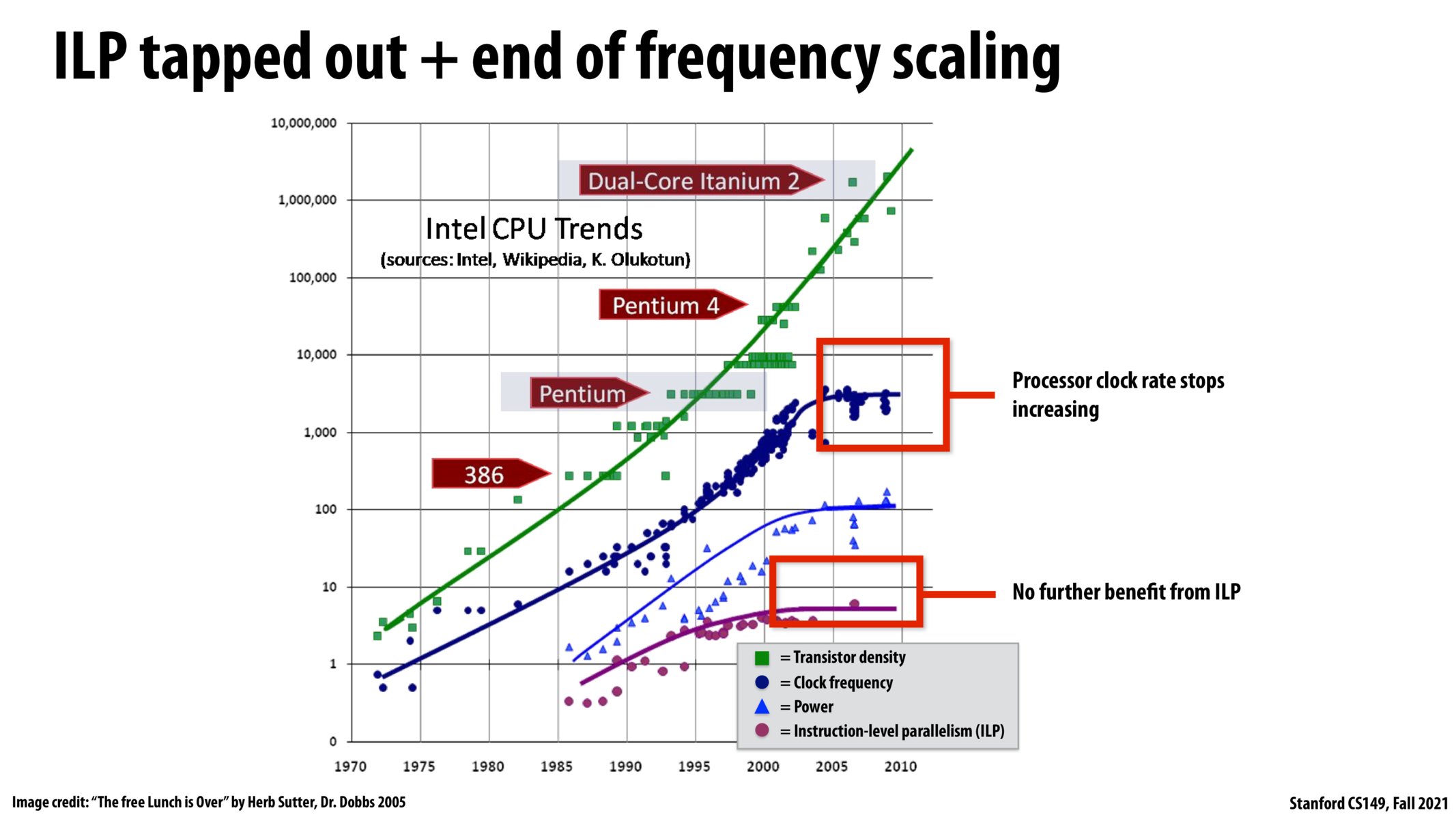

Is there some sort of non-negligible dependence between any of the four quantities plotted here? As in, could a dramatic improvement in one break the plateau in another?

@victor, I believe what this chart is trying to convey is that we can no longer squeeze out more performance by increasing power, clock frequency, or ILP, despite (presumably) Intel's best efforts. I'd be curious what this chart looks like for the last 10 years; I assume the flat trends continue through today.

What work is being done on newer processors today to continue to increase their speed, given these asymptotes? Is the new approach something like Apple's M1, where the entire system is unified onto a single chip to improve things like memory access latency?

What's the y-axis for Power? If it's measuring the power consumed by increasing transistor density over the years, shouldn't we see this trend line keep increasing linearly (on the log scale) instead of flattening out (based on the next couple slides)?

@juliob Going forward, it seems like the general trend for building faster systems is shifting toward more vertically integrated HW/SW stacks, where the HW is built specifically to optimize some of the use cases higher up in the stack (OS and application level). In this regard, Apple has a competitive advantage over general-purpose chip companies like Intel/AMD because they also control the OS and application layer. This article articulates very well why the M1 chip is so fast on macOS: https://debugger.medium.com/why-is-apples-m1-chip-so-fast-3262b158cba2

I think the reasons ILP has tapped out are:

- Programs usually have limited ILP.

- ILP is complex to implement and hard to scale.

- ILP overhead can be significant, especially for branch misprediction.
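To make the first point concrete, here is a rough sketch of how one might estimate the ILP available in a small instruction sequence: divide the number of instructions by the length of the longest dependency chain. This is only an illustration under idealized assumptions (unit latency, unlimited execution units, perfect branch prediction); the function name `ilp_estimate` and the two example dependency graphs are made up for this comment, not taken from the slide.

```python
def ilp_estimate(deps):
    """Estimate ILP as (number of instructions) / (critical path length).

    `deps` maps each instruction name to the list of instructions whose
    results it needs. Assumes every instruction takes one cycle and the
    hardware can issue any number of independent instructions per cycle.
    """
    depth = {}

    def level(instr):
        # Earliest cycle in which `instr` can finish: one cycle after the
        # latest of its inputs (or cycle 1 if it has no inputs).
        if instr not in depth:
            depth[instr] = 1 + max((level(d) for d in deps[instr]), default=0)
        return depth[instr]

    critical_path = max(level(i) for i in deps)
    return len(deps) / critical_path


# a = x*x; b = y*y; c = z*z; d = a + b; e = d + c
# The three multiplies are independent, but the adds must wait for them.
mostly_independent = {"a": [], "b": [], "c": [], "d": ["a", "b"], "e": ["d", "c"]}

# A running sum where each step needs the previous result.
serial_chain = {"s1": [], "s2": ["s1"], "s3": ["s2"], "s4": ["s3"], "s5": ["s4"]}

print(ilp_estimate(mostly_independent))  # 5 instructions over 3 cycles
print(ilp_estimate(serial_chain))        # 5 instructions over 5 cycles
```

Even in the friendlier example the estimate stays below 2, and the serial chain gets no benefit at all from a wider superscalar core, no matter how much hardware is thrown at it.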

As ILP has plateaued, and there does not seem to be a way to overcome the power wall, this seems to suggest that at some point raw computing power will reach its absolute peak. Does this mean that the only work being done on the hardware side is to figure out how to cram more and more cores into a smaller space? Does this have similar power wall implications when the chips get small enough? I've been hearing news recently about Moore's law slowing down, and I'm wondering if it's a function of the power wall, as well as ILP demanding more cores instead of faster processors.
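On the "more cores instead of faster cores" question, a back-of-the-envelope sketch may help. It assumes the usual first-order model that dynamic power per core scales as C·V²·f and that supply voltage has to scale roughly linearly with frequency, so power goes roughly as f³. Both assumptions are simplifications (leakage is ignored, and real voltage/frequency curves are not linear), and the function names are made up for illustration.

```python
def relative_dynamic_power(cores, freq_scale):
    # Assumption: per-core dynamic power ~ C * V^2 * f, with V proportional
    # to f, so per-core power scales as freq_scale ** 3. Leakage is ignored.
    return cores * freq_scale ** 3


def relative_throughput(cores, freq_scale):
    # Assumption: a perfectly parallel workload, so throughput scales with
    # both core count and clock frequency.
    return cores * freq_scale


# Baseline: one core at full clock.
print(relative_dynamic_power(1, 1.0), relative_throughput(1, 1.0))

# Two cores, each clocked at 75% of the baseline frequency.
print(relative_dynamic_power(2, 0.75), relative_throughput(2, 0.75))
```

Under these assumptions, two slower cores use less power than one fast core while offering more peak throughput, but only if the software can actually keep both cores busy.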

Why were the processor clock rates, ILP, and power allowed to flatten out for so many years? I would've expected the Intel folks to see this and correct it as soon as it started to hit the plateau... or did it take them that long to find a solution?