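I'm not sure what cameras actually do, but you can get a sense of focus from the magnitude of the image gradients: sharp, in-focus regions have strong gradients, while blurry regions have weak ones. This gives you a purely digital way of checking what is currently "in focus".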
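
Here is a minimal sketch of that idea in Python with NumPy. It is not what any particular camera does. The names `focus_measure` and `box_blur` are made up for illustration, and the box blur is only a crude stand-in for real defocus.

```python
import numpy as np

def focus_measure(gray):
    """Mean squared gradient magnitude of a 2-D grayscale image.

    Higher values mean stronger edges, which we treat as "more in focus".
    """
    gray = np.asarray(gray, dtype=np.float64)
    gy, gx = np.gradient(gray)  # finite differences along rows, then columns
    return float(np.mean(gx ** 2 + gy ** 2))

def box_blur(gray, radius=2):
    """Crude stand-in for defocus: average over a (2r+1) x (2r+1) window.

    Uses np.roll, so the image wraps around at the borders.
    """
    gray = np.asarray(gray, dtype=np.float64)
    out = np.zeros_like(gray)
    count = 0
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            out += np.roll(np.roll(gray, dy, axis=0), dx, axis=1)
            count += 1
    return out / count

# Synthetic test image: random texture, then a blurred copy of it.
rng = np.random.default_rng(0)
sharp = rng.random((64, 64))
blurred = box_blur(sharp)

sharp_score = focus_measure(sharp)
blurred_score = focus_measure(blurred)
print(sharp_score > blurred_score)  # the sharp image should score higher
```

A camera could evaluate a score like this at several lens positions and keep the position that maximizes it.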

Focus can be adjusted using small motors to move the lens closer to or farther from the sensor (see the thin lens equation: 1/f = 1/S1 + 1/S2).
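
To make the lens equation concrete, here is a small worked sketch. It assumes the usual reading of the symbols (S1 is the object-to-lens distance, S2 is the lens-to-sensor distance) and an ideal thin lens. The function name `image_distance` and the 50 mm example values are mine, not from any real camera.

```python
def image_distance(f_mm, s1_mm):
    """Solve 1/f = 1/S1 + 1/S2 for S2 (lens-to-sensor distance), in mm.

    Assumes an ideal thin lens and an object farther away than the focal length.
    """
    if s1_mm <= f_mm:
        raise ValueError("object must be farther from the lens than f")
    return 1.0 / (1.0 / f_mm - 1.0 / s1_mm)

# Hypothetical 50 mm lens: how far must the lens move to refocus from 2 m to 1 m?
s2_far = image_distance(50.0, 2000.0)   # about 51.28 mm
s2_near = image_distance(50.0, 1000.0)  # about 52.63 mm
print(round(s2_near - s2_far, 2), "mm of lens travel")
```

So under these assumptions the motor only has to move the lens a bit over a millimeter, which is why small motors are enough.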

This process would let you choose your focus without explicitly estimating the depth of an object.

Though honestly, the LiDAR sensor on the new iPhone 12 Pro could definitely help get that distance-to-object value more accurately than a gradient-based digital estimate.

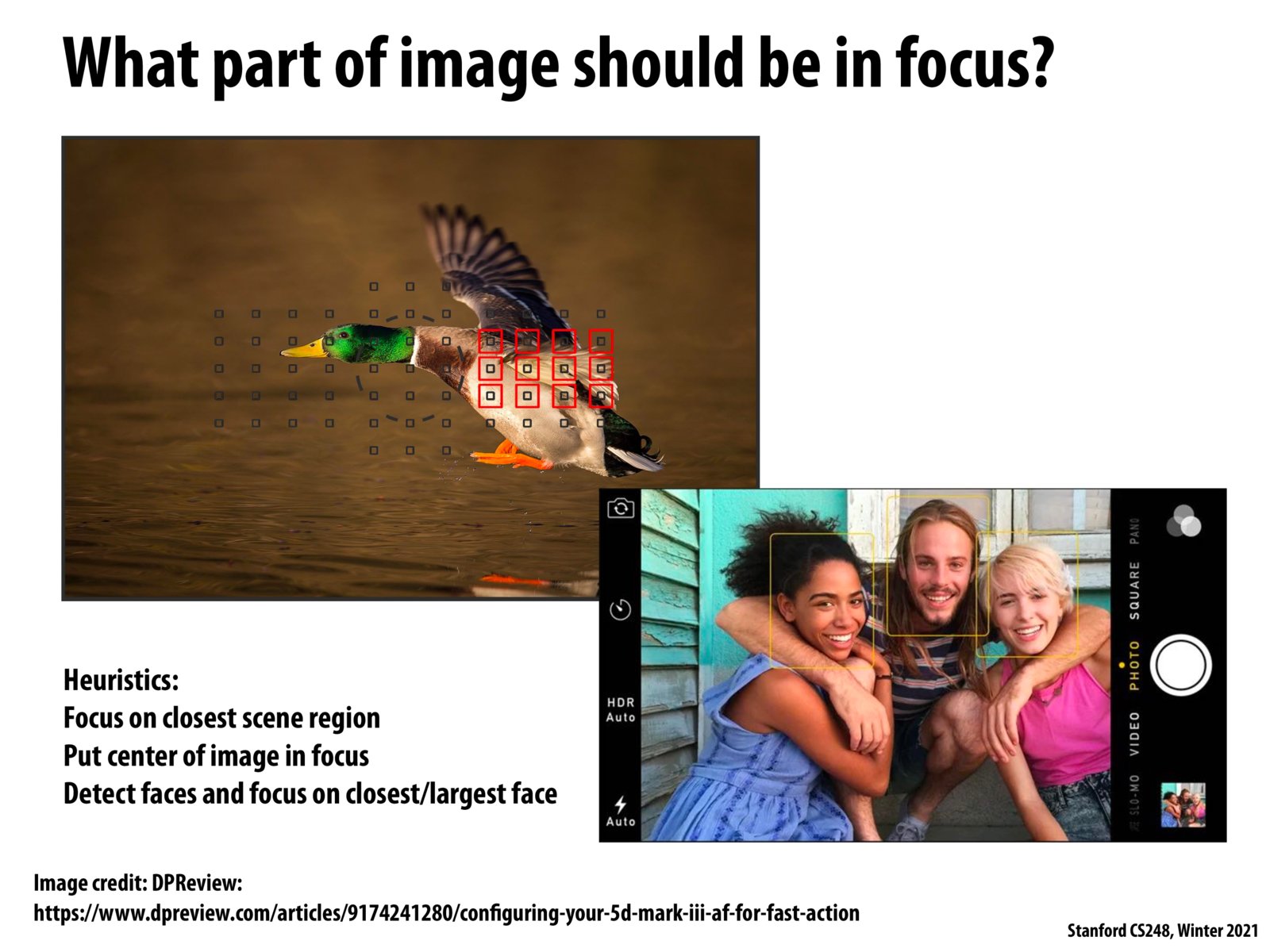

Perhaps some devices (like newer iPhones) have multiple cameras that take pictures of the same thing from slightly different angles, and having those stereo pairs allows us to estimate depth (kind of like how we sense depth better with two eyes as opposed to one eye). With the estimated depths, the algorithm can then choose to focus on the closer objects.
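
As a rough sketch of how a stereo pair gives depth: for two parallel, rectified cameras, the standard pinhole relation is Z = f * B / d, where f is the focal length in pixels, B is the distance between the cameras, and d is the disparity (how many pixels a point shifts between the two images). I don't know what phones actually run. The function names and all numbers below are hypothetical.

```python
def depth_from_disparity(focal_px, baseline_m, disparity_px):
    """Depth in meters from Z = f * B / d (rectified, parallel stereo pair)."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (zero means infinitely far)")
    return focal_px * baseline_m / disparity_px

def closest_object(disparities_px, focal_px, baseline_m):
    """Return (name, depth) of the nearest object, given per-object disparities."""
    depths = {
        name: depth_from_disparity(focal_px, baseline_m, d)
        for name, d in disparities_px.items()
    }
    name = min(depths, key=depths.get)
    return name, depths[name]

# Made-up values: 1000 px focal length, cameras 12 mm apart.
disparities = {"person": 8.0, "tree": 2.0}
name, depth = closest_object(disparities, focal_px=1000.0, baseline_m=0.012)
print(name, depth)  # the larger disparity belongs to the closer object
```

Larger disparity means a closer object, so "focus on the closest thing" amounts to picking the region with the largest disparity.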

It is comparatively easy to tell what should be in focus if the user taps the area of the image they want in focus!


It seems to me that if we want to bring a region of the camera image into focus, we need to know the distance between that region and the lens aperture. If this is the case, do modern digital cameras estimate depth information (z values) in real time? If so, what sort of technology do they use? Stereo cameras? Infrared light?