
jeyla

mapqh

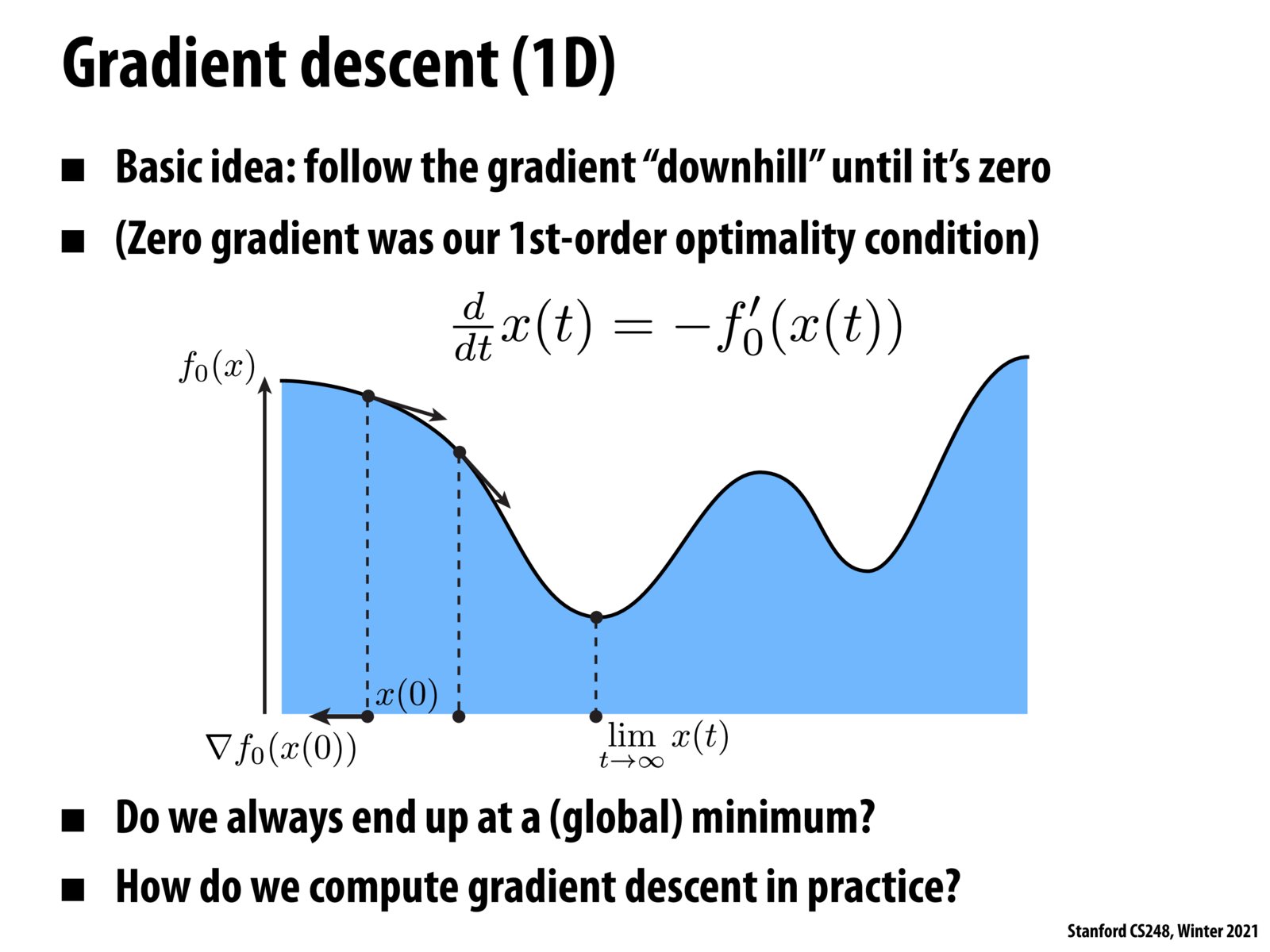

I think Newton's method should also work? https://en.wikipedia.org/wiki/Newton's_method_in_optimization According to Wikipedia, Newton's method uses curvature information (i.e., the second derivative) to take a more direct route to the minimum.

Sneaky Turtle

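For convex functions, gradient descent with a suitable step size converges to a global minimum. For non-convex functions, it is only guaranteed to reach a stationary point, which is typically a local minimum that depends on where you start.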
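To make the local vs. global point concrete, here is a minimal sketch. The function `f`, the learning rate, and the starting points are illustrative assumptions, not something from the lecture. It runs plain gradient descent on a non-convex 1D function from two different starting points.

```python
# Illustrative non-convex function (an assumption for this example):
# f(x) = x^4 - 3x^2 + x has two local minima, one on each side of x = 0.
def f(x):
    return x**4 - 3 * x**2 + x

def grad_f(x):
    # Derivative of f, computed by hand.
    return 4 * x**3 - 6 * x + 1

def gradient_descent(x0, lr=0.01, steps=1000):
    # Repeatedly step against the gradient with a fixed learning rate.
    x = x0
    for _ in range(steps):
        x -= lr * grad_f(x)
    return x

left = gradient_descent(-2.0)   # starts in the left basin
right = gradient_descent(2.0)   # starts in the right basin

print("from x0 = -2:", left, "f =", f(left))
print("from x0 = +2:", right, "f =", f(right))
```

Both runs stop where the gradient is essentially zero, but only the run started on the left reaches the lower of the two minima. The other run gets stuck in the shallower basin.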

clairezb

Newton's method is also a good option for finding minima, but it can be computationally costly because it requires computing the Hessian.
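A rough sketch of the trade-off, assuming a simple 2D quadratic chosen only for illustration (the function, starting point, and learning rate are all hypothetical). Each Newton step solves a linear system with the Hessian, while each gradient descent step only needs the gradient.

```python
# Illustrative convex function (an assumption for this example):
# f(x, y) = (x - 1)^2 + 10 * (y + 2)^2, minimized at (1, -2).
def grad(p):
    x, y = p
    return (2.0 * (x - 1.0), 20.0 * (y + 2.0))

def hessian(p):
    # Constant for a quadratic; in general it depends on p.
    return ((2.0, 0.0), (0.0, 20.0))

def newton_step(p):
    gx, gy = grad(p)
    (a, b), (c, d) = hessian(p)
    det = a * d - b * c
    # Solve H * delta = g for the 2x2 case using Cramer's rule.
    dx = (d * gx - b * gy) / det
    dy = (a * gy - c * gx) / det
    return (p[0] - dx, p[1] - dy)

def gd_step(p, lr=0.04):
    gx, gy = grad(p)
    return (p[0] - lr * gx, p[1] - lr * gy)

def iterations_to_converge(step, start, tol=1e-8, max_iters=10000):
    # Count steps until the gradient norm drops below tol.
    p = start
    for i in range(max_iters):
        gx, gy = grad(p)
        if (gx * gx + gy * gy) ** 0.5 < tol:
            return i, p
        p = step(p)
    return max_iters, p

start = (5.0, 5.0)
newton_iters, newton_p = iterations_to_converge(newton_step, start)
gd_iters, gd_p = iterations_to_converge(gd_step, start)

print("Newton steps:", newton_iters, "->", newton_p)
print("Gradient descent steps:", gd_iters, "->", gd_p)
```

On a quadratic, Newton's method lands on the minimum in a single step, while gradient descent needs many more. The catch is the cost per step: in n dimensions the Hessian has n^2 entries, and solving the linear system with a dense direct solver costs on the order of n^3 operations.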


Copyright 2021 Stanford University

Is gradient descent the only tool available for finding minima of complex/non-convex functions?