As I understand it, the benefit of Spark's RDDs is that they are kept in RAM (rather than being written back to disk / shared memory); since the data is mostly kept locally (except for some operations that need to aggregate data across processors), you benefit from reduced communication costs.

You only need to store the RDDs that are needed for future dependencies in computing the final desired RDD (e.g., the last-computed RDD), and you can discard the other intermediate RDDs without ever writing them to disk. You are indeed correct, however, that if the RDDs are very large (i.e., a large working set), you'll end up needing to write to disk, and much of the benefit of RDDs goes away.
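To make the "discard the intermediates" point concrete, here is a minimal sketch in plain Python (not the Spark API). It assumes a single partition passing through two narrow transformations, and the names `mapped`, `filtered`, and `events` are made up for illustration. Each element flows through both steps before the next one is read, so the intermediate collection never exists as a whole.

```python
# Toy model in plain Python, NOT the Spark API: one partition flowing through
# two chained transformations, processed one element at a time.
events = []  # records the order in which the two steps touch elements

def mapped(partition):
    # Hypothetical "map" step: multiplies each element by 10.
    for x in partition:
        events.append(f"map {x}")
        yield x * 10

def filtered(partition):
    # Hypothetical "filter" step: keeps elements that are at least 20.
    for x in partition:
        events.append(f"filter {x}")
        if x >= 20:
            yield x

partition = [1, 2, 3]
final = list(filtered(mapped(partition)))

print(final)   # [20, 30]
print(events)  # map and filter events alternate, element by element
```

Only `final` is ever held as a full list; the output of `mapped` is consumed as it is produced. This only models the simple case; operations that aggregate across nodes are a different story.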

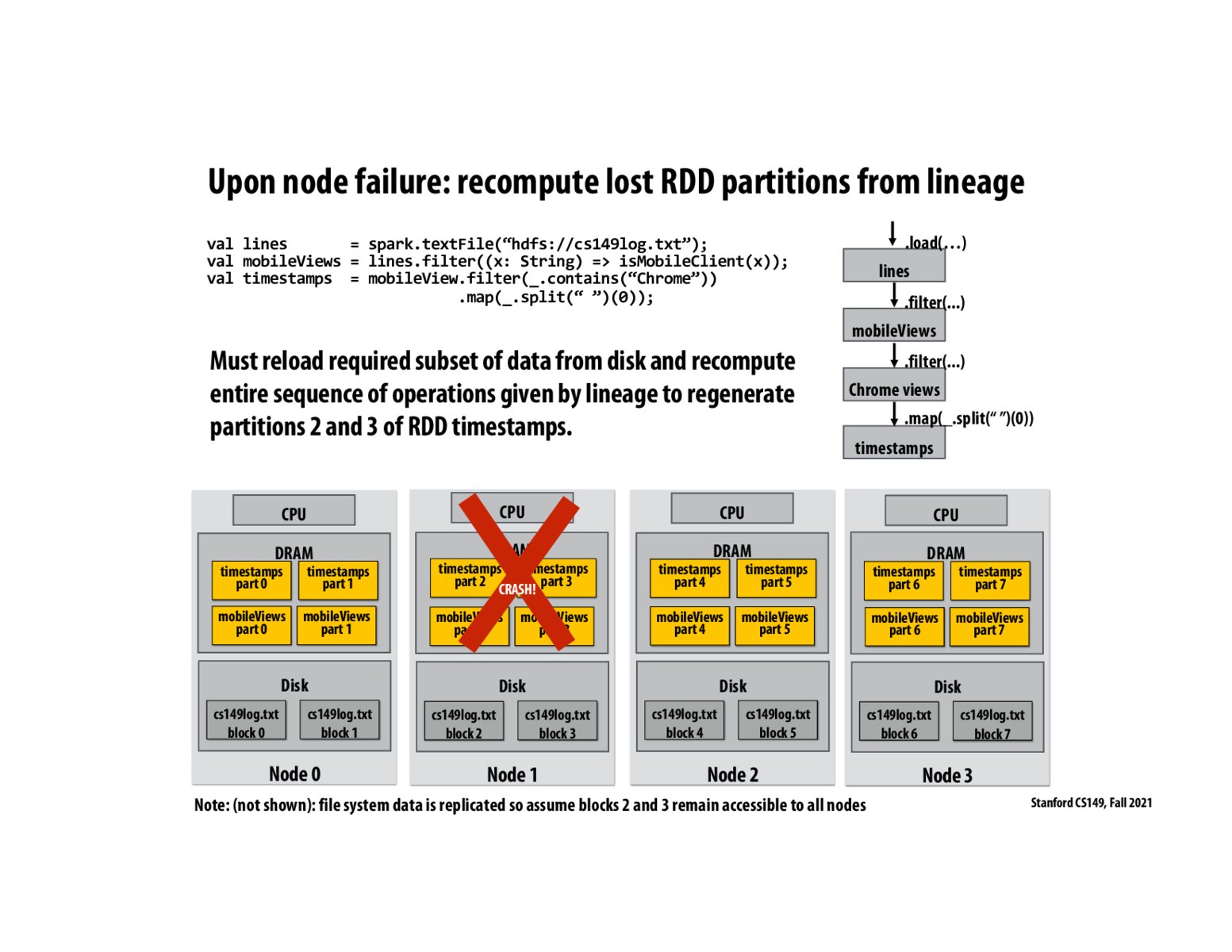

Where's the lineage kept?

@Martingale It's kept on the driver. See https://stackoverflow.com/questions/34713793/where-spark-rdd-lineage-is-stored and https://stackoverflow.com/questions/35410553/how-does-apache-spark-store-lineages

Another main benefit, as I understand it, is that using the lineage allows lazy evaluation.
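Here is a rough sketch of how lineage and lazy evaluation fit together, again in plain Python rather than Spark itself. `ToyRDD` and everything in it is a hypothetical stand-in: each transformation only records its parent and the function to apply, and nothing runs until `collect()` is called.

```python
class ToyRDD:
    """Toy stand-in for an RDD (not Spark's class): stores lineage, computes lazily."""

    def __init__(self, source=None, parent=None, op=None, name="source"):
        self.source = source  # input data, only set on the root node
        self.parent = parent  # the node this one was derived from
        self.op = op          # function from parent iterator to this node's iterator
        self.name = name

    def map(self, f):
        # Records the transformation; f is not called here.
        return ToyRDD(parent=self, op=lambda it: (f(x) for x in it), name="map")

    def filter(self, pred):
        # Records the transformation; pred is not called here.
        return ToyRDD(parent=self, op=lambda it: (x for x in it if pred(x)), name="filter")

    def lineage(self):
        # Walks parent links back to the source and returns the chain in order.
        chain, node = [], self
        while node is not None:
            chain.append(node.name)
            node = node.parent
        return list(reversed(chain))

    def _iterate(self):
        if self.parent is None:
            return iter(self.source)
        return self.op(self.parent._iterate())

    def collect(self):
        # The "action": replays the lineage and materializes the result.
        return list(self._iterate())


calls = []  # tracks when the mapped function actually runs

def square(x):
    calls.append(x)
    return x * x

squares = ToyRDD(source=range(6)).map(square)
evens = squares.filter(lambda x: x % 2 == 0)

calls_before_action = len(calls)  # still 0: nothing has been computed yet
result = evens.collect()          # [0, 4, 16]
print(evens.lineage())            # ['source', 'map', 'filter']
```

Because `evens` only remembers how it was derived, the work happens once, at the action. The same recorded chain is what would let a lost result be recomputed from the source.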


If I remember correctly from lecture, the final desired RDD is created by performing transforms on the data, creating new RDDs along the way rather than modifying the existing data. My question is: what happens to the intermediate RDDs that are needed in order to create our final desired RDD? Perhaps there is some flaw in my understanding, though, and no intermediate RDDs are created on the way to our final RDD? It seems like storing all of these intermediate RDDs after reaching our desired RDD could end up taking a significant amount of storage with really no benefit.