@sareyan my understanding would be yes – I'm not sure how using a GPU would make temporal locality more or less important. Would you be able to share your thoughts?

@sareyan @superscalar apart from discussions of the memory system (e.g. how expensive are cache misses), the issue of locality seems like mostly a question for the caching system. If we have a large enough cache, then we can get away with poor temporal locality because our data will still be in cache many cycles down the line, but if the caches are similarly sized and there aren't any huge differences in internal designs, I imagine the temporal locality issues are similar.

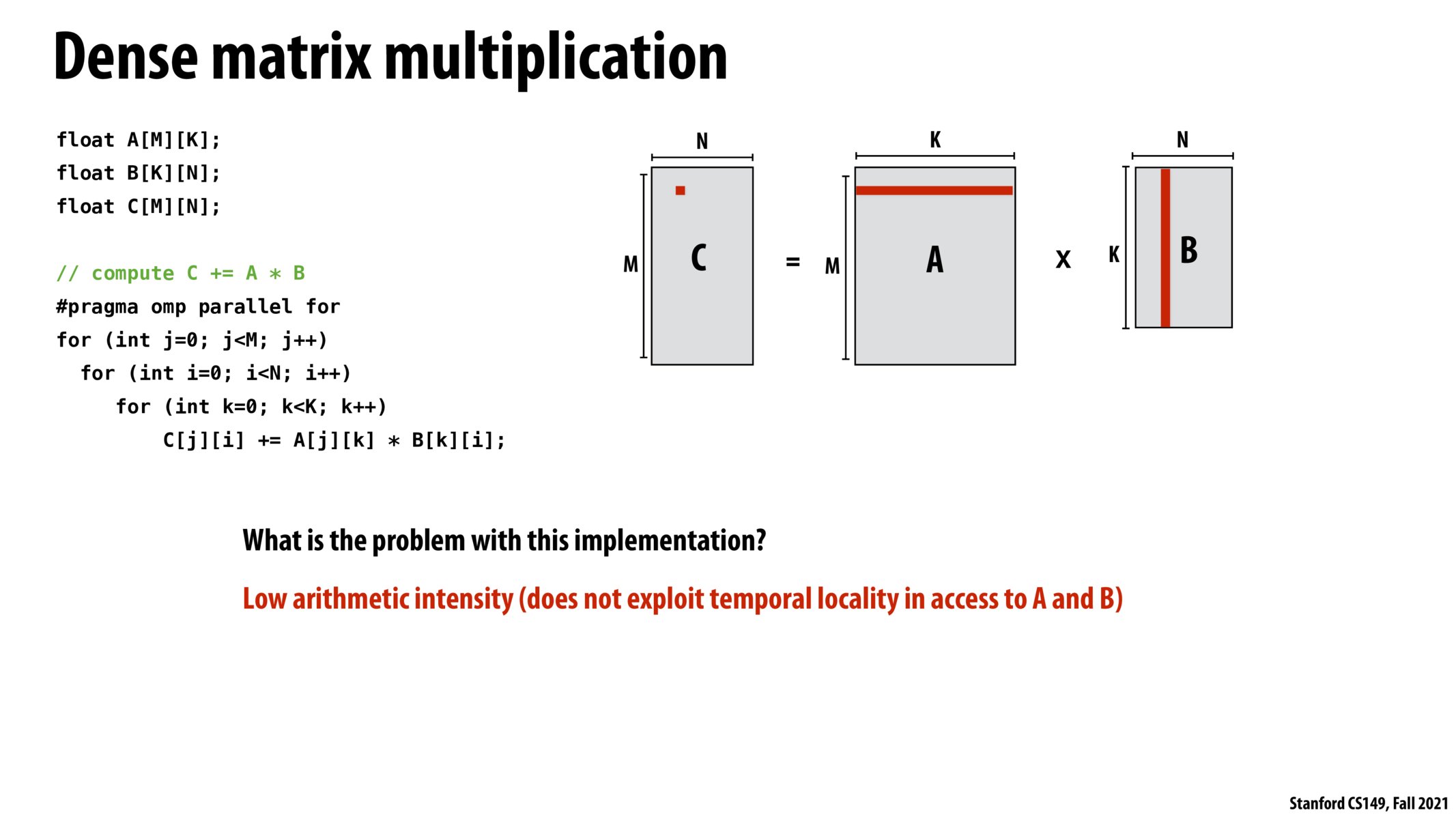

Here is a very interesting idea that is the centrepiece of how Google designed its TPU for DNN training and inference. The major component is a systolic array that performs matrix multiplication. It is essentially a pipelined model which processes a subset of matrix elements at a time, and minimizes the number of memory accesses

Please log in to leave a comment.

For this example, is temporal locality an equally relevant issue if the computation is done on GPUs?