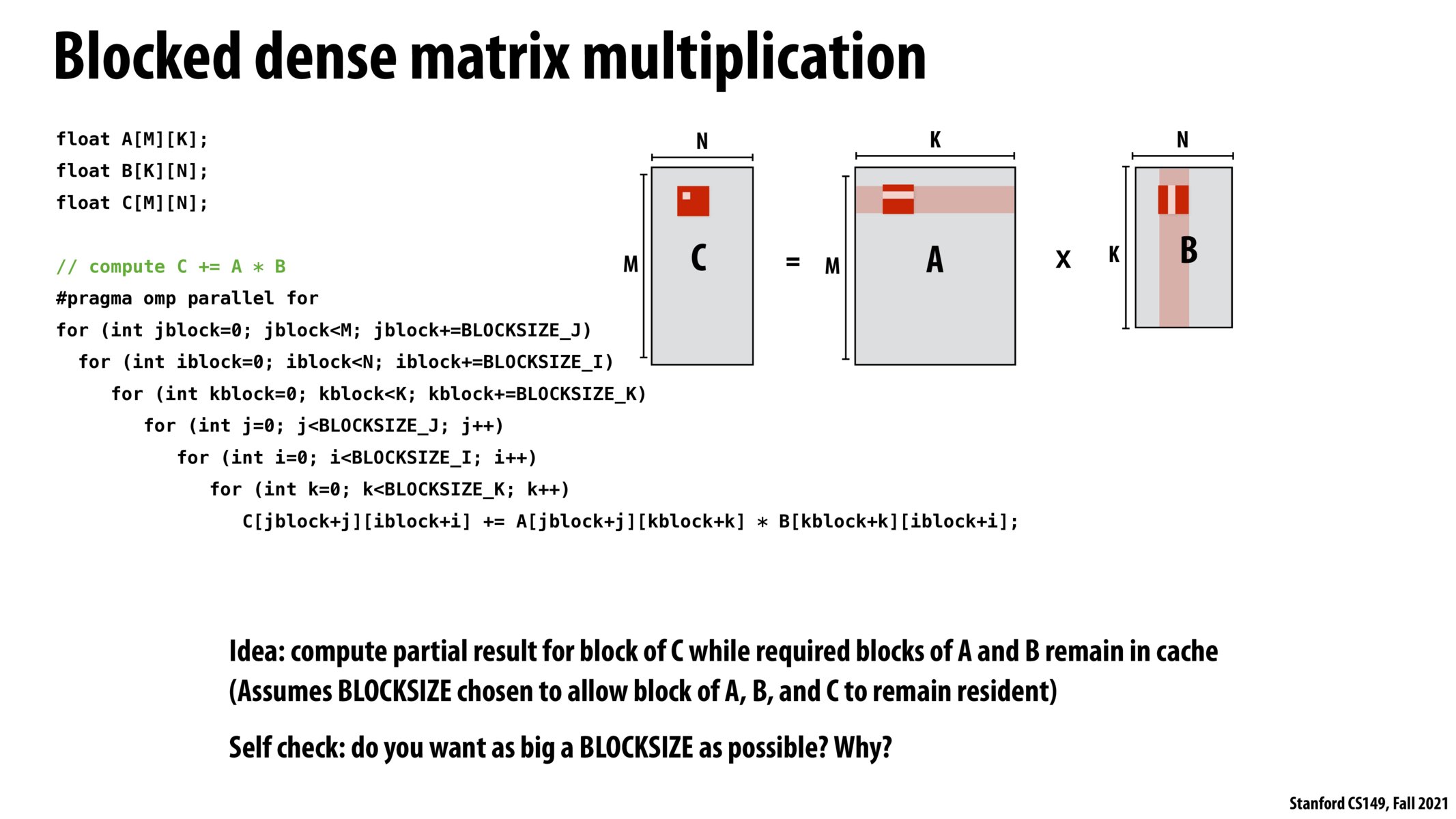

The BLOCKSIZE should fit in the cache, not as big as possible. BLOCKSIZE should be sqrt(M/3), given M is the cache size and we need to load 3 blocks from A, B and C into the cache.

How much more beneficial is this approach over the previous slide's approach? I know that using parallelism helps distribute workload in these chunks, but due to the 3 new layers of nested for loops wouldn't each thread slow down as well? One one hand, seems intuitive to use parallelism and speed up, but on the other hand, we add 3 more nested layers to computer per chunk.

@leo this method improves cache locality while the method in previous slide does not (imagine we have super large matrix that our cache cannot even fit a single row/col)

@yt1118...we only 1 block from B I think. A's is a row sliver which is already contiguous memory and the output write to C is also a row sliver. Basically we do a dot product of A's 1xtilesize sliver with a tilesize x tilesize block of B to store a 1xtilesize into C. So, the entire cache (almost) can be just for a block of B's data. This observation also got validated when I did the optional PA5. I chose a TILE_SIZE that filled up the entire 128KiB L1 data cache size.

since the results eventually need to be reduced, cache locality and the advantages of chunking the input and output only apply to the initial computation step, right?

For the different conv layers, is there a way to handle it such that different predicted conv layers that require more "Computation" get more heavily weighted computation? Because as I currently see it, everything is handled in parallel.

So in this method, would all the data in the "box" be on the same cache line?

Are we just assuming the cache blocks it up like this rather than storing data that lives on the same row? If the cache worked that way, we wouldn't want it block up like this right?

@12345 if the cache doesn't fit a single row or col, why would it be useful to grab data from the next row(s)?

Please log in to leave a comment.

For computing the subblock in C, amount of computation is O(Blocksize ^ 3). The innermost three for loops explains O(B^3). The number of memory operations = (B^2 + B^2 reads from A and B) + (B^2 writes into C) So overall arithmetic intensity is O(B^3)/O(B^2). Bigger the value of B is, more the arithmetic intensity.