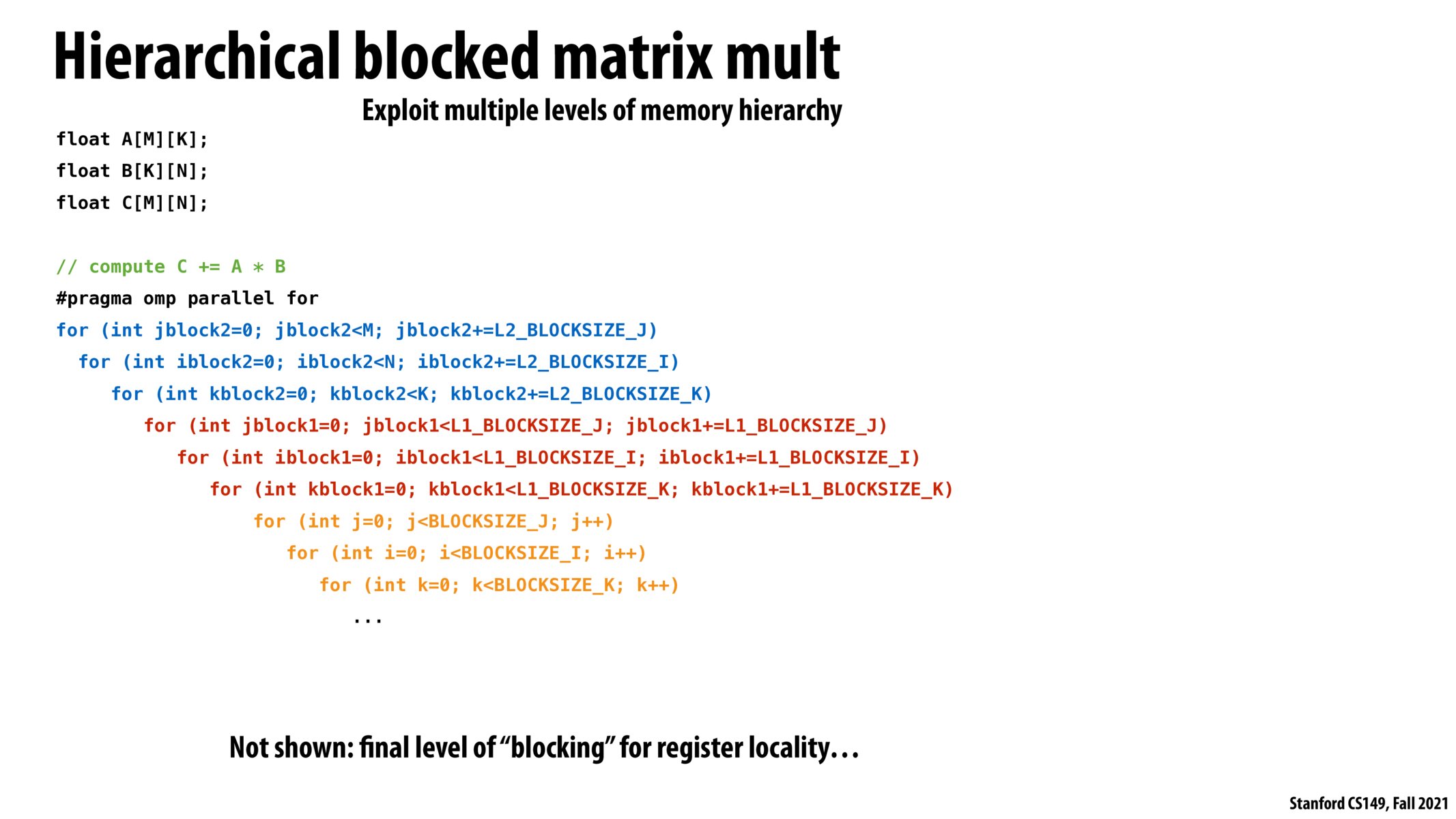

^ So on this point - how exactly would these extra loops ensure that each block is in the respective cache (l1, l2, etc)?

Crazy how much thought goes into optimizing these. But to be honest, this code is really hard to read - we probably should only reserve these types of optimizations to frequently used routines. Matrix multiplication is a good candidate for this.

Is the reason why this will be effective due to the fact that we chunk the matrix for smaller parts, and for each part, for each read, the data will be loaded to both L1 and L2 cache (and in general L2 cache has a larger cache line?), and then when we iterate over the inner matrix, we could use locality that is explored by the L2 cache and therefore read from the L2 cache instead of from the memory?

Meanwhile, won't there be trade-off for such approach since now you will have to aggregate each sub-matrix's value and at the end aggregate to make sure that the final result of the matrix multiplication computes the correct value?

I wanted to mention that in some specialized hardware designs we might also reorder loops to reduce power or optimize bandwidth. Here is a link to a paper where Stanford researchers modified a Halide compiler to generate architectures of varying loop orderings to achieve better energy efficiency: https://arxiv.org/pdf/1809.04070.pdf%3E.

One reason this nested looping structure is effective is that greatly improves cache locality @juliob. The reason for this is that when you iterate sequentially through a matrix, at some point, you'll reach your cache's capacity and start evicting old entries.

This is improved with blocking, as you have a greater emphasis on re-using data, but this nested-blocking is even better, as you essentially fit as much relevant data into your cache and work with it until you don't need it anymore, which is much more effective than the single blocking. You don't "need" to ensure the blocks will be in your cache, the structure of your code will do that for you in the case of nested blocking.

Please log in to leave a comment.

Counter-intuitively: More loops == Higher performance