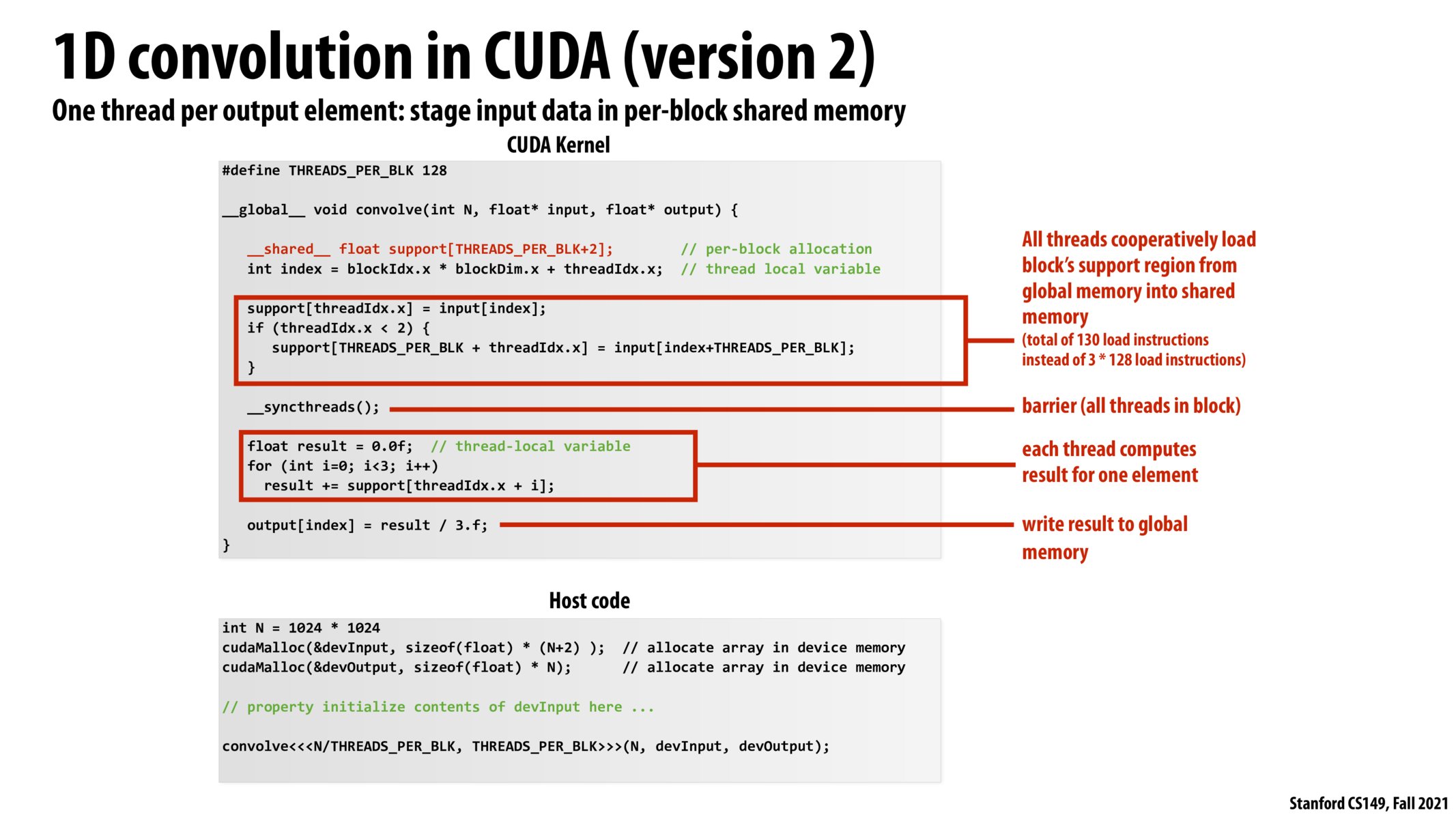

Sorry, markdown syntax error, meant to be "3 x 128 = 384 loads per block ... 130 * sizeof(float)=520 bytes..."

Are sync_threads for all threads across all blocks or is it more fine grained - that is, 1 sync_thread per each block of threads? It wasnt clear to me. Also, is there a use case for a sync_thread at a block level? For example we might want to schdule dependent tasks on separate blocks and then we would want to call sync_threads at a block level?

The way a block's shared memory is more "local", and in particular faster to access, vaguely reminds me of NUMA. I wonder if there are deeper parallels between the two. As an example, maybe there is an interconnect hierarchy that splits up the GPU's resources into local pools/domains, and accessing resources from a remote pool would be more expensive.

@shivalgo __syncthreads() is a block level synchronization barrier, i.e. it waits until all threads in a block reach the barrier. The discussion here also has some use cases of when it is useful: https://stackoverflow.com/questions/15240432/does-syncthreads-synchronize-all-threads-in-the-grid.

Seeing how moving from the CPU to the GPU and CUDA and having similar abstractions / concepts like barrier - __syncthreads() yields that abstraction thinking is important in terms of parallel computing - in my opinion

It seems to me this sort of detail is something we'd normally leave to some low-level caching implementation, with the logic that it can reason about this better than us based off the hardware. We'd maybe change the code to work around this caching algorithm, but not handle it ourselves. Is there a reason we can't do that here?

@timothy, Kayvon mentioned in lecture that there could be a caching algorithm to handle the fetching and storage of this data (for version 1). I believe the benefit of being so prescriptive in version 2 stems from the fact that we know exactly how we are going to use the data and the granularity at which we'll need it. A caching algorithm needs to be general and usable by several different use cases, so it might decide to fetch/store our data in ways that are suboptimal to this particular case, because it does not as much information about the application as the programmer does.

A CUDA kernel will never involve a load from CPU main memory, right? I assume the only boundary between CPU and GPU memory happens in host code.

@pinkpanther, yes, we are assuming that the CPU and GPU memory addresses are separate and that they use message passing-like communication primitives to communicate.

here, we can see the advantage of the CUDA block abstraction because it allows us to share memory across multiple threads. in the previous example, we saw that there were overlapping reads (redundant memory calls). Here, per block, we load only the memory we need, wait until the operation is finished across all threads in the block (__syncthreads()), and then continue with the program.

Please log in to leave a comment.

From lecture: the reason we might want to write it this way with shared memory is to reduce redundant memory reads. The example in the previous slide reads 3 elements from

inputfor each of the 128 threads for 3128=384 loads per block, whereas this example preloads just the 130 elements ofinputinto 130sizeof(float)=520 bytes of low-latency shared memory before reading 384 times from that shared memory. The shared block memory is super duper fast in modern GPUs, however (like faster than L2 cache-fast), so in effect we reduced our memory bandwidth cost from 384 loads to just 130 loads (295% improvement), increasing our arithmetic intensity.