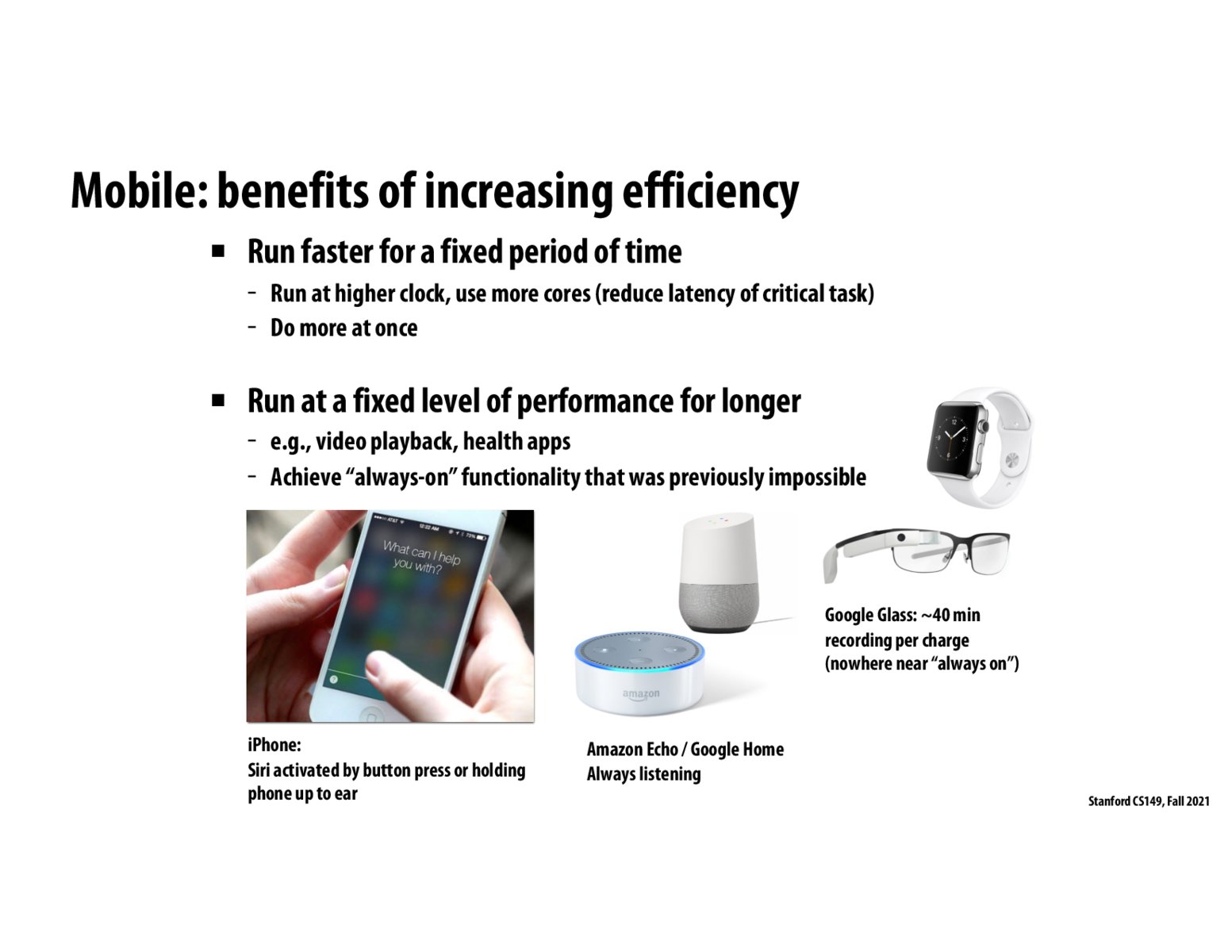

I never thought about how devices such as an iPhone are constantly running and waiting to hear "hey Siri". I was also fascinated that this implies the device must run at a fixed level of performance for a long period of time while saving as much energy as possible.

How exactly do devices have specialized hardware for the "hey Siri" task? What would the chip be doing that is specialized for this? Also, since we're talking about NLP and machine learning, does this mean that if the algorithms get better, the chip cannot improve and is stuck with the performance it was built with?

@derrick, I wonder if Apple uses an FPGA in this circumstance or if that would be overkill. It seems more likely that Apple relies on turnover as a result of planned obsolescence (get your iPhone 13~!!!!!1`1!1!)

The takeaway here is that specialized hardware can be used to perform difficult tasks, such as listening for "Hey, Siri" in the case of an iPhone. Similar to other comments, I wonder how these chips are built; nonetheless, this is a good example of the power constraint from previous slides.

Was there a specific breakthrough that made the "always on" functionality possible? Going back to the power constraint slide, was there some revolutionary optimization such that the same number of operations could be performed with less power? Or was this something that gradually got better over time?

I find it interesting that, for example, Apple devices have a dedicated piece of hardware specifically designed to listen for "hey Siri", which then wakes up the rest of the device's hardware as needed.
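
To make the "small always-on part wakes the big part" idea concrete, here is a toy sketch in Python. It is only an illustration under loud assumptions: `MainProcessor`, `frame_energy`, `always_on_listener`, and the `threshold` value are all hypothetical names made up for this comment, and a simple energy threshold stands in for whatever detector real hardware runs (which would presumably be a trained model, not a loudness check). It is not a description of Apple's actual design.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class MainProcessor:
    """Hypothetical stand-in for the power-hungry part of the device."""
    awake: bool = False
    wake_count: int = 0

    def wake(self) -> None:
        # In a real device this would power up the main CPU; here we just record it.
        self.awake = True
        self.wake_count += 1


def frame_energy(frame: List[float]) -> float:
    """Mean squared amplitude of one audio frame (0.0 for an empty frame)."""
    return sum(s * s for s in frame) / len(frame) if frame else 0.0


def always_on_listener(
    frames: List[List[float]],
    main: MainProcessor,
    threshold: float = 0.1,  # assumed value, chosen only for this example
) -> Optional[int]:
    """Run a cheap check on every frame; wake `main` only when it triggers.

    Returns the index of the frame that triggered the wake-up, or None.
    """
    for i, frame in enumerate(frames):
        if frame_energy(frame) >= threshold:
            main.wake()
            return i
    return None


if __name__ == "__main__":
    quiet = [0.01, -0.01, 0.01, -0.01]
    loud = [0.5, -0.5, 0.5, -0.5]
    cpu = MainProcessor()
    print(always_on_listener([quiet, quiet, loud, quiet], cpu), cpu.awake)
```

The point of the structure is that the per-frame check is deliberately cheap, so the expensive component stays asleep unless the cheap one sees something worth a closer look.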