
abraoliv

riglesias

@abraoliv You're correct! FPGAs are usually programmed in a hardware description language such as Verilog. In Verilog (in my experience from EE108 and EE180), you have to write your code as if all of it runs concurrently, with a granularity of one clock cycle, so you need to take a LOT of care that it never ends up in an invalid state.

From the Wikipedia page of Verilog:

Sequential statements are placed inside a begin/end block and executed in sequential order within the block. However, the blocks themselves are executed concurrently, making Verilog a dataflow language.

The takeaway here is that, even though Verilog may use the hardware better, it is a significant step up in complexity.
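To make the "blocks run concurrently" point from the quote concrete, here is a minimal Python sketch rather than real Verilog. It assumes two hypothetical clocked blocks, one assigning `a <= b` and the other `b <= a`. The names `clock_tick` and `sequential_tick` are made up for illustration. The first models one clock edge, where every block reads the old values and all updates land together. The second shows what the same two statements do in an ordinary sequential program.

```python
def clock_tick(state):
    """Model one clock edge: every block reads the OLD state,
    then all updates are committed together."""
    return {
        "a": state["b"],  # hypothetical block 1: a <= b
        "b": state["a"],  # hypothetical block 2: b <= a
    }


def sequential_tick(state):
    """The same two assignments run one after another, as in software."""
    s = dict(state)
    s["a"] = s["b"]  # a is overwritten first...
    s["b"] = s["a"]  # ...so b just reads the new value of a
    return s


start = {"a": 0, "b": 1}
concurrent = clock_tick(start)        # the two values swap
sequential = sequential_tick(start)   # both end up holding the old b

print("concurrent:", concurrent)
print("sequential:", sequential)
```

Under the concurrent model the two registers swap, while the sequential version loses the old value of `a`. Having to think about every block updating at once is the kind of extra care described above.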


Copyright 2021 Stanford University

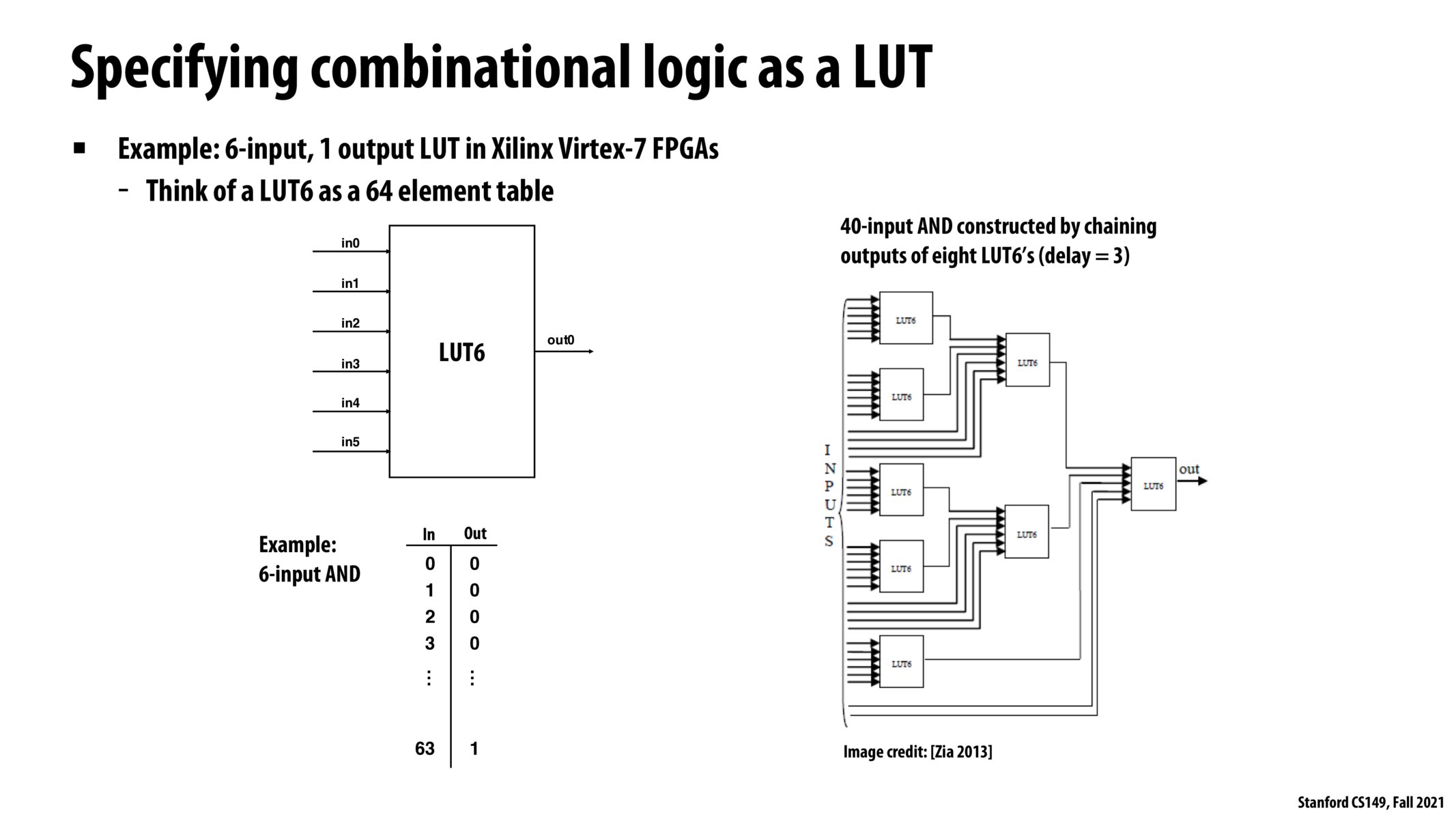

I see that there is a note for the "delay" of the 40-input AND, which seems to be the depth of the dependency DAG. When programming FPGAs, I assume this is something the programmer needs to be concerned about for every operation. Is there a global clock that ticks uniformly, so that this operation takes 3 time steps? Do common programming patterns require you to use "wait"s when one operation takes longer than another?
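As a rough check on the "depth of the dependency DAG" reading, the sketch below counts the levels in a tree of AND gates that reduces 40 inputs to one output. The slide's building block is not shown here, so the per-gate fan-in of 4 is an assumption, and `and_tree_depth` is a hypothetical helper, not something from the lecture.

```python
def and_tree_depth(num_inputs, fan_in):
    """Number of gate levels needed to AND num_inputs signals together
    when each gate accepts at most fan_in inputs."""
    if fan_in < 2:
        raise ValueError("fan_in must be at least 2")
    depth = 0
    signals = num_inputs
    while signals > 1:
        # Each level groups the remaining signals into gates of size fan_in.
        signals = -(-signals // fan_in)  # ceiling division
        depth += 1
    return depth


# Assumed fan-in of 4: 40 -> 10 -> 3 -> 1 signals.
depth_4 = and_tree_depth(40, 4)
# A fan-in of 6 (another assumption): 40 -> 7 -> 2 -> 1 signals.
depth_6 = and_tree_depth(40, 6)

print(depth_4, depth_6)
```

Both assumed fan-ins give a depth of 3, which matches the delay noted on the slide if that delay is counted in gate levels.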