Similar question: does the organization of data in the cache or RAM affect latency, given that these kinds of storage are random access? As I understand it, performance on traditional mass storage devices can be affected by data organization because the physical read/write head needs to jump around between dispersed data.

How does the access time for data on a hard drive compare to the access time for RAM?

I know that there are several different ways to make data reads faster. Is it possible to read data from memory in parallel, or can you only perform data reads one at a time? In other words, can RAM be parallelized?

@A Bar cat -- you are describing increasing the "width" of the communication channel to memory to transfer more bits at a time. But keep in mind that this change will increase the bandwidth of the memory system, not necessarily decrease the latency of data access.
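To make the bandwidth versus latency distinction concrete, here is a minimal sketch of a simple cost model: total time = fixed latency + bytes / bandwidth. The function name `transfer_time_ns` and all of the numbers are hypothetical and chosen only for illustration; real memory systems are more complicated than this.

```python
def transfer_time_ns(num_bytes, latency_ns, bytes_per_ns):
    """Simple model: fixed access latency plus time to stream the bytes."""
    return latency_ns + num_bytes / bytes_per_ns


# Hypothetical numbers, for illustration only.
LATENCY_NS = 100.0

# Fetch 64 bytes over a channel that moves 8 bytes per nanosecond.
narrow = transfer_time_ns(64, LATENCY_NS, bytes_per_ns=8.0)

# Double the channel width: 16 bytes per nanosecond, same latency.
wide = transfer_time_ns(64, LATENCY_NS, bytes_per_ns=16.0)

print(narrow)  # 108.0
print(wide)    # 104.0
```

In this model, doubling the width halves only the streaming term (8 ns becomes 4 ns). The 100 ns latency term is untouched, so a small request barely gets faster, while a large transfer would see close to a 2x improvement.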

See more in lecture 3 here: http://cs149.stanford.edu/fall21/lecture/progmodels/slide_21

@shreya_ravi @awu Data organization in RAM does affect the throughput of data from memory (though not really the latency of a single access), because the cache pulls in memory a whole line at a time (64-byte lines are pretty typical these days) regardless of which byte in that line you actually requested. For example, if I load from address 23, the cache brings in everything in the range 0-63. So grouping (and properly aligning!) data that is used together can in some situations get you your data faster, although such methods can be rather finicky to work with. Beyond the spatial organization of memory, there's also the temporal organization of memory accesses: the CPU cache evicts a line when it needs room for new data and predicts that the line won't be needed soon. Grouping accesses to the same data close together in time can therefore decrease effective memory latency, because it's more likely that the data is still in cache, which has a much lower latency than RAM. For the second part of your question, @awu, I'd say pretty confidently that RAM doesn't have the same kind of slowdown from random access that a hard drive does, or that if it does, it's overshadowed by other factors (latency, cache behavior, etc.). @platypus RAM is much faster: a DRAM access takes on the order of 100 ns, while a hard drive seek takes several milliseconds, so the gap is roughly four to five orders of magnitude.
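The cache-line arithmetic above can be sketched in a few lines. This is only a model of which lines a set of byte addresses falls into, assuming 64-byte lines; the helper names `cache_line_range` and `lines_touched` are made up for this example, and it does not measure real hardware.

```python
LINE_SIZE = 64  # assumed cache line size in bytes


def cache_line_range(address, line_size=LINE_SIZE):
    """Return the (first, last) byte address of the line containing address."""
    start = (address // line_size) * line_size
    return start, start + line_size - 1


def lines_touched(addresses, line_size=LINE_SIZE):
    """Count the distinct cache lines covered by a set of byte addresses."""
    return len({addr // line_size for addr in addresses})


# Loading address 23 brings in the whole line 0-63.
print(cache_line_range(23))  # (0, 63)

# Sixteen 4-byte values packed together starting at address 0: one line.
packed = range(0, 16 * 4, 4)
print(lines_touched(packed))  # 1

# The same sixteen values spread 64 bytes apart: one line each.
scattered = range(0, 16 * 64, 64)
print(lines_touched(scattered))  # 16

# 64 contiguous bytes that start at address 32 straddle two lines,
# which is why alignment matters as well as grouping.
unaligned = range(32, 96)
print(lines_touched(unaligned))  # 2
```

Fewer lines touched means fewer transfers from memory for the same amount of useful data, which is where the throughput benefit of grouping and aligning comes from.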


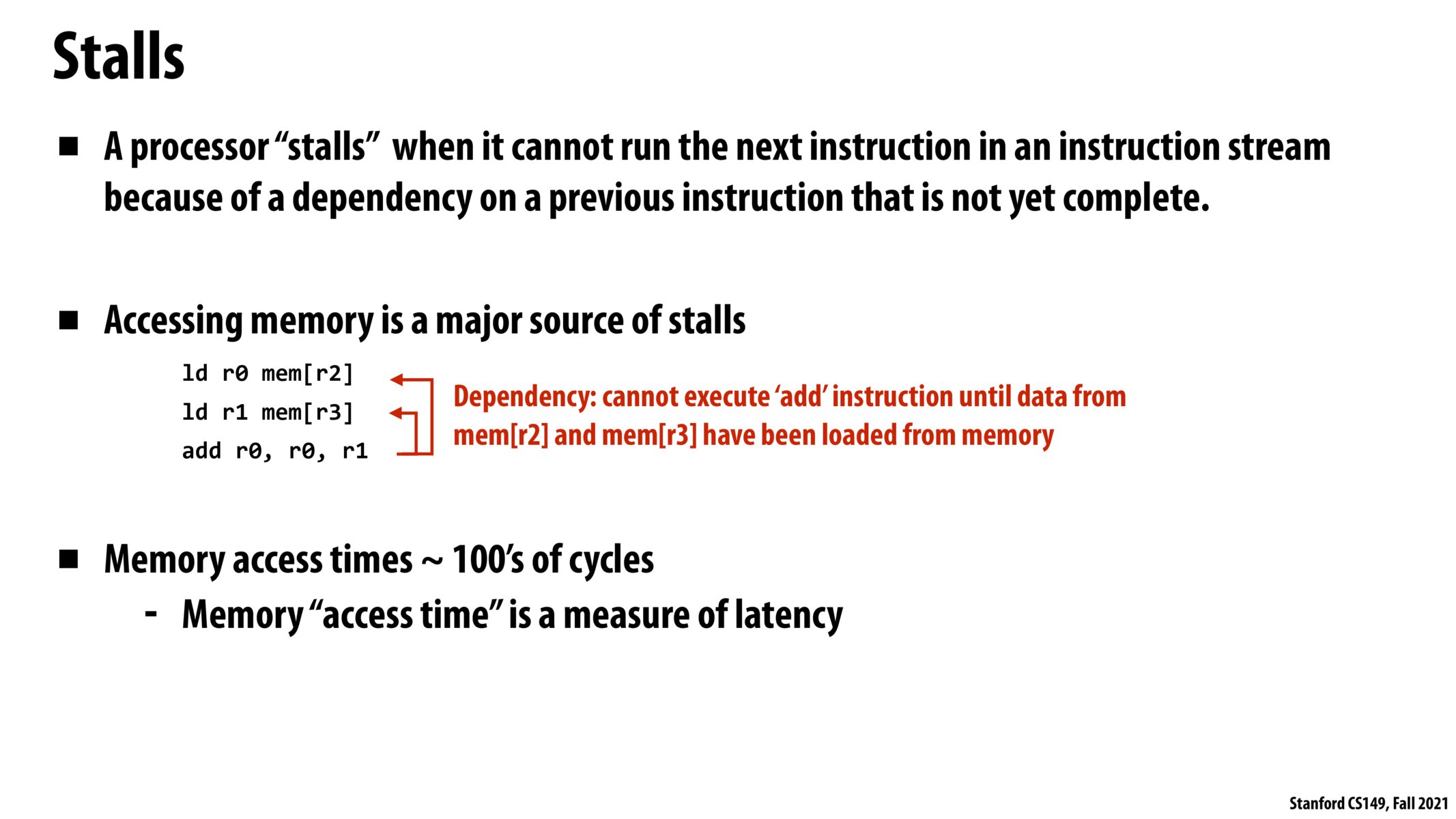

Does latency of loads increase linearly with the number of loads? Can grouping load instructions together reduce the total latency of the group of loads? Similarly, are there speedups with how memory is physically organized (i.e. can we group memory that is loaded together next to each other physically so there isn't as much jumping around to find data)?