I'm curious if there are any random partitioning or scheduling of tasks where we have no prior knowledge of the workload (aka very little or maybe no predictability of workload cost per task), if randomizing the assignment of independent tasks can improve performance. Seems like an easy but powerful improvement!

So you could randomly assign tasks, and in expectation the workload would be balanced (in the sense that each processor has the same expected workload). But in practice there could still be high variance between processors depending on (1) the variance in task workload and (2) variance in other parts of the system (e.g., latencies are not exact and can vary).

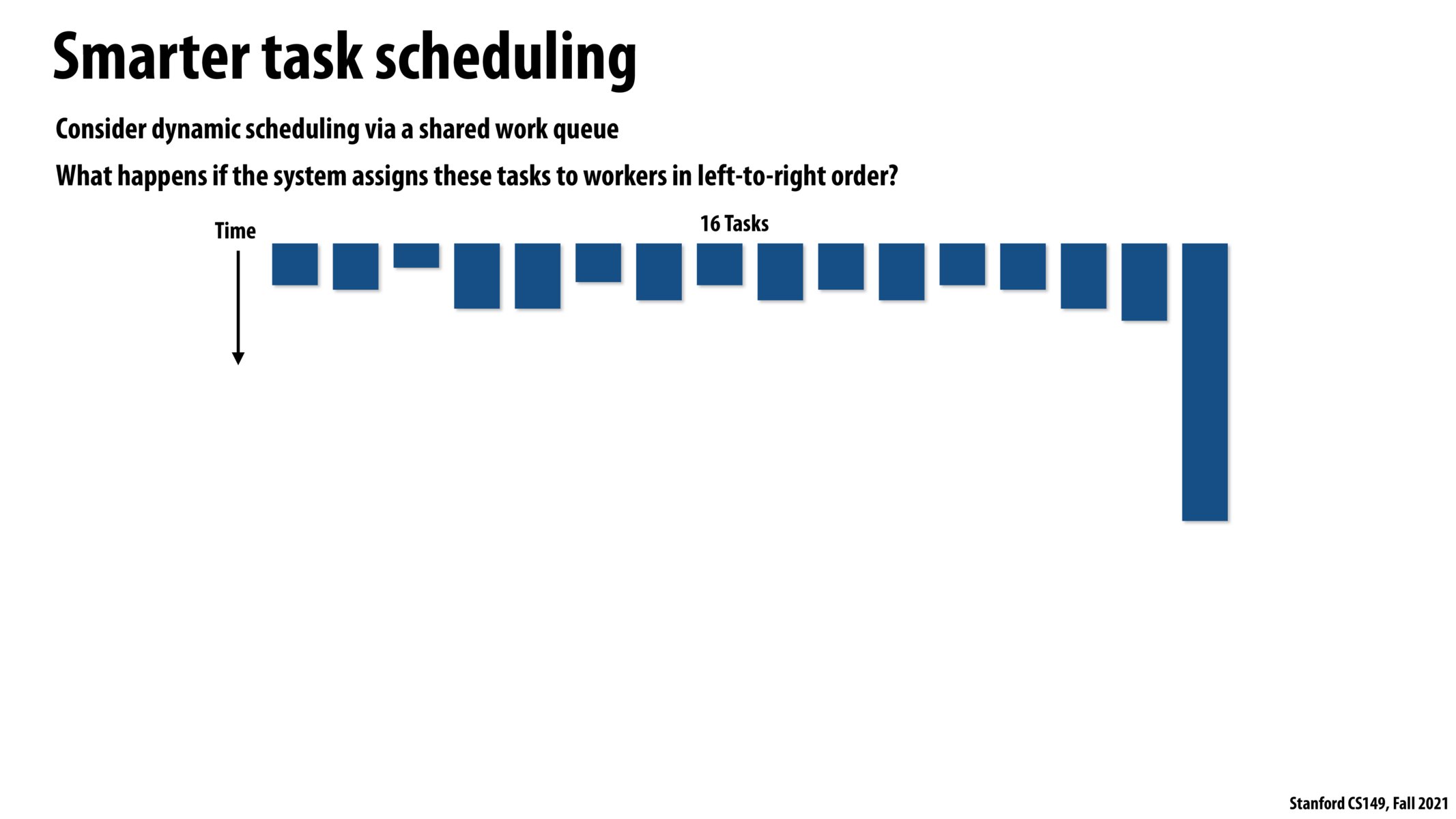

An alternative strategy we discussed in this lecture is maintaining a work queue that threads pull from whenever they are ready for more work. This ensures all processors always have some work to do with the downside that there is a bit more overhead managing these threads.

Please log in to leave a comment.

The point of this slide is to illustrate that if you have a pool of tasks that are getting executed by a fixed number of workers, you can get very unlucky if the last task to be picked up is the longest task.