Knowing your domain well is key to being able to come up with novel ways to parallelize previously un-parallelizable looking code.

@12345 I believe that the red and black nodes are alternately computed as the average of their neighbors so in order to compute the red node in a particular iteration we would only need to know the red and black values from the previous iteration.

This requires the domain knowledge that these alternating updates will converge to roughly the same as updating each node one after another.

Does this also mean that increasing the number of processing threads would lead to significantly larger overheads in terms of data transfer?

It took a while before I understood that blocked assignment will cost less in communication resources. It makes sense why that's the case now. I guess how do you figure out the chessboard pattern in the first place? Does it come up often when writing parallel code?

I'm still confused how P3 is able to get the new values so that it can send them over to P2? If P2 is waiting for data from P3, but it looks like the top row depends on values from P2, don't we have a chicken-egg type problem here? Or is there some sort of sequential kickoff that must occur before we can start the parallelization part of this?

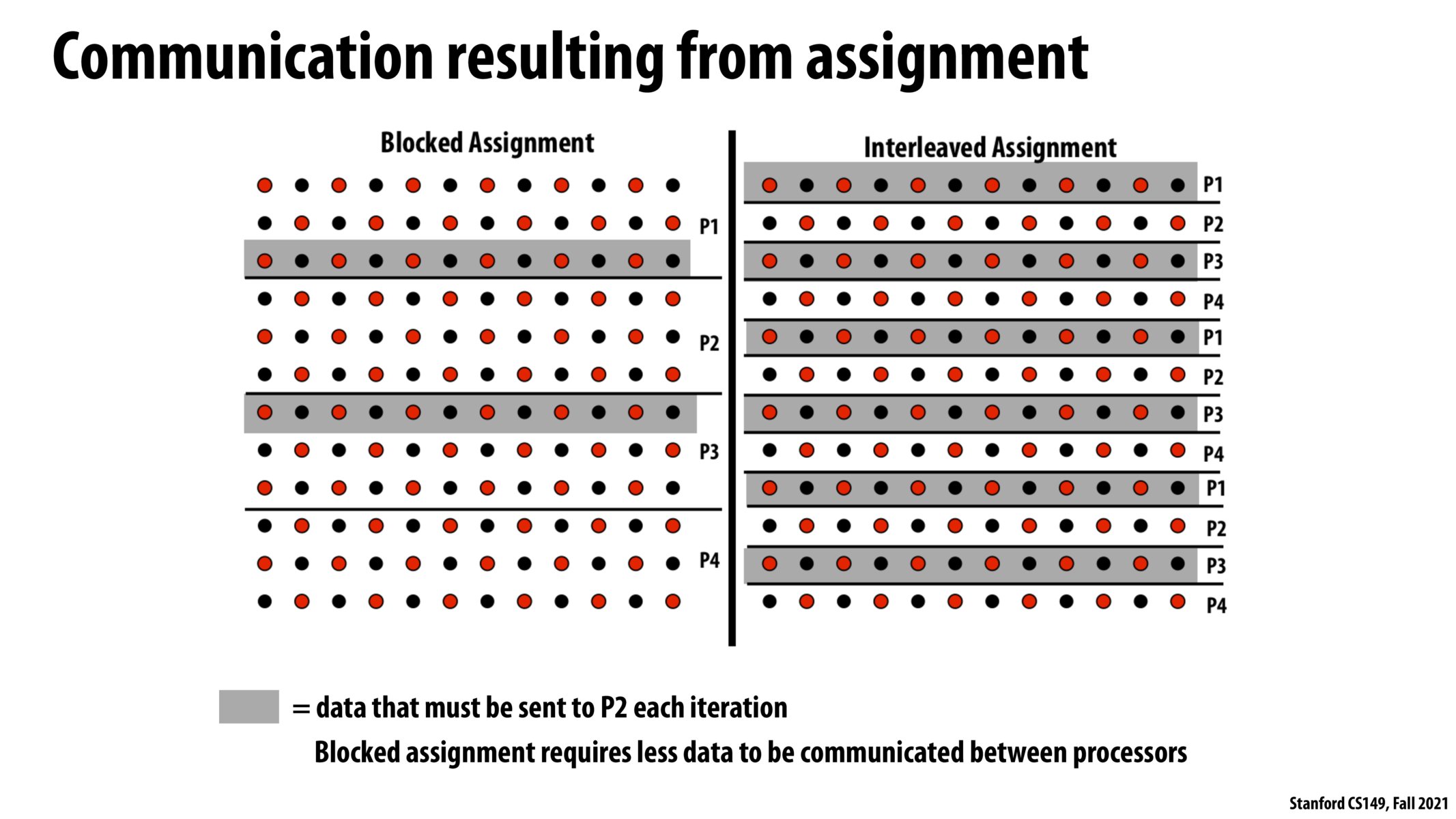

@derrick My understanding is that it is contingent on the specific dependencies of the problem. In the original problem, we had each row dependent on the row above it. However, remember that we reformulated the problem to process all of the red cells first, coordinate updates, and then after process the black cells. Thus, P3 does not need to send new values to P2 in order for P2 to compute the red cells in parallel. Instead, P3 just needs to send the old values for the black cells. Importantly, the block assignment method still has significantly less communication than the interleaved assignment.

The idea in the slide is that a row is dependent on the row above it and the one below it. Therefore, if two consecutive rows belong to different threads then there is a need of communication between the threads. The communication cost then becomes proportional to the number of 'switches', the number of rows where the next row is handled by a different thread. Blocked assignment minimized the number of switches.

Please log in to leave a comment.

Does this mean that we must wait neighbor processes to complete their previous iterations first before the process starts its next iteration?