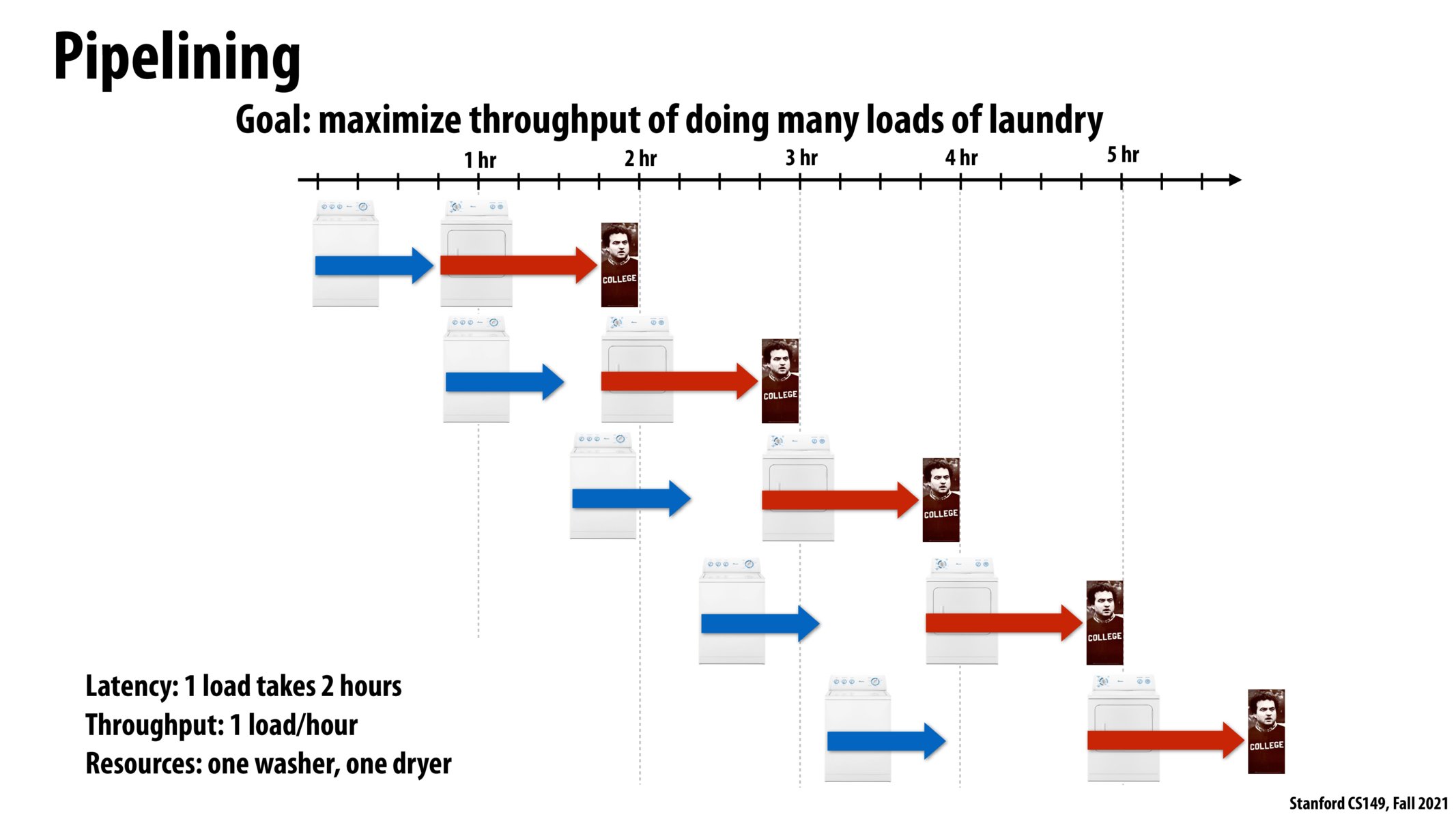

I really liked this illustration for processors. It really helped me understand the latency that comes from memory.

It is interesting to see that the dependency on an empty dryer increases the latency of each successive load, while the throughput stays the same.
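To make that concrete, here is a rough sketch of the schedule in which the washer never waits and wet loads simply queue for the dryer. The 45-minute wash and 60-minute dry times are my own assumptions for illustration (not read off the slide), and `never_idle_washer` is a hypothetical helper name.

```python
WASH_MIN = 45  # assumed wash time in minutes (illustrative, not from the slide)
DRY_MIN = 60   # assumed dry time in minutes (illustrative, not from the slide)


def never_idle_washer(num_loads, wash=WASH_MIN, dry=DRY_MIN):
    """Washer starts a new load the moment it finishes the last one.

    Wet loads wait in an unlimited pile until the dryer is free.
    Returns one (wash_start, dry_end, latency) tuple per load.
    """
    results = []
    dryer_free = 0  # time at which the dryer finishes its current load
    for i in range(num_loads):
        wash_start = i * wash                   # washer is back to back
        wash_end = wash_start + wash
        dry_start = max(wash_end, dryer_free)   # wait for an empty dryer
        dry_end = dry_start + dry
        dryer_free = dry_end
        results.append((wash_start, dry_end, dry_end - wash_start))
    return results


for load, (start, done, latency) in enumerate(never_idle_washer(5)):
    print(f"load {load}: washed at {start} min, dry at {done} min, "
          f"latency {latency} min")
```

Under these assumed times, each load's latency is 15 minutes longer than the previous one, yet a load still comes out of the dryer every 60 minutes, so the throughput is set by the slower stage.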

Shouldn't the washer be depicted as starting in step with the dryer on each new cycle? As depicted here, the washer is never idle (it starts the next load as soon as its previous cycle finishes), but we know it has to idle for 15 mins until the dryer has finished the previous load and has room for the load the washer just finished.
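A minimal sketch of that alternative schedule, where a finished load has to sit in the washer until the dryer is empty. It assumes a 45-minute wash and a 60-minute dry, chosen only so that the gap matches the 15 mins mentioned above; `blocking_washer` is a hypothetical name.

```python
def blocking_washer(num_loads, wash=45, dry=60):
    """No storage between stages: wet clothes stay in the washer until
    the dryer is empty, so the washer cannot start its next load.

    wash/dry are assumed times in minutes, not values from the slide.
    Returns one (wash_start, idle_minutes, latency) tuple per load.
    """
    results = []
    washer_free = 0  # time the washer is emptied and can start again
    dryer_free = 0   # time the dryer finishes its current load
    for _ in range(num_loads):
        wash_start = washer_free
        wash_end = wash_start + wash
        dry_start = max(wash_end, dryer_free)  # load waits inside washer
        dry_end = dry_start + dry
        idle = dry_start - wash_end            # washer holding a done load
        washer_free = dry_start                # emptied only at hand-off
        dryer_free = dry_end
        results.append((wash_start, idle, dry_end - wash_start))
    return results


for load, (start, idle, latency) in enumerate(blocking_washer(4)):
    print(f"load {load}: wash starts at {start} min, washer idles "
          f"{idle} min, latency {latency} min")
```

With these assumed times the washer idles 15 minutes on every load after the first, and the latency settles at 120 minutes instead of growing. The dryer is still the bottleneck, so a load finishes every 60 minutes either way.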

I guess to resolve @apappu's point and fully complete the analogy, we need some sort of memory / cache where wet clothes can sit in between being washed and being dried -- and depending on the solution used, there may be relevant additional latencies in transporting the clothes to/from this extra location. In the limit, since the intermediate storage is finite, the washer will eventually be forced to idle.

@fractal that is my understanding as well -- in the abstract we know there has to be some idle time, whether the washer has to hold the completed laundry or can put it into some finite buffer that will eventually fill up. So perhaps the detail of where the washer idles isn't that important and I'm focusing on the wrong detail.

@apappu I think the finite buffer really only makes sense as a computing solution if we expect the slack to be picked up / evened out eventually, that is, that it would only be used temporarily. If one element is perpetually slower, then we will have to just compromise and be limited by that. In CS144 (networking) buffers like this were discussed, and are used to avoid issues when the speed of things is temporarily off balance.
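Here is a rough simulation of the finite-buffer version discussed in this thread, under stated assumptions: a 45-minute wash, a 60-minute dry, zero time to move clothes, and a basket holding `capacity` wet loads. The function name and parameters are hypothetical, and the transport latency mentioned earlier is ignored.

```python
import math


def simulate_buffer(num_loads, capacity, wash=45, dry=60):
    """Washer -> finite basket of wet loads -> dryer, in FIFO order.

    capacity is the number of wet loads the basket can hold (0 = none).
    wash/dry are assumed times in minutes. Returns (stalls, latencies):
    stalls[i] is how long load i sat finished in the washer.
    """
    dry_start, dry_end = [], []
    stalls, latencies = [], []
    washer_free = 0
    for i in range(num_loads):
        wash_start = washer_free
        wash_end = wash_start + wash
        # Earliest time the dryer could take this load directly.
        dryer_ready = dry_end[i - 1] if i >= 1 else 0
        # Earliest time the basket has a free slot for this load.
        if capacity == 0:
            slot_ready = math.inf
        elif i < capacity:
            slot_ready = 0
        else:
            slot_ready = dry_start[i - capacity]
        # The load leaves the washer once either destination is open.
        unload = max(wash_end, min(slot_ready, dryer_ready))
        start = max(unload, dryer_ready)
        dry_start.append(start)
        dry_end.append(start + dry)
        stalls.append(unload - wash_end)
        latencies.append(start + dry - wash_start)
        washer_free = unload
    return stalls, latencies


for cap in (0, 1):
    stalls, latencies = simulate_buffer(7, cap)
    print(f"capacity {cap}: stalls {stalls}, latencies {latencies}")
```

In this sketch a one-load basket only postpones the stall: the washer runs back to back for the first five loads, then idles 15 minutes per load anyway, and latency levels off at 180 minutes rather than 120. That fits the point above that a buffer helps with a temporary imbalance, not with a stage that is perpetually slower.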

This slide really helped me understand why latency is bounded by bandwidth for memory. The Stanford dryers definitely take longer than the washers, so I'm always waiting for them to finish before I can move on to my next set of dirty clothes. This was a great analogy.


I think the latency for one load could be more than 2 hours for subsequent loads, because they need to wait for the current load to finish drying.