How (if at all) does the store instruction factor into this?

If you had very long memory latency, wouldn't you be constrained by number of outstanding memory instructions?

To ask a follow up to @joshco, in the general case doesn't memory latency throughput?

Also, isn't bandwidth is inversely proportional latency? In that case, wouldn't throughout be related to memory latency?

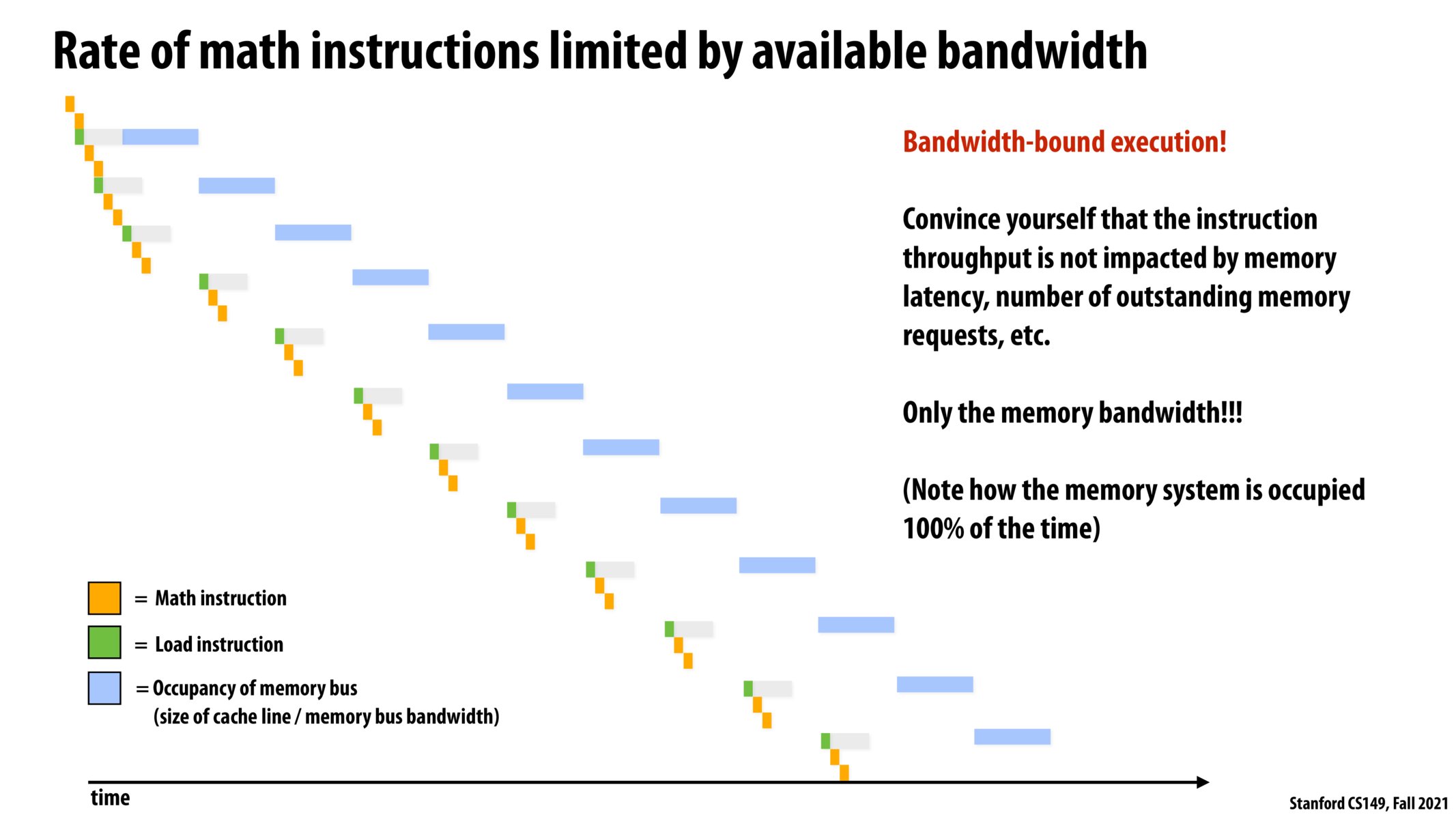

I was also confused by this slide, but I'll take a stab at trying to explain the diagram. What we can do to hide latency is issue multiple threads, but we are already doing that in the diagram (see orange boxes under gray rectangle) and we still can't proceed because we're waiting for memory to give us our output.

At steady state, it is not the gray rectangle that determines our throughput, but the blue rectangle, which is at 100% utilization. Because memory it is at 100% utilization at steady state, what's stalling our pipeline is the fact that it can't take more requests than the pipeline is issuing.

Some students have highlighted that bandwidth and latency are inversely proportional, and I agree with that, which is why I wonder if the diagram meant to say that memory bandwidth dominates the throughput?

Increasing the bandwidth would mean reducing the latency (and vice versa), so latency impacts the throughput, it's just now what dominates this specific scenario.

It will be helpful to consider the following.

-

Let's assume memory requests are for N bytes of data.

-

If the memory system can transfer B bytes per clock to the processor per clock (it has bandwidth B), then it takes N/B cycles to transfer the data. That's the length of the blue bar.

-

There's also memory latency... Assuming that memory is completely idle when a request is made, the latency of a memory request is some fixed latency for the request to get to memory, let's call that T_0, plus the amount of time needed to get the requested data back: N/B. In the diagram, T_0 is the fixed latency from the time of the start of the request, until the transfer happens. That's the gray bar.

-

Of course, if the memory system is busy when a request is made... then the latency is T_0 + time spent waiting for the memory system to free up + N/B.

-

Now assume that there is some mechanism to hide memory latency in this system. That might be the ability for the processor to issue multiple outstanding memory requests while working on other instructions (out of order execution, multi-threading, etc.) or perhaps prefetch data before it is required. Either way... assume that we can hide any memory latency with useful work (orange instructions).

-

Now, under these conditions, convince yourself that the rate that memory requests complete is determined entirely by the length of the blue bar.. That is, the only way to, in steady state, issue orange instructions at a faster rate, is to decrease the length of the blue bar, which, for a given request size N, means you have to increase B.

One final thought. Notice that at all points in time, there is a blue bar present. That means that memory is transferring data to the processor 100% of the time. It's fully utilized. And if you are fully utilizing the memory system, there's no way to get data from memory to the processor any faster.

Hi @kayvonf, you mentioned in the previous slide that there can be only 2 outstanding memory requests at once so that the third LD request happens 1 cycle after its previous ADD request. So can I interpret this instruction stream is also slightly affected by memory latency?

It was interesting to note how the instruction throughput was not affected by the rate of math instructions once the program was executing at full memory bandwidth.

Please log in to leave a comment.

the fact that instruction throughput is bounded by memory bandwidth can also be confirmed by the equation to compute the occupancy of memory bus: size of cache line / memory bus bandwidth.