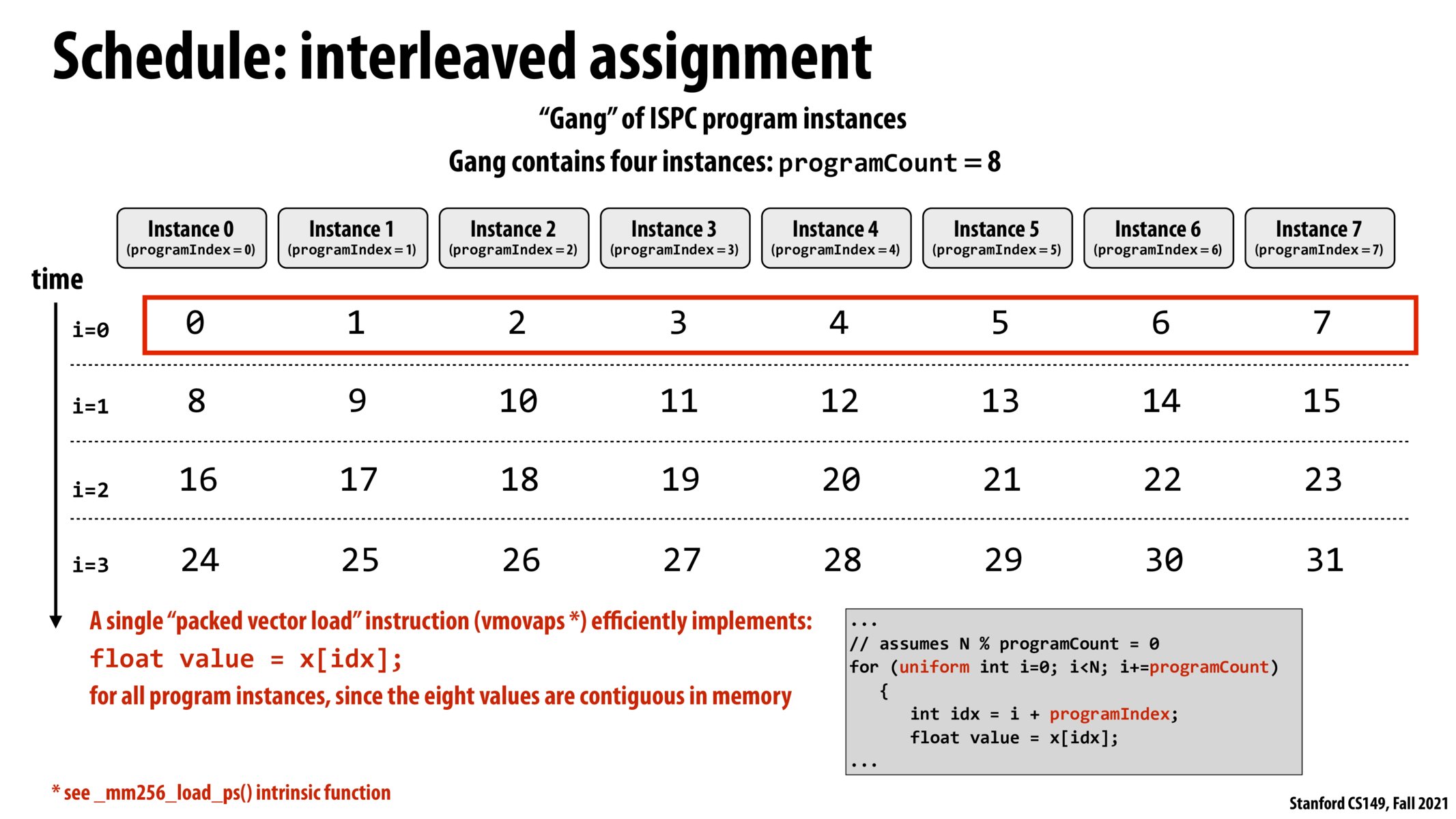

With the access pattern described in this slide, ISPC probably gets to emit more efficient load instructions, particularly for aligned data such as with the vmovapd/s family. This is in contrast to the next slide that shows data scattered throughout memory on each iteration. It would be great if somebody could provide more information on the costs of unaligned loads and gathers, maybe uOp counts, to compare against aligned loads.

@subscalar I'm wondering if there is some metadata (like a header or something?) involved when returning individual load requests from non-contiguous memory that would increase the amount of bandwidth the response takes up. I also would be curious about the actual implementation of this too.

in this example, the interleaved implementation is better because all the SIMD ALUs within each processor are operating on contiguous data. when we fetch data from memory to cache, depending on the size of the cache line, it is likely that we will prefetch some data from memory. operating on contiguous data exploits this type of locality

Ahh I see. Each data fetch does not just grab the data we need. It may grab more data than we need. Assigning one instance to consecutive array elements exploits this feature so that we don't have to make as many load instructions. Is my understanding correct?

Please log in to leave a comment.

This is a really cool implication of how programs are heavily affected by memory throughput!