I think the checkpoint would record the lineage of all currently persisted RDDs so that they can be reconstructed from the initial data when memory is back up. Lineage essentially stores the sequence of transforms applied to the input data up to the last call to persist for each RDD. Spark does not checkpoint every transformed or persisted RDD because that would cost too much storage space. Storing a sequence of transforms as lineage simplifies the recovery process and reduces the amount of storage space required for that purpose.
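To make the lineage idea concrete, here is a toy sketch in plain Python. It is not Spark's API: `ToyRDD`, `lineage_depth`, and the `cached` field are hypothetical names I made up for illustration. Each object remembers only its parent and the transform that produced it, so a lost cached result can be recomputed from the source data.

```python
class ToyRDD:
    """Hypothetical stand-in for an RDD: it records lineage, not necessarily data."""

    def __init__(self, source=None, parent=None, fn=None):
        self.source = source    # initial data (only set on the root)
        self.parent = parent    # the ToyRDD this one was derived from
        self.fn = fn            # transform applied to the parent's output
        self.cached = None      # in-memory copy, filled in by persist()

    def map(self, f):
        return ToyRDD(parent=self, fn=lambda xs: [f(x) for x in xs])

    def filter(self, p):
        return ToyRDD(parent=self, fn=lambda xs: [x for x in xs if p(x)])

    def persist(self):
        # Materialize the result and keep it in memory.
        self.cached = self.compute()
        return self

    def compute(self):
        if self.cached is not None:
            return self.cached
        if self.parent is None:
            return list(self.source)
        # No cached copy: replay the recorded transform on the parent's output.
        return self.fn(self.parent.compute())

    def lineage_depth(self):
        return 0 if self.parent is None else 1 + self.parent.lineage_depth()


base = ToyRDD(source=range(10))
evens_squared = base.filter(lambda x: x % 2 == 0).map(lambda x: x * x).persist()

before = evens_squared.compute()
evens_squared.cached = None      # simulate losing the in-memory copy
after = evens_squared.compute()  # rebuilt by replaying filter, then map
```

The only recovery state kept here is two small closures plus a pointer to the source, which is the storage saving the comment above describes. Real Spark tracks this per partition and is considerably more involved.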

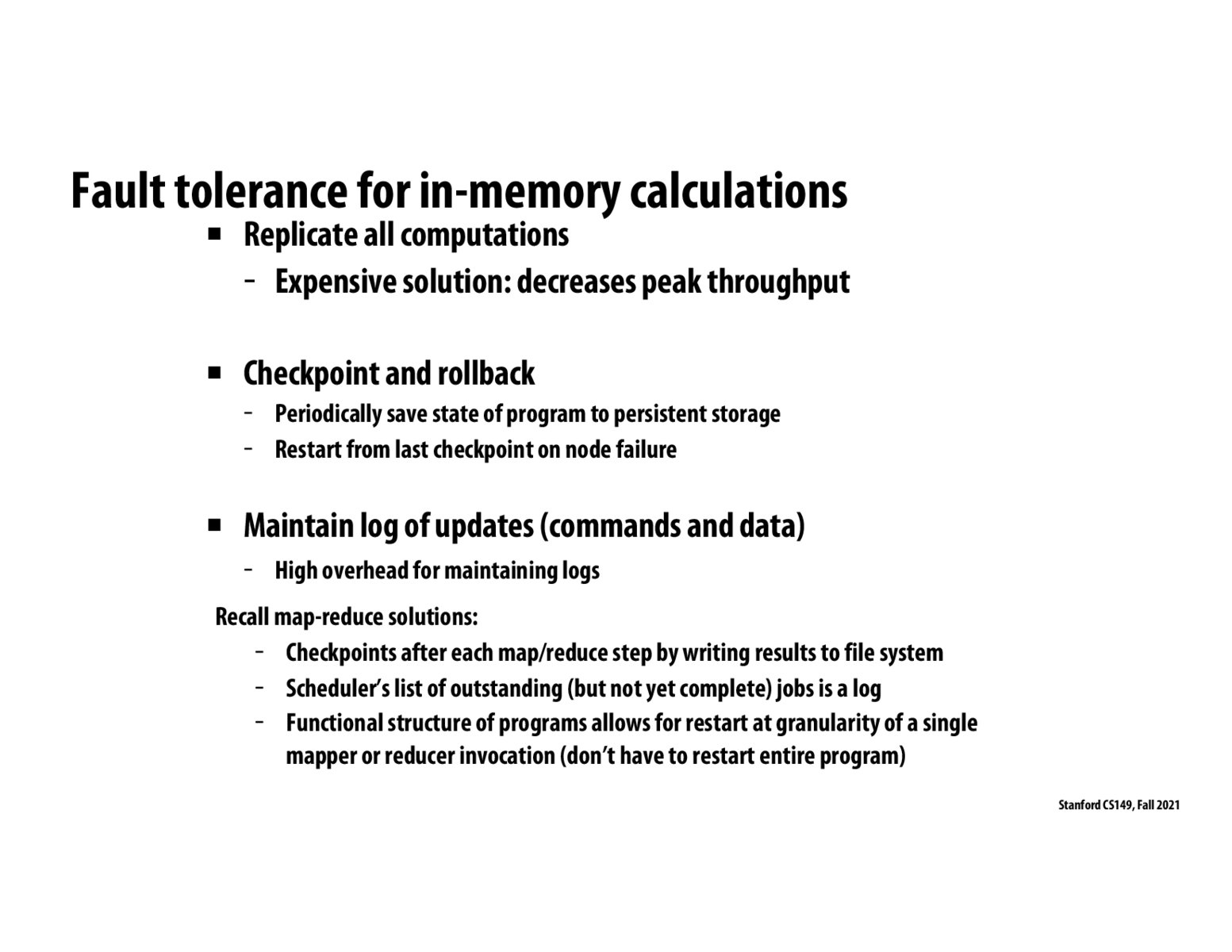

Is peak throughput reduced when all computations are replicated because cycles are spent on managing replicas and the related state maintenance instead of on useful work?

To confirm my understanding, does the RDD approach fall into any of these methods?

@shivalgo that was my impression as well

@12345 yep, RDD is a new programming abstraction that is different from the 3 described on this slide.


Can someone further elaborate on what it means for there to be a "checkpoint"? What I notice from this is that we have different "layers" of RDDs that each seem to operate on a specific partition. In the event of a failure, do we simply go back to the last RDD and re-run the partition? I'm confused about the error checking and the solutions for this! Additionally, what does it mean to write the results to the file system? Is this just the next RDD?