Back to Lecture Thumbnails

martigp

ghostcow

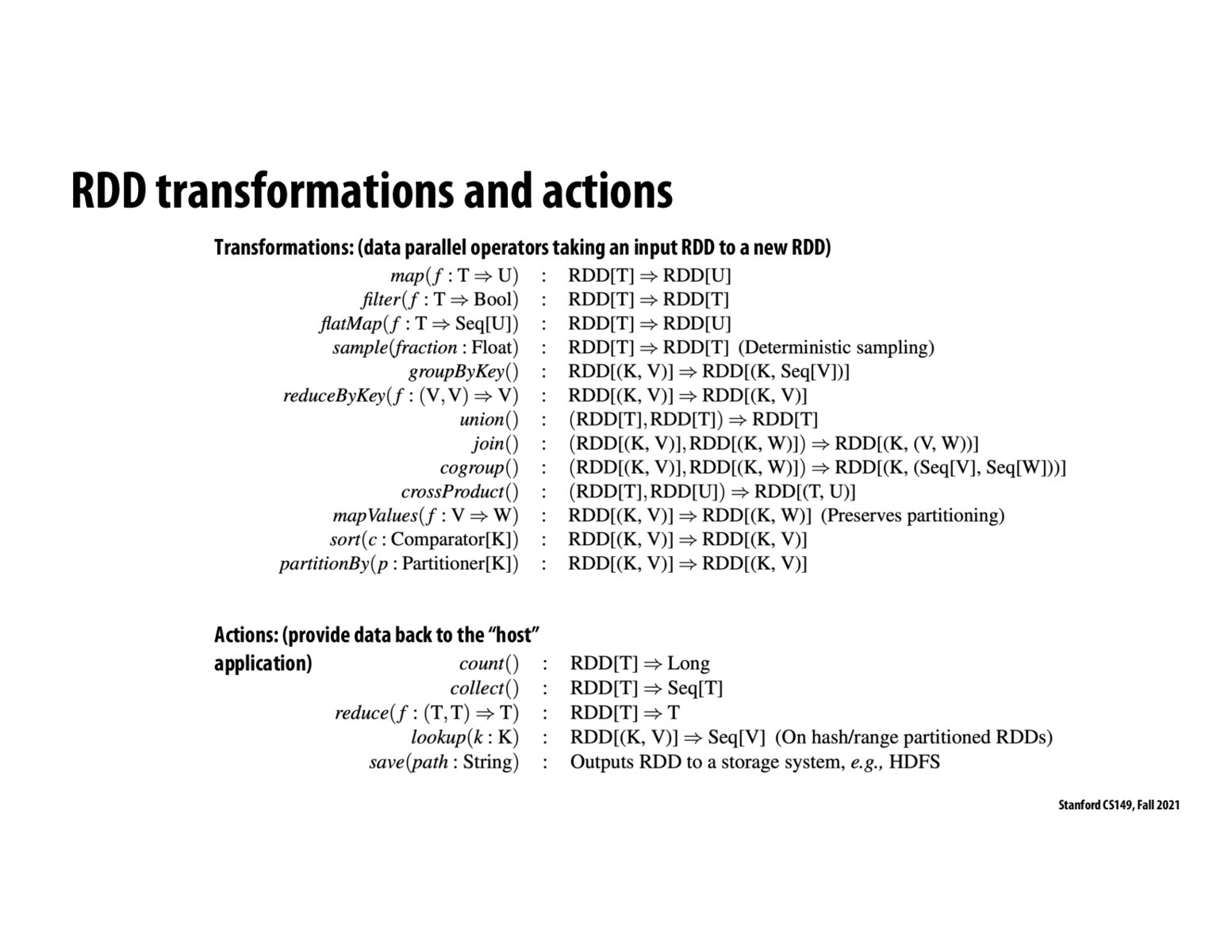

From their website, Apache Spark is a general-purpose cluster computing engine with APIs in Scala, Java and Python and libraries for streaming, graph processing and machine learning. At the beginning, it was started as a library for in-memory fault-tolerant distributed computing, using the data-parallel model discussed in the previous lecture. It's since expanded to various other domains, the core idea remaining the in-memory resilient distributed dataset (RDD).

mcu

@martigp It's both! It's a specific piece of software that implements a distinct model for creating distributed/parallel systems! It's a cluster runtime, a client library, and also a model and way of thinking for distributed computing.

Please log in to leave a comment.

Copyright 2021 Stanford University

I am a bit confused as to what spark is, is it a library for distributed computing or is it more of a way of approaching distributed computation? I.e. to we specifically invoke spark or do we approach distributed computing with a spark model.