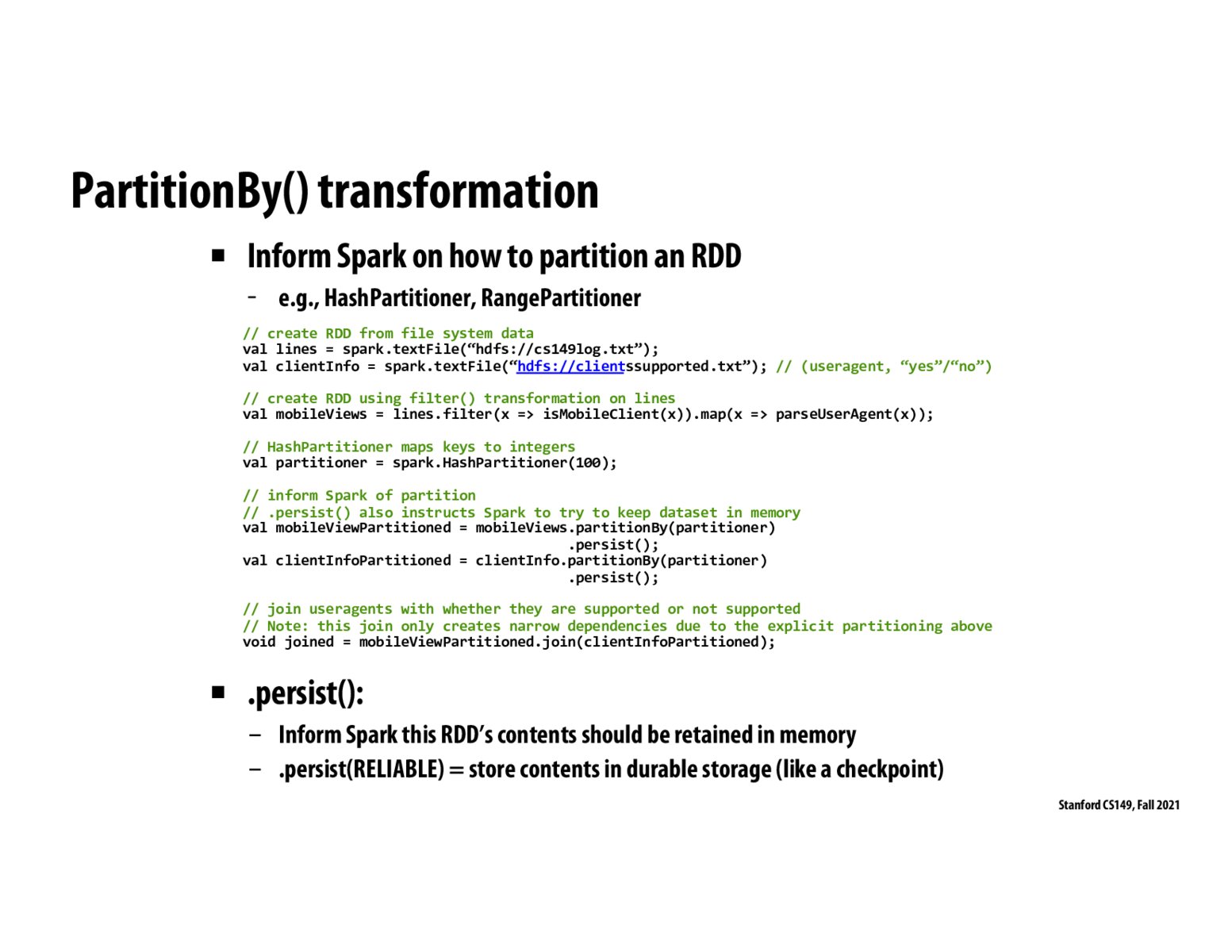

@juliob In my understanding, the two lines of code using "partitionBy" redistrubuted the data across nodes based on the hashed value of the keys for each key-value pair, so that key-value pairs with the same key (in newly derived RDD "mobileViewPartitioned" and "clientInfoPartitioned") go to the same node and we have narrow dependencies downstream. As for why Spark couldn't do that optimization for us, I'm not sure myself, maybe another student / TA could give an answer.

My understanding of what is happening with these two partitions is that by partitioning mobileView and clientInfo, when we do the join(), we have only narrow dependencies. However, what I don't understand is how this is better than doing the join() without the partition. It seems that the expensive operation here is the cross-node communication, which still needs to occur in the partitionBy() step that we do twice (once for mobileView and once for clientInfo). It doesn't seem to me that we've effectively cut down on the cross-node communication, we're just doing it all first (in a sort of pre-computation step) rather than during the join().

My question is how do I go about thinking about partitions such that I can understand why this is more efficient than joining without the partitions? What core concept am I missing in my understanding stated above?

@shreya_ravi I think the benefit of the partitionBy function is more apparent in a scenario where there are multiple functions like join that have wide dependencies. Without the partitionBy function we will have to do cross-node communication for each of these functions. With the partitionBy function we amortize our costs, we only do one expensive operation that requires cross-node communication (partitionBy) and then the rest of our functions now only have narrow dependencies.

How often can such large amounts of data be simply into narrow dependencies via HashPartitioner or RangePartitioner?

Please log in to leave a comment.

I'm confused what is happening with the hash partitioner. I get the idea of wanting narrow dependencies by putting the same groups on the same node, but what exactly is the code doing above to make that happen? Is it that the same keys would go to the same node? Why did we as programmers have to add that in - is that not an obvious optimization spark could've made for us?